Hieu Trung Tran

XMainframe: A Large Language Model for Mainframe Modernization

Aug 05, 2024

Abstract: Mainframe operating systems, despite their inception in the 1940s, continue to support critical sectors like finance and government. However, these systems are often viewed as outdated, requiring extensive maintenance and modernization. Addressing this challenge necessitates innovative tools that can understand and interact with legacy codebases. To this end, we introduce XMainframe, a state-of-the-art large language model (LLM) specifically designed with knowledge of mainframe legacy systems and COBOL codebases. Our solution involves the creation of an extensive data collection pipeline to produce high-quality training datasets, enhancing XMainframe's performance in this specialized domain. Additionally, we present MainframeBench, a comprehensive benchmark for assessing mainframe knowledge, including multiple-choice questions, question answering, and COBOL code summarization. Our empirical evaluations demonstrate that XMainframe consistently outperforms existing state-of-the-art LLMs across these tasks. Specifically, XMainframe achieves 30% higher accuracy than DeepSeek-Coder on multiple-choice questions, doubles the BLEU score of Mixtral-Instruct 8x7B on question answering, and scores six times higher than GPT-3.5 on COBOL summarization. Our work highlights the potential of XMainframe to drive significant advancements in managing and modernizing legacy systems, thereby enhancing productivity and saving time for software developers.

Predicting Job Titles from Job Descriptions with Multi-label Text Classification

Dec 21, 2021

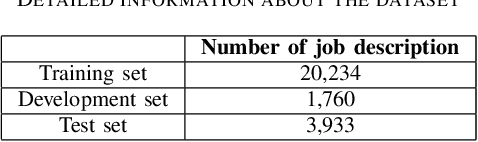

Abstract: Finding a suitable job and finding eligible candidates are important to job seekers and human resource agencies. Given the vast amount of information in job descriptions, employees and employers need assistance in automatically detecting job titles from job description texts. In this paper, we propose a multi-label classification approach for predicting relevant job titles from job description texts, and implement Bi-GRU-LSTM-CNN with different pre-trained language models for the job title prediction problem. BERT with a multilingual pre-trained model obtains the highest F1-scores on both sets: 62.20% on the development set and 47.44% on the test set.

Automatically Detecting Cyberbullying Comments on Online Game Forums

Jun 03, 2021

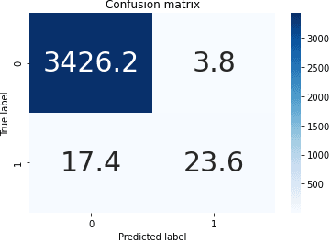

Abstract: Online game forums are popular with most game players, who use them to communicate, discuss game strategy, and even make friends. However, game forums also contain abusive and harassing speech that disturbs and threatens players. Therefore, it is necessary to automatically detect and remove cyberbullying comments to keep game forums clean and friendly. We use the Cyberbullying dataset collected from World of Warcraft (WoW) and League of Legends (LoL) forums and train classification models to automatically detect whether a player's comment is abusive. The Toxic-BERT model achieves a macro F1-score of 82.69% on the LoL forum and 83.86% on the WoW forum on the Cyberbullying dataset.
