Henry WJ Reeve

Optimistic bounds for multi-output prediction

Feb 22, 2020

Abstract:We investigate the challenge of multi-output learning, where the goal is to learn a vector-valued function based on a supervised data set. This includes a range of important problems in Machine Learning including multi-target regression, multi-class classification and multi-label classification. We begin our analysis by introducing the self-bounding Lipschitz condition for multi-output loss functions, which interpolates continuously between a classical Lipschitz condition and a multi-dimensional analogue of a smoothness condition. We then show that the self-bounding Lipschitz condition gives rise to optimistic bounds for multi-output learning, which are minimax optimal up to logarithmic factors. The proof exploits local Rademacher complexity combined with a powerful minoration inequality due to Srebro, Sridharan and Tewari. As an application we derive a state-of-the-art generalization bound for multi-class gradient boosting.

The K-Nearest Neighbour UCB algorithm for multi-armed bandits with covariates

Mar 01, 2018

Abstract:In this paper we propose and explore the k-Nearest Neighbour UCB algorithm for multi-armed bandits with covariates. We focus on a setting where the covariates are supported on a metric space of low intrinsic dimension, such as a manifold embedded within a high dimensional ambient feature space. The algorithm is conceptually simple and straightforward to implement. The k-Nearest Neighbour UCB algorithm does not require prior knowledge of either the intrinsic dimension of the marginal distribution or the time horizon. We prove a regret bound for the k-Nearest Neighbour UCB algorithm which is minimax optimal up to logarithmic factors. In particular, the algorithm automatically takes advantage of both low intrinsic dimensionality of the marginal distribution over the covariates and low noise in the data, expressed as a margin condition. In addition, focusing on the case of bounded rewards, we give corresponding regret bounds for the k-Nearest Neighbour KL-UCB algorithm, which is an analogue of the KL-UCB algorithm adapted to the setting of multi-armed bandits with covariates. Finally, we present empirical results which demonstrate the ability of both the k-Nearest Neighbour UCB and k-Nearest Neighbour KL-UCB to take advantage of situations where the data is supported on an unknown sub-manifold of a high-dimensional feature space.

* To be presented at ALT 2018
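
The general idea of a nearest-neighbour upper confidence bound can be sketched as follows. This is a deliberately simplified illustration and not the algorithm from the paper: it uses a fixed `k` (the paper's method needs no such prior tuning), and the function names, the `theta` constant, and the choice to add the neighbour radius with an implicit Lipschitz constant of 1 are all assumptions made for the example.

```python
import numpy as np

def knn_ucb_index(contexts, rewards, x, k, t, theta=2.0):
    """Optimistic index for a single arm at context x (simplified, fixed k).

    contexts: past contexts at which this arm was played
    rewards:  rewards observed at those contexts
    """
    if len(contexts) < k:
        return np.inf  # too little data for this arm: force exploration
    dists = np.linalg.norm(np.asarray(contexts) - x, axis=1)
    nearest = np.argsort(dists)[:k]
    mean_reward = np.mean(np.asarray(rewards)[nearest])
    radius = dists[nearest].max()            # distance to the k-th neighbour
    bonus = np.sqrt(theta * np.log(t) / k)   # confidence width (assumed form)
    return mean_reward + bonus + radius

def choose_arm(history, x, k, t):
    """history: one (contexts, rewards) pair per arm. Returns the arm to play."""
    indices = [knn_ucb_index(c, r, x, k, t) for c, r in history]
    return int(np.argmax(indices))
```

Arms with fewer than `k` observations receive an infinite index, so each arm is tried before the estimates are compared.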

Diversity and degrees of freedom in regression ensembles

Mar 01, 2018

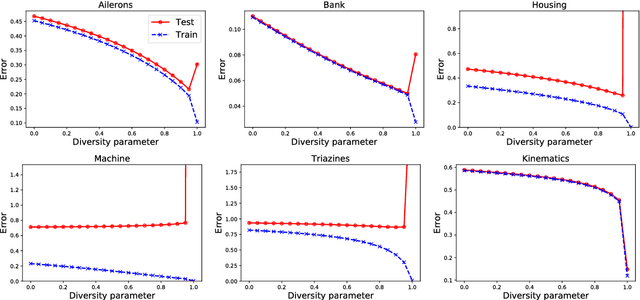

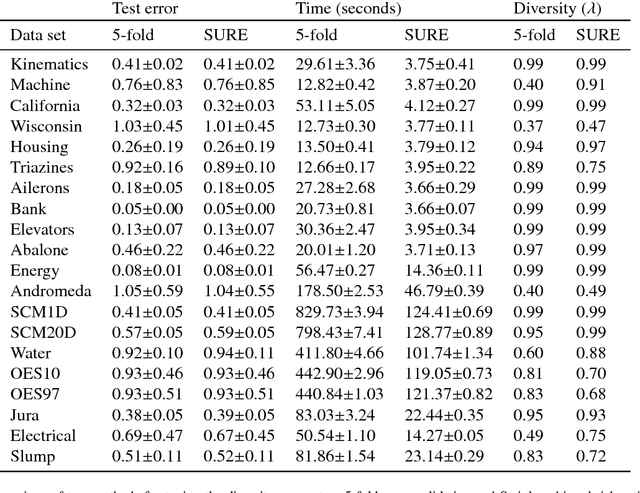

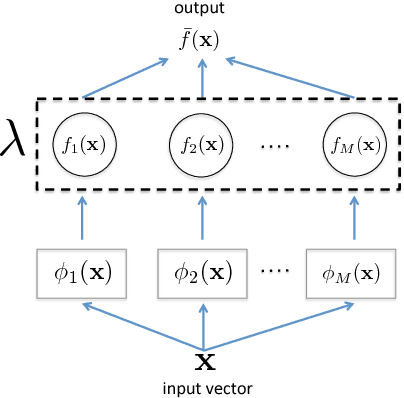

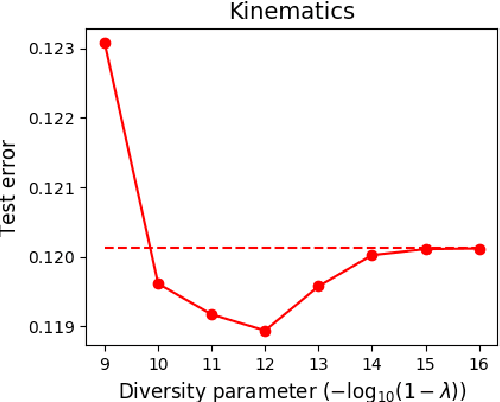

Abstract:Ensemble methods are a cornerstone of modern machine learning. The performance of an ensemble depends crucially upon the level of diversity between its constituent learners. This paper establishes a connection between diversity and degrees of freedom (i.e. the capacity of the model), showing that diversity may be viewed as a form of inverse regularisation. This is achieved by focusing on a previously published algorithm, Negative Correlation Learning (NCL), in which model diversity is explicitly encouraged through a diversity penalty term in the loss function. We provide an exact formula for the effective degrees of freedom in an NCL ensemble with fixed basis functions, showing that it is a continuous, convex and monotonically increasing function of the diversity parameter. We demonstrate a connection to Tikhonov regularisation and show that, with an appropriately chosen diversity parameter, an NCL ensemble can always outperform the unregularised ensemble in the presence of noise. We demonstrate the practical utility of our approach by deriving a method to efficiently tune the diversity parameter. Finally, we use a Monte-Carlo estimator to extend the connection between diversity and degrees of freedom to ensembles of deep neural networks.

* Neurocomputing 2018
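
To make the diversity penalty concrete, here is a minimal sketch of one common way to write an NCL-style objective for squared loss: the average individual error minus a multiple of the spread of the learners around the ensemble mean. The function name `ncl_loss` and the normalisation are assumptions for illustration; the exact form used in the paper may differ.

```python
import numpy as np

def ncl_loss(preds, y, lam):
    """NCL-style objective for an ensemble under squared loss.

    preds: array of shape (M, n), predictions of M learners on n points
    y:     array of shape (n,), regression targets
    lam:   diversity parameter (0 = independent training)
    """
    ensemble = preds.mean(axis=0)                 # averaged prediction
    individual_error = ((preds - y) ** 2).mean()  # mean error of the learners
    diversity = ((preds - ensemble) ** 2).mean()  # spread around the ensemble
    return individual_error - lam * diversity
```

Under this formulation, `lam = 0` trains each learner independently, while `lam = 1` recovers the squared error of the averaged ensemble, by the ambiguity decomposition for squared loss.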

Minimax rates for cost-sensitive learning on manifolds with approximate nearest neighbours

Mar 01, 2018

Abstract:We study the approximate nearest neighbour method for cost-sensitive classification on low-dimensional manifolds embedded within a high-dimensional feature space. We determine the minimax learning rates for distributions on a smooth manifold, in a cost-sensitive setting. This generalises a classic result of Audibert and Tsybakov. Building upon recent work of Chaudhuri and Dasgupta we prove that these minimax rates are attained by the approximate nearest neighbour algorithm, where neighbours are computed in a randomly projected low-dimensional space. In addition, we give a bound on the number of dimensions required for the projection which depends solely upon the reach and dimension of the manifold, combined with the regularity of the marginal.

* Published in ALT 2017
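
The idea of computing neighbours in a randomly projected space can be illustrated with a short sketch. It assumes a binary problem with labels in {0, 1}, a Gaussian random projection, and a plug-in cost-sensitive threshold; the function name and parameters (`proj_dim`, `cost_fp`, `cost_fn`) are hypothetical and are not taken from the paper.

```python
import numpy as np

def projected_knn_predict(X_train, y_train, X_test, k, proj_dim,
                          cost_fp, cost_fn, seed=0):
    """Cost-sensitive k-NN with neighbours found after a random projection."""
    rng = np.random.default_rng(seed)
    ambient_dim = X_train.shape[1]
    # Gaussian random projection to proj_dim dimensions (assumed choice).
    P = rng.normal(size=(ambient_dim, proj_dim)) / np.sqrt(proj_dim)
    Z_train, Z_test = X_train @ P, X_test @ P
    # Exact neighbours in the projected space, approximate in the original.
    dists = np.linalg.norm(Z_test[:, None, :] - Z_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    eta_hat = y_train[nearest].mean(axis=1)   # estimated P(y = 1 | x)
    # Predict 1 when the expected cost of a miss exceeds that of a false alarm.
    threshold = cost_fp / (cost_fp + cost_fn)
    return (eta_hat >= threshold).astype(int)
```

With equal costs the threshold is 1/2 and the rule reduces to an ordinary majority vote; raising `cost_fn` lowers the threshold, so the classifier predicts the positive class more readily.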
