Helena Stegherr

Investigating the Impact of Independent Rule Fitnesses in a Learning Classifier System

Jul 12, 2022

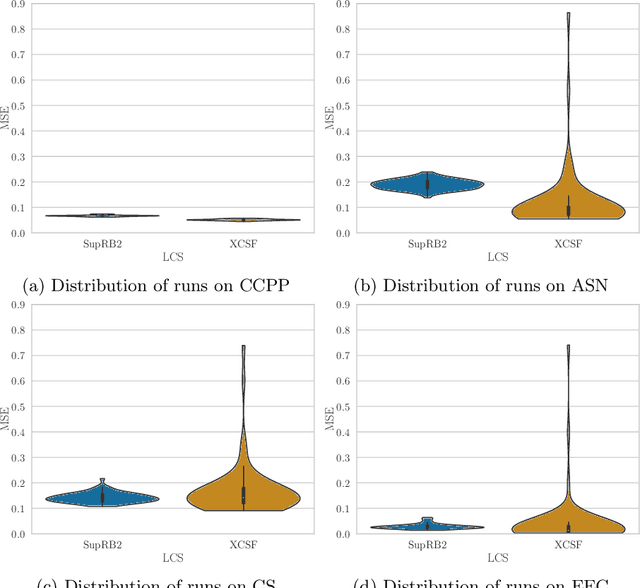

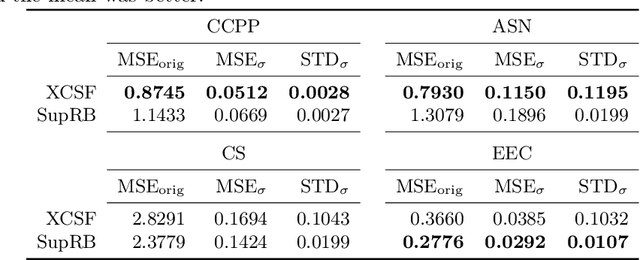

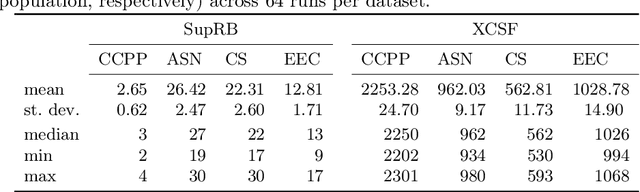

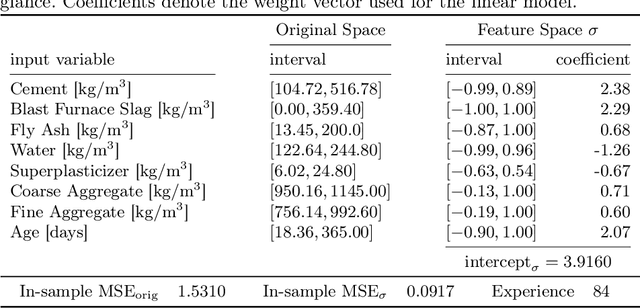

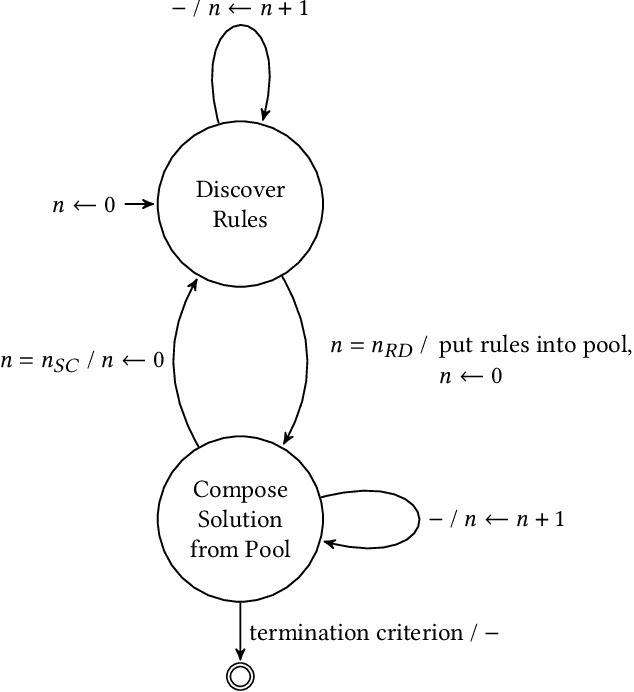

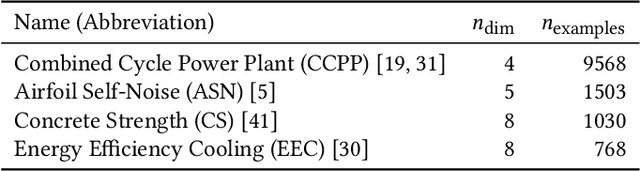

Abstract: Achieving at least some level of explainability requires complex analyses for many machine learning systems, such as common black-box models. We recently proposed a new rule-based learning system, SupRB, to construct compact, interpretable and transparent models by utilizing separate optimizers for the model selection tasks concerning rule discovery and rule set composition. This allows users to specifically tailor their model structure to fulfil use-case specific explainability requirements. From an optimization perspective, this allows us to define clearer goals and we find that -- in contrast to many state of the art systems -- this allows us to keep rule fitnesses independent. In this paper we investigate this system's performance thoroughly on a set of regression problems and compare it against XCSF, a prominent rule-based learning system. We find the overall results of SupRB's evaluation comparable to XCSF's while allowing easier control of model structure and showing a substantially smaller sensitivity to random seeds and data splits. This increased control can aid in subsequently providing explanations for both training and final structure of the model.

Learning Classifier Systems for Self-Explaining Socio-Technical-Systems

Jul 01, 2022

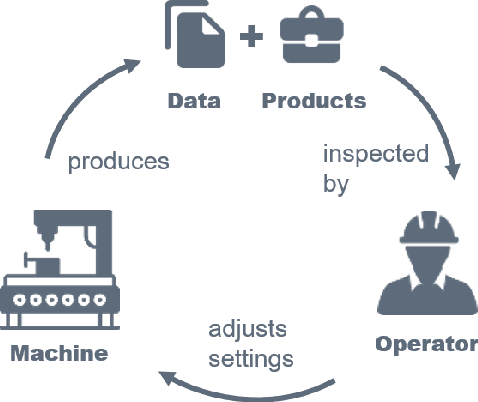

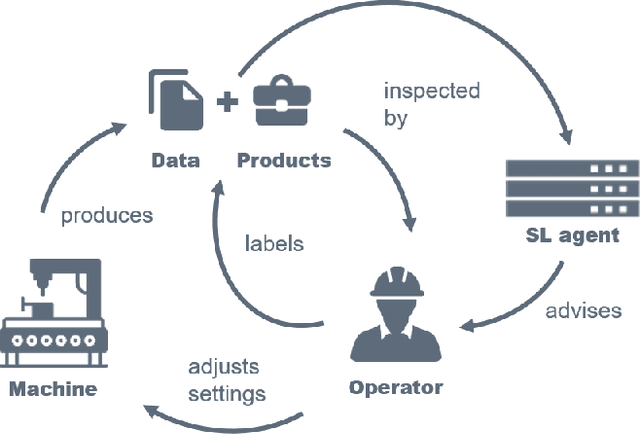

Abstract: In socio-technical settings, operators are increasingly assisted by decision support systems. By employing these, important properties of socio-technical systems such as self-adaptation and self-optimization are expected to improve further. To be accepted by and engage efficiently with operators, decision support systems need to be able to provide explanations regarding the reasoning behind specific decisions. In this paper, we propose the usage of Learning Classifier Systems, a family of rule-based machine learning methods, to facilitate transparent decision making and highlight some techniques to improve that. We then present a template of seven questions to assess application-specific explainability needs and demonstrate their usage in an interview-based case study for a manufacturing scenario. We find that the answers received did yield useful insights for a well-designed LCS model and requirements to have stakeholders actively engage with an intelligent agent.

Separating Rule Discovery and Global Solution Composition in a Learning Classifier System

Feb 03, 2022

Abstract: The utilization of digital agents to support crucial decision making is increasing in many industrial scenarios. However, trust in suggestions made by these agents is hard to achieve, though essential for profiting from their application, resulting in a need for explanations for both the decision making process as well as the model itself. For many systems, such as common deep learning black-box models, achieving at least some explainability requires complex post-processing, while other systems profit from being, to a reasonable extent, inherently interpretable. In this paper we propose an easily interpretable rule-based learning system specifically designed and thus especially suited for these scenarios and compare it on a set of regression problems against XCSF, a prominent rule-based learning system with a long research history. One key advantage of our system is that the rules' conditions and which rules compose a solution to the problem are evolved separately. We utilise independent rule fitnesses, which allows users to specifically tailor their model structure to fit the given requirements for explainability. We find that the results of SupRB2's evaluation are comparable to XCSF's while allowing easier control of model structure and showing a substantially smaller sensitivity to random seeds and data splits. This increased control aids in subsequently providing explanations for both the training and the final structure of the model.
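
The separation described in this abstract can be illustrated with a small sketch. It is not the authors' implementation: the names (`Rule`, `fit_rule`, `discover_rules`, `compose`), the fitness formula, and the complexity weight are all hypothetical, and random sampling plus greedy selection stand in for whatever optimizers the system actually uses. The only points it tries to show are that a rule's fitness is computed from that rule's own matched data, and that choosing which rules form the final model is a second, separate step.

```python
import random
from dataclasses import dataclass


@dataclass
class Rule:
    """Interval condition on a 1-D input with a constant local prediction."""
    low: float
    high: float
    prediction: float = 0.0
    fitness: float = 0.0

    def matches(self, x):
        return self.low <= x <= self.high


def fit_rule(rule, xs, ys):
    """Fit the local model and score the rule using only its own matched data."""
    matched = [y for x, y in zip(xs, ys) if rule.matches(x)]
    if not matched:
        rule.fitness = 0.0
        return rule
    rule.prediction = sum(matched) / len(matched)
    mse = sum((y - rule.prediction) ** 2 for y in matched) / len(matched)
    coverage = len(matched) / len(xs)
    # Independent fitness (assumed formula): no term refers to any other rule.
    rule.fitness = coverage / (1.0 + mse)
    return rule


def discover_rules(xs, ys, n_rules, rng):
    """Phase 1, rule discovery: random intervals stand in for a real optimizer."""
    pool = []
    for _ in range(n_rules):
        low, high = sorted(rng.uniform(min(xs), max(xs)) for _ in range(2))
        pool.append(fit_rule(Rule(low, high), xs, ys))
    return pool


def predict(rules, x, default):
    """Average the predictions of all matching rules; fall back to a default."""
    preds = [r.prediction for r in rules if r.matches(x)]
    return sum(preds) / len(preds) if preds else default


def model_mse(rules, xs, ys, default):
    return sum((predict(rules, x, default) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def compose(pool, xs, ys, complexity_weight=0.01):
    """Phase 2, composition: greedy forward selection trading error against size."""
    default = sum(ys) / len(ys)

    def cost(subset):
        return model_mse(subset, xs, ys, default) + complexity_weight * len(subset)

    chosen, best = [], cost([])
    while True:
        best_rule = None
        for rule in pool:
            if any(rule is c for c in chosen):
                continue
            candidate = cost(chosen + [rule])
            if candidate < best:
                best, best_rule = candidate, rule
        if best_rule is None:
            return chosen
        chosen.append(best_rule)


# Toy regression problem: a step function on [0, 1).
xs = [i / 50 for i in range(50)]
ys = [0.0 if x < 0.5 else 1.0 for x in xs]

pool = discover_rules(xs, ys, n_rules=30, rng=random.Random(0))
solution = compose(pool, xs, ys)
```

Because the fitness of each rule never changes when other rules are added to or removed from the pool, the composition step can be rerun with a different `complexity_weight` to obtain a smaller or larger model without rediscovering any rules.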
