Learning Classifier Systems for Self-Explaining Socio-Technical-Systems


Jul 01, 2022

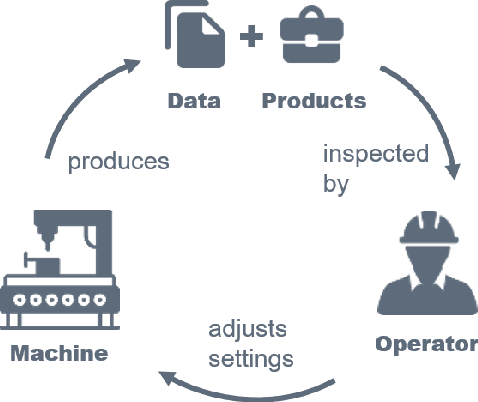

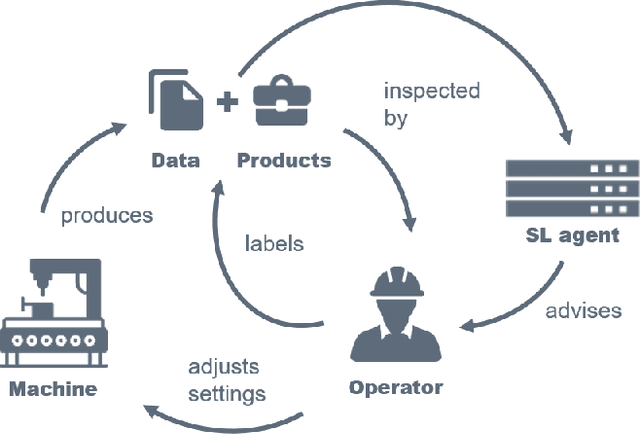

In socio-technical settings, operators are increasingly assisted by decision support systems. Employing such systems is expected to further improve important properties of socio-technical systems, such as self-adaptation and self-optimization. To be accepted by operators and to engage with them efficiently, decision support systems need to be able to explain the reasoning behind specific decisions. In this paper, we propose using Learning Classifier Systems (LCSs), a family of rule-based machine learning methods, to facilitate transparent decision making, and we highlight some techniques to improve that transparency. We then present a template of seven questions for assessing application-specific explainability needs and demonstrate their use in an interview-based case study for a manufacturing scenario. We find that the answers received yielded useful insights for a well-designed LCS model, as well as requirements for having stakeholders actively engage with an intelligent agent.
