Helen Toner

Enabling External Scrutiny of AI Systems with Privacy-Enhancing Technologies

Feb 05, 2025

Abstract: This article describes how technical infrastructure developed by the nonprofit OpenMined enables external scrutiny of AI systems without compromising sensitive information. Independent external scrutiny of AI systems provides crucial transparency into AI development, so it should be an integral component of any approach to AI governance. In practice, external researchers have struggled to gain access to AI systems because of AI companies' legitimate concerns about security, privacy, and intellectual property. But now, privacy-enhancing technologies (PETs) have reached a new level of maturity: end-to-end technical infrastructure developed by OpenMined combines several PETs into various setups that enable privacy-preserving audits of AI systems. We showcase two case studies where this infrastructure has been deployed in real-world governance scenarios: "Understanding Social Media Recommendation Algorithms with the Christchurch Call" and "Evaluating Frontier Models with the UK AI Safety Institute." We describe types of scrutiny of AI systems that could be facilitated by current setups and OpenMined's proposed future setups. We conclude that these innovative approaches deserve further exploration and support from the AI governance community. Interested policymakers can focus on empowering researchers on a legal level.

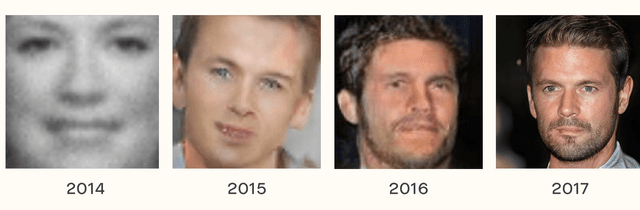

The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation

Feb 20, 2018

Abstract: This report surveys the landscape of potential security threats from malicious uses of AI, and proposes ways to better forecast, prevent, and mitigate these threats. After analyzing the ways in which AI may influence the threat landscape in the digital, physical, and political domains, we make four high-level recommendations for AI researchers and other stakeholders. We also suggest several promising areas for further research that could expand the portfolio of defenses, or make attacks less effective or harder to execute. Finally, we discuss, but do not conclusively resolve, the long-term equilibrium of attackers and defenders.
