Heesun Park

Tabular-TX: Theme-Explanation Structure-based Table Summarization via In-Context Learning

Jan 17, 2025

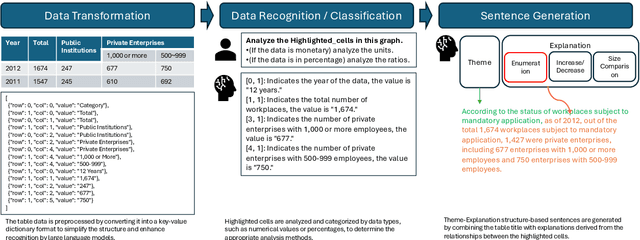

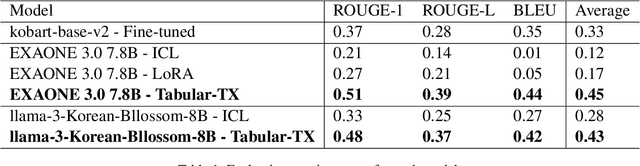

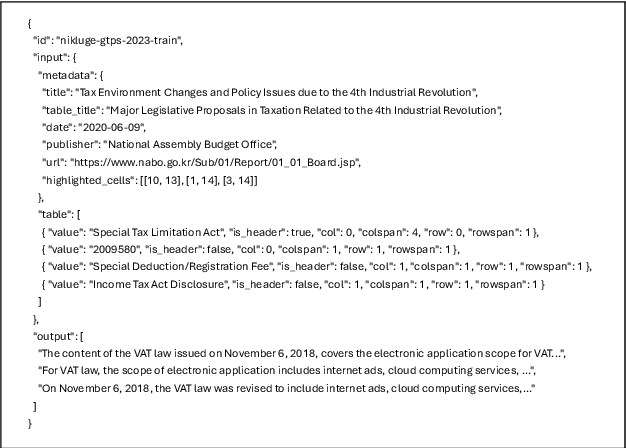

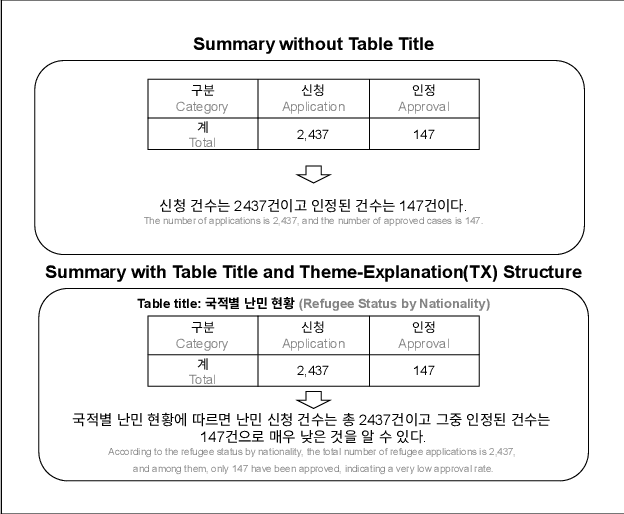

Abstract: This paper proposes a Theme-Explanation Structure-based Table Summarization (Tabular-TX) pipeline designed to efficiently process table data. Tabular-TX preprocesses table data by focusing on highlighted cells and then generates summary sentences structured with a Theme Part in the form of adverbial phrases followed by an Explanation Part in the form of clauses. In this process, customized analysis is performed by considering the structural characteristics and comparability of the table. Additionally, by utilizing In-Context Learning, Tabular-TX optimizes the analytical capabilities of large language models (LLMs) without the need for fine-tuning, effectively handling the structural complexity of table data. Results from applying the proposed Tabular-TX to generate table-based summaries demonstrated superior performance compared to existing fine-tuning-based methods, despite limitations in dataset size. Experimental results confirmed that Tabular-TX can process complex table data more effectively and established it as a new alternative for table-based question answering and summarization tasks, particularly in resource-constrained environments.

Intermediate and Future Frame Prediction of Geostationary Satellite Imagery With Warp and Refine Network

Mar 08, 2023

Abstract: Geostationary satellite imagery has applications in climate and weather forecasting, planning natural energy resources, and predicting extreme weather events. For precise and accurate prediction, higher spatial and temporal resolution of geostationary satellite imagery is important. Although the resolution of recent geostationary satellites has improved, long-term climate analysis must draw on multiple satellites from the past to the present and is limited by their differing resolutions. To solve this problem, we propose the warp and refine network (WR-Net). WR-Net is divided into an optical flow warp component and a warp image refinement component. We used the TV-L1 algorithm instead of deep-learning-based approaches to extract the optical flow warp component, because deep-learning-based models are trained on human-centric RGB imagery and do not work on geostationary satellite imagery, which is gray-scale and single-channel. The refinement network refines the warped image through a multi-temporal fusion layer. We evaluated WR-Net by interpolating large-scale GK2A geostationary meteorological satellite imagery from 4 min intervals to 2 min intervals. Furthermore, we applied WR-Net to the future frame prediction task and showed that the explicit use of optical flow can help future frame prediction.
