Hazounne Lee

Wavespace: A Highly Explorable Wavetable Generator

Jul 29, 2024

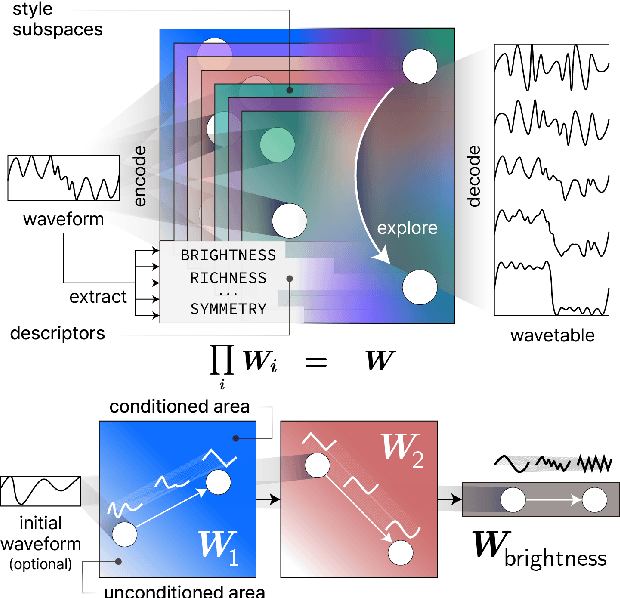

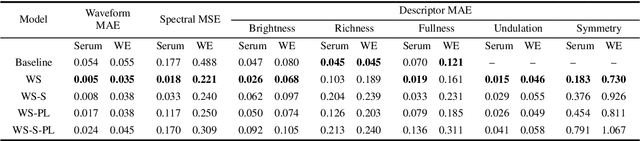

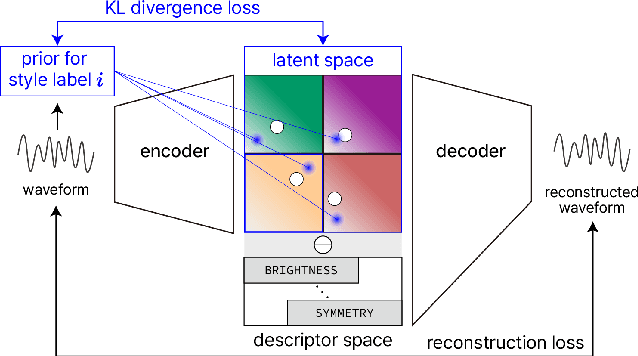

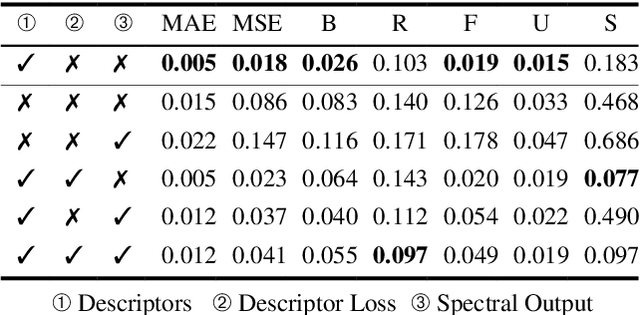

Abstract: Wavetable synthesis generates quasi-periodic waveforms of musical tones by interpolating a list of waveforms called a wavetable. As generative models that utilize latent representations offer various methods of waveform generation for musical applications, studies in wavetable generation with invertible architectures have also arisen recently. While these are promising, it remains challenging to generate wavetables with detailed control over disentangled factors within the latent representation. In response, we present Wavespace, a novel framework for wavetable generation that gives users enhanced parameter controls. Our model allows users to apply pre-defined conditions to the output wavetables. We employ a variational autoencoder and completely factorize its latent space into different waveform styles. We also condition the generator on auxiliary timbral and morphological descriptors. This way, users can create unique wavetables by independently manipulating each latent subspace and the descriptor parameters. Our framework is efficient enough for practical use; we prototyped an oscillator plug-in as a proof of concept for real-time integration of Wavespace within digital audio workstations (DAWs).
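To make the opening definition concrete, here is a minimal sketch of classic wavetable playback: a table of single-cycle frames is read with linear interpolation between adjacent samples and between adjacent frames. It illustrates only the generic synthesis technique, not the Wavespace model. The function names (`make_wavetable`, `read_wavetable`, `render`) and the toy harmonic content of the frames are assumptions made for illustration.

```python
import math


def make_wavetable(num_frames=4, frame_size=64):
    """Build a toy wavetable (hypothetical content): frame k holds
    harmonics 1..k+1 with 1/h amplitudes, so frames get brighter."""
    table = []
    for k in range(num_frames):
        frame = []
        for n in range(frame_size):
            phase = 2.0 * math.pi * n / frame_size
            frame.append(sum(math.sin(h * phase) / h for h in range(1, k + 2)))
        table.append(frame)
    return table


def lerp(a, b, t):
    """Linear interpolation between a and b with weight t in [0, 1]."""
    return a + (b - a) * t


def read_wavetable(table, phase, position):
    """Sample the table at a cycle phase in [0, 1) and a table
    position in [0, 1] (0 = first frame, 1 = last frame)."""
    num_frames = len(table)
    frame_size = len(table[0])

    # Locate the two neighbouring frames and the blend weight between them.
    pos = min(max(position, 0.0), 1.0) * (num_frames - 1)
    f0 = int(pos)
    f1 = min(f0 + 1, num_frames - 1)
    frame_mix = pos - f0

    # Locate the two neighbouring samples inside a frame (wrapping around).
    idx = (phase % 1.0) * frame_size
    i0 = int(idx) % frame_size
    i1 = (i0 + 1) % frame_size
    sample_mix = idx - int(idx)

    s0 = lerp(table[f0][i0], table[f0][i1], sample_mix)
    s1 = lerp(table[f1][i0], table[f1][i1], sample_mix)
    return lerp(s0, s1, frame_mix)


def render(table, freq_hz, sample_rate, num_samples, position):
    """Render a tone by advancing the phase by freq_hz / sample_rate per sample."""
    out = []
    phase = 0.0
    for _ in range(num_samples):
        out.append(read_wavetable(table, phase, position))
        phase = (phase + freq_hz / sample_rate) % 1.0
    return out


if __name__ == "__main__":
    table = make_wavetable()
    tone = render(table, freq_hz=220.0, sample_rate=44100, num_samples=512, position=0.5)
    print(len(tone), "samples rendered")
```

Sweeping `position` over time morphs the timbre between frames, which is the kind of user-facing control a generated wavetable would be played through in an oscillator.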
