Hardik Tankaria

A Stochastic Variance Reduced Gradient using Barzilai-Borwein Techniques as Second Order Information

Aug 23, 2022

Abstract: In this paper, we improve the stochastic variance reduced gradient (SVRG) method by incorporating curvature information of the objective function. We propose to reduce the variance of stochastic gradients by incorporating the computationally efficient Barzilai-Borwein (BB) method into SVRG. We also incorporate a BB step size as a variant. We prove a linear convergence theorem that holds not only for the proposed method but also for other existing variants of SVRG with second-order information. We conduct numerical experiments on benchmark datasets and show that the proposed method with a constant step size performs better than existing variance-reduced methods on some test problems.
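
To make the ingredients named in the abstract concrete, here is a minimal sketch of plain SVRG where the step size is recomputed at each outer iteration with a Barzilai-Borwein formula. It follows the commonly used SVRG-BB recipe and does not reproduce the paper's use of BB as curvature information inside the variance-reduced gradient. The least-squares objective, the function name `svrg_bb`, and the parameters `epochs`, `m`, and `eta0` are assumptions chosen for illustration.

```python
import numpy as np

def svrg_bb(X, y, epochs=20, m=None, eta0=0.01, seed=0):
    """SVRG on the least-squares loss with a BB step size (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    m = m or 2 * n                      # inner-loop length (assumed choice)
    w_tilde = np.zeros(d)               # snapshot point
    prev_w, prev_g = None, None
    eta = eta0                          # used until a BB step can be formed
    for _ in range(epochs):
        g = X.T @ (X @ w_tilde - y) / n         # full gradient at the snapshot
        if prev_w is not None:
            s, v = w_tilde - prev_w, g - prev_g
            if s @ v > 0:                       # keep the old step if curvature is not positive
                eta = (s @ s) / (m * (s @ v))   # BB step size, scaled by 1/m
        prev_w, prev_g = w_tilde.copy(), g
        w = w_tilde.copy()
        for _ in range(m):
            i = rng.integers(n)
            xi = X[i]
            gi = xi * (xi @ w - y[i])               # stochastic gradient at w
            gi_snap = xi * (xi @ w_tilde - y[i])    # same sample at the snapshot
            w -= eta * (gi - gi_snap + g)           # variance-reduced update
        w_tilde = w
    return w_tilde

# Synthetic, noiseless regression problem used only to exercise the sketch.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 5))
w_star = rng.standard_normal(5)
y = X @ w_star
w_hat = svrg_bb(X, y)
```

The guard on `s @ v` is a design choice for robustness: when the secant pair gives no positive curvature, the previous step size is reused rather than producing a negative or undefined step.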

Nys-Curve: Nyström-Approximated Curvature for Stochastic Optimization

Oct 16, 2021

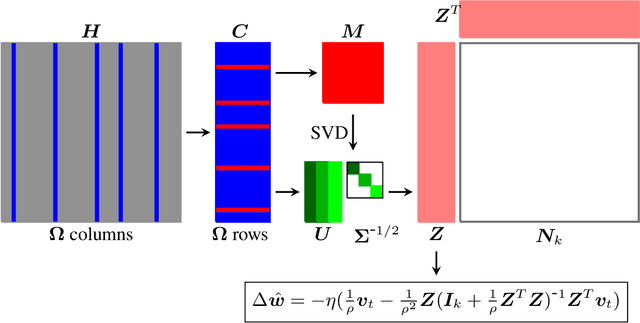

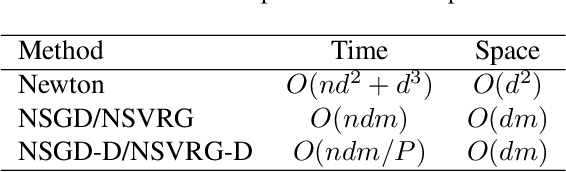

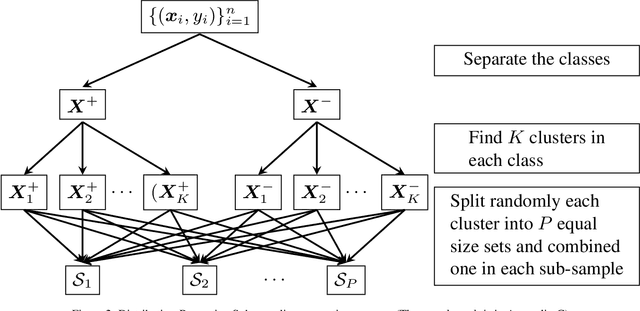

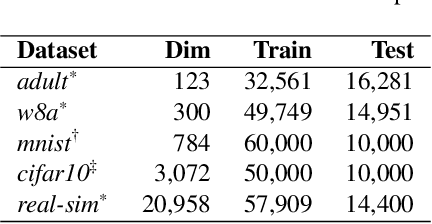

Abstract: Quasi-Newton methods generally provide curvature information by approximating the Hessian using the secant equation. However, the secant equation approximates the Newton step poorly because it relies only on first-order derivatives. In this study, we propose an approximate Newton step-based stochastic optimization algorithm for large-scale empirical risk minimization of convex functions with linear convergence rates. Specifically, we compute a partial column Hessian of size ($d\times k$) with $k\ll d$ randomly selected variables, then use the Nyström method to better approximate the full Hessian matrix. To further reduce the computational complexity per iteration, we directly compute the update step ($\Delta\boldsymbol{w}$) without computing and storing the full Hessian or its inverse. Furthermore, to address large-scale scenarios in which even computing a partial Hessian may require significant time, we use distribution-preserving (DP) sub-sampling to compute a partial Hessian. The DP sub-sampling generates $p$ sub-samples with similar first- and second-order distribution statistics and selects a single sub-sample at each epoch in a round-robin manner to compute the partial Hessian. We integrate our approximated Hessian with stochastic gradient descent and stochastic variance-reduced gradients to solve the logistic regression problem. The numerical experiments show that the proposed approach obtains a better approximation of Newton's method, with performance competitive with state-of-the-art first-order and stochastic quasi-Newton methods.
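
One way to compute an update step from a partial column Hessian without forming a $d\times d$ matrix is sketched below. It uses the standard Nyström form $C W^{-1} C^\top$, where $C$ holds the $k$ sampled columns and $W$ is the $k\times k$ block at the sampled indices, and applies the Woodbury identity to solve the regularized system. The regularizer `rho`, the function name `nystrom_newton_step`, and the synthetic test matrix are assumptions for illustration; the paper's exact update rule may differ.

```python
import numpy as np

def nystrom_newton_step(C, idx, grad, rho=1e-2):
    """Solve (C W^{-1} C^T + rho * I) dw = grad using only k x k solves.

    C    : (d, k) sampled columns of a symmetric positive definite Hessian
    idx  : (k,) indices of the sampled columns
    grad : (d,) gradient vector
    rho  : positive regularizer (assumed; keeps the system invertible)
    """
    W = C[idx, :]                          # k x k block of the Hessian
    inner = rho * W + C.T @ C              # k x k matrix from the Woodbury identity
    correction = C @ np.linalg.solve(inner, C.T @ grad)
    return (grad - correction) / rho

# Synthetic demo: the full matrix H is built here only to extract columns
# and check the result; a real use would compute the k columns directly.
rng = np.random.default_rng(0)
d, k, rho = 50, 10, 1e-2
A = rng.standard_normal((d, d))
H = A @ A.T / d + np.eye(d)                # symmetric positive definite stand-in
idx = rng.choice(d, size=k, replace=False)
C = H[:, idx]
grad = rng.standard_normal(d)
step = nystrom_newton_step(C, idx, grad, rho)
```

The cost per step is dominated by forming `C.T @ C` and one $k\times k$ solve, so it scales as $O(dk^2)$ rather than the $O(d^3)$ of a dense Newton solve.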
