Haoshen Fan

A Hierarchical Attention Based Seq2seq Model for Chinese Lyrics Generation

Jun 15, 2019

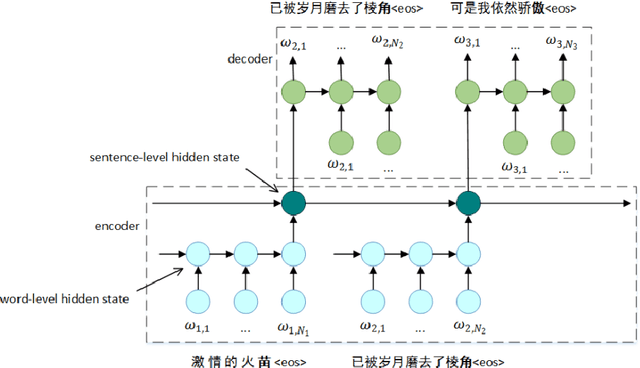

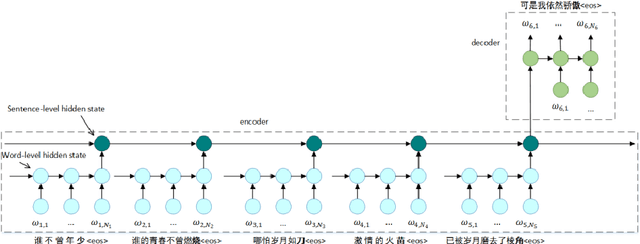

Abstract: In this paper, we comprehensively study context-aware generation of Chinese song lyrics. Conventional text generation models produce a sequence or sentence word by word and fail to consider the contextual relationship between sentences. Taking into account the characteristics of lyrics, a hierarchical attention based Seq2Seq (Sequence-to-Sequence) model is proposed for Chinese lyrics generation. By encoding both word-level and sentence-level contextual information, the model improves the topic relevance and consistency of the generated lyrics. A large Chinese lyrics corpus is also leveraged for model training. Finally, results of automatic and human evaluations demonstrate that our model is able to compose complete Chinese lyrics under a single unified topic constraint.
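To make the idea of word-level and sentence-level context concrete, here is a minimal sketch of two-level attention in plain Python. It is not the model from the paper: the dot-product scoring, the toy 2-dimensional vectors, and the names `attend` and `hierarchical_context` are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(query, vectors):
    """Dot-product attention: weighted average of `vectors` given `query`."""
    weights = softmax([dot(query, v) for v in vectors])
    dim = len(vectors[0])
    context = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
    return context, weights


def hierarchical_context(query, sentences):
    """Hypothetical two-level attention over previously generated lines."""
    # Word level: summarize each previous line into a single vector.
    sentence_vectors = [attend(query, words)[0] for words in sentences]
    # Sentence level: weigh the line summaries against each other.
    return attend(query, sentence_vectors)


# Toy input: two previous lines, each a list of 2-d word vectors (assumed values).
sentences = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [2.0, 0.0], [1.0, 1.0]],
]
query = [1.0, 0.0]  # stands in for the decoder state
context, sentence_weights = hierarchical_context(query, sentences)
```

In this sketch the decoder state queries the words of each earlier line first and the resulting line summaries second, so the final context vector reflects both levels.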

Automatic Acrostic Couplet Generation with Three-Stage Neural Network Pipelines

Jun 15, 2019

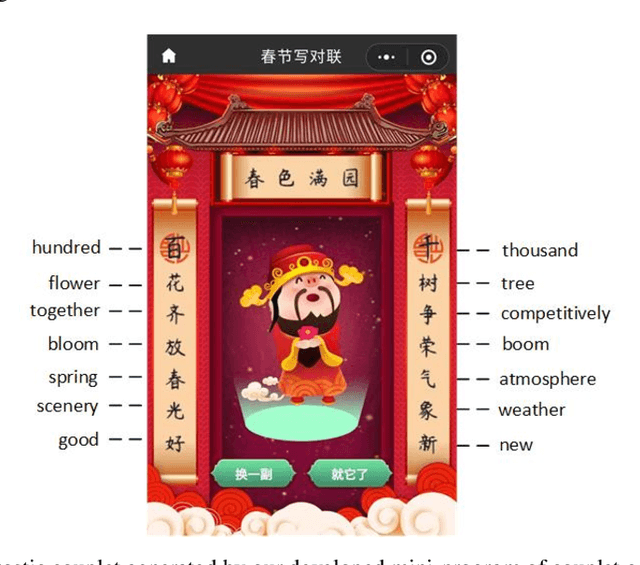

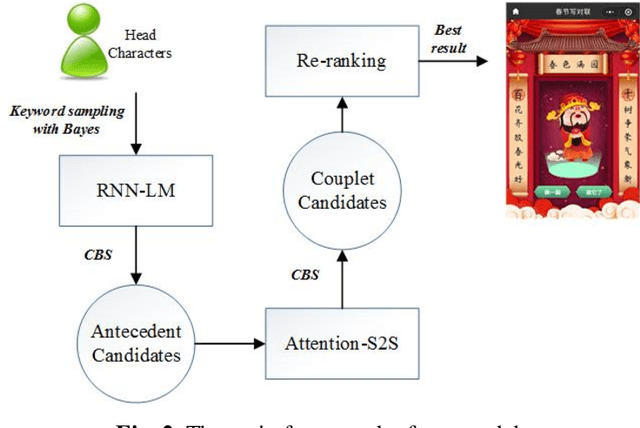

Abstract: As a quintessential part of traditional Chinese culture, a couplet comprises two syntactically symmetric clauses of equal length, namely an antecedent clause and a subsequent clause. Moreover, characters and phrases at the same position in the two clauses are paired with each other under certain constraints of semantic and/or syntactic relatedness. Automatic couplet generation is recognized as a challenging problem in the field of artificial intelligence. In this paper, we comprehensively study the automatic generation of acrostic couplets whose first characters are defined by users. Couplet generation is divided into three stages: an antecedent clause generation pipeline, a subsequent clause generation pipeline, and a clause re-ranker. To realize semantic and/or syntactic relatedness between the two clauses, an attention-based Sequence-to-Sequence (S2S) neural network is employed. Moreover, to provide diverse couplet candidates for re-ranking, a cluster-based beam search approach is incorporated into the S2S network. Both BLEU metrics and human judgments demonstrate the effectiveness of the proposed method. Finally, a mini-program based on this generation system was developed and deployed on WeChat for real users.
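The abstract does not say how clusters are formed in the cluster-based beam search, so the following is only a rough sketch of the general idea: group beam candidates into clusters and keep the best few per cluster, so that near-duplicates do not crowd out other options. The function `cluster_prune`, the `cluster_of` key, and the scores are hypothetical.

```python
from collections import defaultdict


def cluster_prune(candidates, cluster_of, beam_size, per_cluster):
    """Keep at most `per_cluster` candidates from each cluster, then the top `beam_size`.

    candidates: list of (tokens, log_prob) pairs.
    cluster_of: function mapping tokens to a cluster key (an assumed stand-in;
                the paper's actual clustering criterion is not given in the abstract).
    """
    clusters = defaultdict(list)
    for cand in candidates:
        clusters[cluster_of(cand[0])].append(cand)

    kept = []
    for members in clusters.values():
        members.sort(key=lambda c: c[1], reverse=True)
        kept.extend(members[:per_cluster])

    kept.sort(key=lambda c: c[1], reverse=True)
    return kept[:beam_size]


# Toy candidates that all start with the user-defined first character.
candidates = [
    (("春", "风", "暖"), -0.1),
    (("春", "风", "轻"), -0.2),
    (("春", "雨", "细"), -0.3),
]
# Assumed cluster key: the second character of the clause.
beam = cluster_prune(candidates, cluster_of=lambda toks: toks[1],
                     beam_size=2, per_cluster=1)
```

Plain top-2 pruning would keep both clauses sharing the same second character; with one slot per cluster the lower-scoring but different clause survives, giving a re-ranker more varied candidates.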
