Haojiang Ying

Face processing emerges from object-trained convolutional neural networks

May 29, 2024

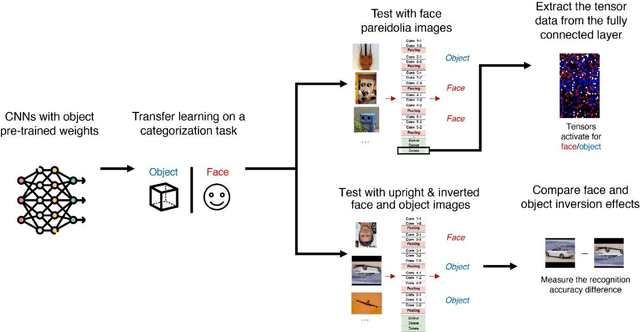

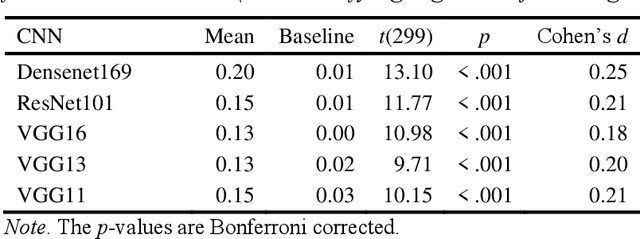

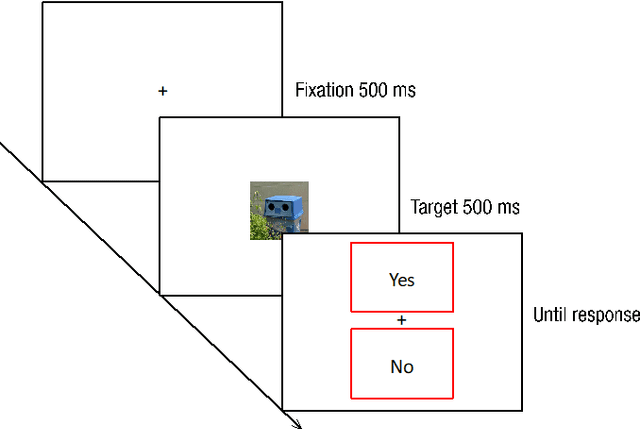

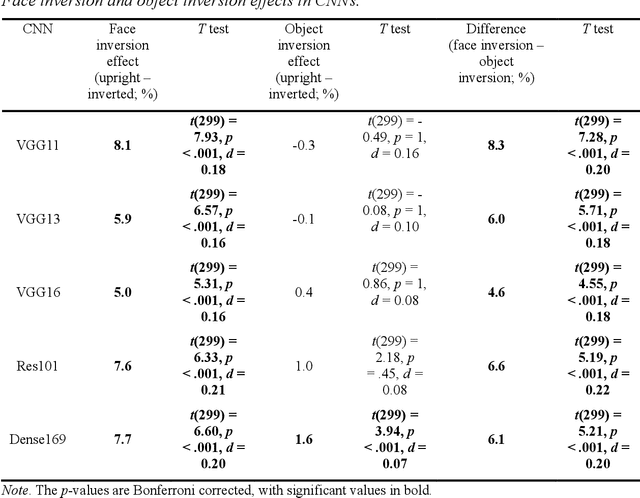

Abstract: Whether face processing depends on unique, domain-specific neurocognitive mechanisms or on domain-general object recognition mechanisms has long been debated. Directly testing these competing hypotheses in humans has proven challenging because humans have extensive exposure to both faces and objects. Here, we systematically test these hypotheses by capitalizing on recent progress in convolutional neural networks (CNNs) that can be trained without face exposure (i.e., pre-trained weights). Domain-general accounts posit that face processing can emerge from a neural network without specialized pre-training on faces. Consequently, we trained CNNs solely on objects and tested their ability to recognize and represent faces as well as objects that look like faces (face pareidolia stimuli).... Due to character limits, see the attached PDF for more details.

Using Human-like Mechanism to Weaken Effect of Pre-training Weight Bias in Face-Recognition Convolutional Neural Network

Oct 20, 2023

Abstract: Convolutional neural networks (CNNs), an important class of models in artificial intelligence, have been widely used and studied across disciplines. Their computational mechanisms are still not fully understood because of their complex nature. In this study, we focused on four extensively studied CNNs (AlexNet, VGG11, VGG13, and VGG16), which neuroscientists have analyzed as human-like models with ample evidence. We trained these CNNs on an emotion valence classification task using transfer learning. Comparing their performance with human data showed that these CNNs partly behave as humans do. We then updated the object-based AlexNet with a self-attention mechanism based on neuroscience and behavioral data. The updated FE-AlexNet outperformed all the other tested CNNs and closely resembled human perception. The results further reveal the computational mechanisms of these CNNs. Moreover, this study offers a new paradigm for better understanding and improving CNN performance via human data.
