
Hans Parshall

Neural collapse with unconstrained features

Nov 23, 2020

Figures and Tables:

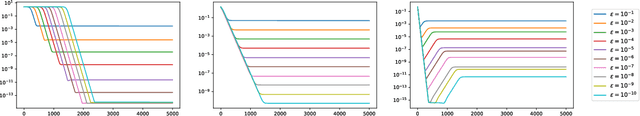

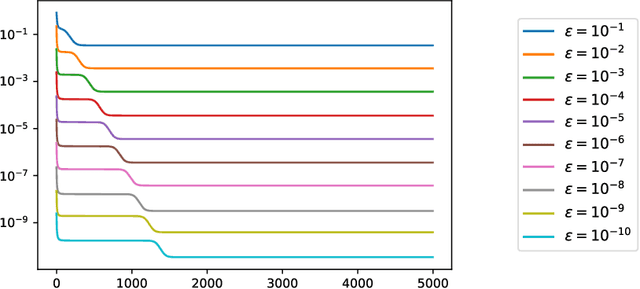

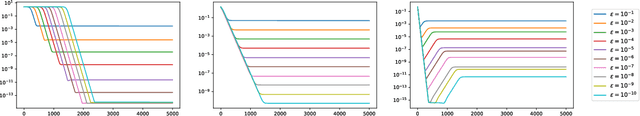

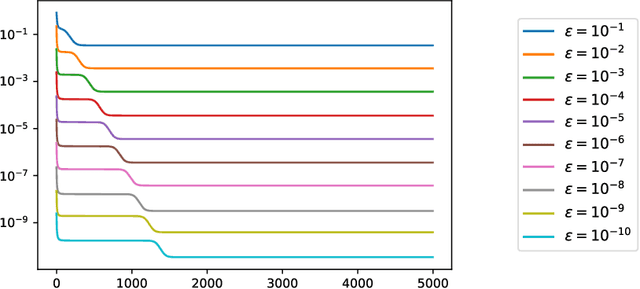

Abstract: Neural collapse is an emergent phenomenon in deep learning that was recently discovered by Papyan, Han and Donoho. We propose a simple "unconstrained features model" in which neural collapse also emerges empirically. By studying this model, we provide some explanation for the emergence of neural collapse in terms of the landscape of empirical risk.


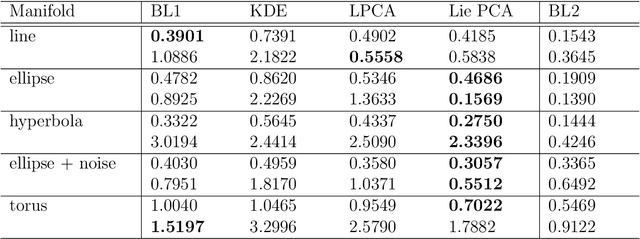

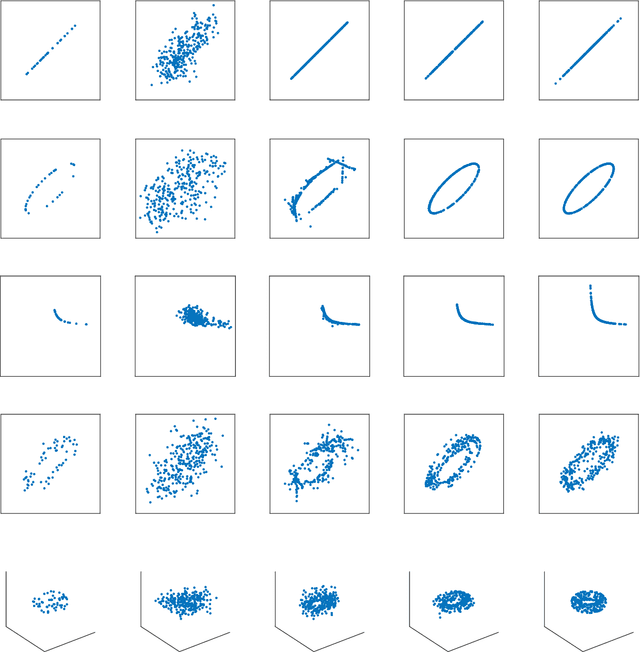

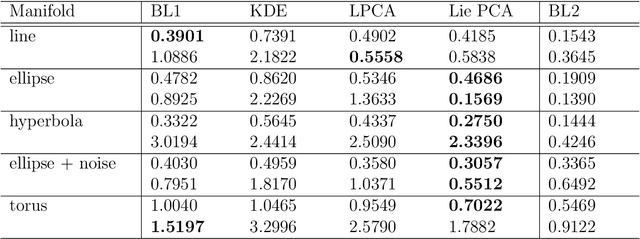

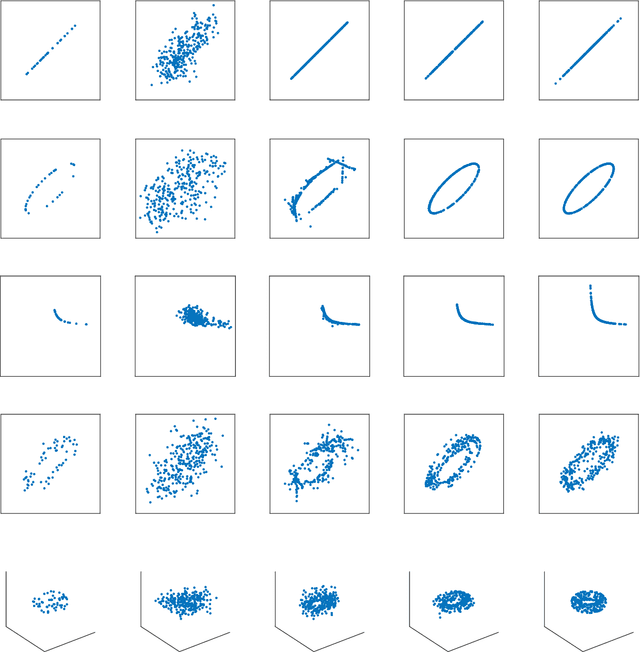

Lie PCA: Density estimation for symmetric manifolds

Sep 13, 2020

Abstract: We introduce an extension to local principal component analysis for learning symmetric manifolds. In particular, we use a spectral method to approximate the Lie algebra corresponding to the symmetry group of the underlying manifold. We derive the sample complexity of our method for a variety of manifolds before applying it to various data sets for improved density estimation.
