Hannes Ovrén

Drone Detection Using a Low-Power Neuromorphic Virtual Tripwire

Sep 16, 2025

Abstract: Small drones are an increasing threat to both military personnel and civilian infrastructure, making early and automated detection crucial. In this work, we develop a system that uses spiking neural networks and neuromorphic cameras (event cameras) to detect drones. The detection model is deployed on a neuromorphic chip, making this a fully neuromorphic system. Multiple detection units can be deployed to create a virtual tripwire that detects when and where drones enter a restricted zone. We show that our neuromorphic solution is several orders of magnitude more energy efficient than a reference solution deployed on an edge GPU, allowing the system to run for over a year on battery power. We investigate how synthetically generated data can be used for training, and show that our model most likely relies on the shape of the drone rather than the temporal characteristics of its propellers. The small size and low power consumption allow easy deployment in contested areas or locations that lack power infrastructure.

Trajectory Representation and Landmark Projection for Continuous-Time Structure from Motion

May 07, 2018

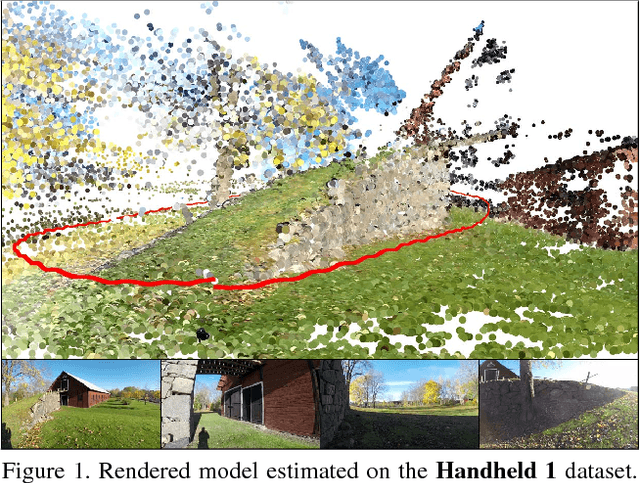

Abstract: This paper revisits the problem of continuous-time structure from motion, and introduces a number of extensions that improve convergence and efficiency. The formulation with a $\mathcal{C}^2$-continuous spline for the trajectory naturally incorporates inertial measurements as derivatives of the sought trajectory. We analyse the behaviour of split interpolation on $\mathbb{SO}(3)$ and on $\mathbb{R}^3$, and a joint interpolation on $\mathbb{SE}(3)$, and show that the latter implicitly couples the direction of translation and rotation. Such an assumption can make good sense for a camera mounted on a robot arm, but not for hand-held or body-mounted cameras. Our experiments show that split interpolation on $\mathbb{SO}(3)$ and on $\mathbb{R}^3$ is preferable to $\mathbb{SE}(3)$ interpolation in all tested cases. Finally, we investigate the problem of landmark reprojection on rolling shutter cameras, and show that the tested reprojection methods give similar quality, while their computational load varies by a factor of 2.
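
The coupling mentioned in the abstract can be illustrated with the simplest case, geodesic interpolation between two poses $(\mathbf{R}_0, \mathbf{p}_0)$ and $(\mathbf{R}_1, \mathbf{p}_1)$ for $t \in [0, 1]$. This is only a two-pose sketch using standard Lie group notation, not the spline formulation of the paper; the symbols $\boldsymbol{\omega}$, $\mathbf{v}$ and $\mathbf{V}$ are introduced here for illustration.

$$
\begin{aligned}
\text{Split:}\quad & \mathbf{R}(t) = \mathbf{R}_0 \exp\!\big(t \log(\mathbf{R}_0^{\top}\mathbf{R}_1)\big), \qquad \mathbf{p}(t) = (1 - t)\,\mathbf{p}_0 + t\,\mathbf{p}_1 \\
\text{Joint:}\quad & \mathbf{T}(t) = \mathbf{T}_0 \exp\!\big(t \log(\mathbf{T}_0^{-1}\mathbf{T}_1)\big), \qquad \mathbf{T} = \begin{bmatrix} \mathbf{R} & \mathbf{p} \\ \mathbf{0}^{\top} & 1 \end{bmatrix}
\end{aligned}
$$

Writing $\log(\mathbf{T}_0^{-1}\mathbf{T}_1)$ as the twist $(\mathbf{v}, \boldsymbol{\omega})$, the translation part of the joint interpolation becomes

$$
\mathbf{p}(t) = \mathbf{p}_0 + t\,\mathbf{R}_0 \mathbf{V}(t\boldsymbol{\omega})\,\mathbf{v},
$$

where $\mathbf{V}(\cdot)$ is the left Jacobian of $\mathbb{SO}(3)$ that appears in the $\mathbb{SE}(3)$ exponential map. In the split case the position path is a straight line that does not depend on the rotation, whereas in the joint case the path depends on $\boldsymbol{\omega}$ and traces a screw motion whenever the rotation is non-zero.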

Spline Error Weighting for Robust Visual-Inertial Fusion

Apr 13, 2018

Abstract: In this paper we derive and test a probability-based weighting that can balance residuals of different types in spline fitting. In contrast to previous formulations, the proposed spline error weighting scheme also incorporates a prediction of the approximation error of the spline fit. We demonstrate the effectiveness of the prediction in a synthetic experiment, and apply it to visual-inertial fusion on rolling shutter cameras. This results in a method that can estimate 3D structure with metric scale on generic first-person videos. We also propose a quality measure for spline fitting that can be used to automatically select the knot spacing. Experiments verify that the obtained trajectory quality corresponds well with the requested quality. Finally, by linearly scaling the weights, we show that the proposed spline error weighting minimizes the estimation errors on real sequences, in terms of scale and end-point errors.

Efficient Multi-Frequency Phase Unwrapping using Kernel Density Estimation

Aug 18, 2016

Abstract: In this paper we introduce an efficient method to unwrap multi-frequency phase estimates for time-of-flight ranging. The algorithm generates multiple depth hypotheses and uses a spatial kernel density estimate (KDE) to rank them. The confidence produced by the KDE is also an effective means to detect outliers. We also introduce a new closed-form expression for phase noise prediction that better fits real data. The method is applied to depth decoding for the Kinect v2 sensor, and compared to the Microsoft Kinect SDK and to the open-source driver libfreenect2. The intended Kinect v2 use case is scenes with less than 8 m range, and for such cases we observe consistent improvements, while maintaining real-time performance. When extending the depth range to the maximal value of 8.75 m, we get about 52% more valid measurements than libfreenect2. The effect is that the sensor can now be used in large depth scenes, where it was previously not a good choice. Code and supplementary material are available at http://www.cvl.isy.liu.se/research/datasets/kinect2-dataset.
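
The hypothesis-generation step described in the abstract can be sketched for a single pixel as follows. This is a minimal illustration, not the paper's method: it ranks hypotheses by agreement between two frequencies instead of by a spatial KDE, and the function names and the modulation frequencies in the usage note are assumptions chosen for the example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wrapped_phase(distance_m, freq_hz):
    """Phase of the returned signal, wrapped to [0, 2*pi)."""
    return (4.0 * math.pi * freq_hz * distance_m / C) % (2.0 * math.pi)


def depth_hypotheses(phase, freq_hz, max_range_m):
    """All distances up to max_range_m that are consistent with one wrapped phase."""
    ambiguity = C / (2.0 * freq_hz)  # distance covered by one full phase wrap
    base = phase / (2.0 * math.pi) * ambiguity
    hypotheses = []
    k = 0
    while base + k * ambiguity <= max_range_m:
        hypotheses.append(base + k * ambiguity)
        k += 1
    return hypotheses


def unwrap_two_frequencies(phase_a, freq_a, phase_b, freq_b, max_range_m):
    """Pick the pair of hypotheses (one per frequency) that agree best.

    Simplification: the hypotheses are ranked by mutual consistency at a
    single pixel, not by a spatial kernel density estimate.
    """
    best_error = None
    best_depth = None
    for da in depth_hypotheses(phase_a, freq_a, max_range_m):
        for db in depth_hypotheses(phase_b, freq_b, max_range_m):
            error = abs(da - db)
            if best_error is None or error < best_error:
                best_error = error
                best_depth = 0.5 * (da + db)
    return best_depth
```

For example, with hypothetical modulation frequencies of 80 MHz and 16 MHz, `unwrap_two_frequencies(wrapped_phase(6.2, 80e6), 80e6, wrapped_phase(6.2, 16e6), 16e6, 8.75)` recovers a depth close to 6.2 m in the noise-free case. With noisy phases the pairwise agreement becomes ambiguous, which is where a ranking such as the spatial KDE from the abstract would come in.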
