Guido Zarrella

Scaling Remote Sensing Foundation Models: Data Domain Tradeoffs at the Peta-Scale

Dec 29, 2025

Abstract: We explore the scaling behaviors of artificial intelligence to establish practical techniques for training foundation models on high-resolution electro-optical (EO) datasets that exceed the current state-of-the-art scale by orders of magnitude. Modern multimodal machine learning (ML) applications, such as generative artificial intelligence (GenAI) systems for image captioning, search, and reasoning, depend on robust, domain-specialized encoders for non-text modalities. In natural-image domains where internet-scale data is plentiful, well-established scaling laws help optimize the joint scaling of model capacity, training compute, and dataset size. Unfortunately, these relationships are much less well-understood in high-value domains like remote sensing (RS). Using over a quadrillion pixels of commercial satellite EO data and the MITRE Federal AI Sandbox, we train progressively larger vision transformer (ViT) backbones, report success and failure modes observed at petascale, and analyze implications for bridging domain gaps across additional RS modalities. We observe that even at this scale, performance is consistent with a data-limited regime rather than a model parameter-limited one. These practical insights are intended to inform data-collection strategies, compute budgets, and optimization schedules that advance the future development of frontier-scale RS foundation models.

OCCULT: Evaluating Large Language Models for Offensive Cyber Operation Capabilities

Feb 18, 2025

Abstract: The prospect of artificial intelligence (AI) competing in the adversarial landscape of cyber security has long been considered one of the most impactful, challenging, and potentially dangerous applications of AI. Here, we demonstrate a new approach to assessing AI's progress towards enabling and scaling real-world offensive cyber operations (OCO) tactics in use by modern threat actors. We detail OCCULT, a lightweight operational evaluation framework that allows cyber security experts to contribute to rigorous and repeatable measurement of the plausible cyber security risks associated with any given large language model (LLM) or AI employed for OCO. We also prototype and evaluate three very different OCO benchmarks for LLMs that demonstrate our approach and serve as examples for building benchmarks under the OCCULT framework. Finally, we provide preliminary evaluation results to demonstrate how this framework allows us to move beyond traditional all-or-nothing tests, such as those crafted from educational exercises like capture-the-flag environments, to contextualize our indicators and warnings in true cyber threat scenarios that present risks to modern infrastructure. We find that there has been significant recent advancement in the risks of AI being used to scale realistic cyber threats. For the first time, we find a model (DeepSeek-R1) is capable of correctly answering over 90% of challenging offensive cyber knowledge tests in our Threat Actor Competency Test for LLMs (TACTL) multiple-choice benchmarks. We also show how Meta's Llama and Mistral's Mixtral model families show marked performance improvements over earlier models against our benchmarks where LLMs act as offensive agents in MITRE's high-fidelity offensive and defensive cyber operations simulation environment, CyberLayer.

Mai Ho'omāuna i ka 'Ai: Language Models Improve Automatic Speech Recognition in Hawaiian

Apr 03, 2024

Abstract: In this paper we address the challenge of improving Automatic Speech Recognition (ASR) for a low-resource language, Hawaiian, by incorporating large amounts of independent text data into an ASR foundation model, Whisper. To do this, we train an external language model (LM) on ~1.5M words of Hawaiian text. We then use the LM to rescore Whisper and compute word error rates (WERs) on a manually curated test set of labeled Hawaiian data. As a baseline, we use Whisper without an external LM. Experimental results reveal a small but significant improvement in WER when ASR outputs are rescored with a Hawaiian LM. The results support leveraging all available data in the development of ASR systems for underrepresented languages.
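The abstract above does not spell out how rescoring or scoring is done, so the following is only a minimal sketch under stated assumptions: the ASR system emits an n-best list of (hypothesis, log-probability) pairs, the external LM is a callable returning a log-probability, and the two scores are combined with a fixed weight. The names `rescore`, `lm_logprob`, and `lm_weight` are hypothetical, and the toy LM is a stand-in rather than a trained model.

```python
from typing import Callable, List, Tuple


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the first i-1 reference
    # words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / max(len(ref), 1)


def rescore(
    nbest: List[Tuple[str, float]],
    lm_logprob: Callable[[str], float],
    lm_weight: float = 0.5,  # assumed interpolation weight
) -> str:
    """Pick the hypothesis with the best combined ASR + weighted LM score."""
    best = max(nbest, key=lambda item: item[1] + lm_weight * lm_logprob(item[0]))
    return best[0]


# Toy usage: the stand-in LM prefers hypotheses containing "w3".
toy_nbest = [("w1 w2", -1.0), ("w1 w3", -1.2)]
toy_lm = lambda text: 0.0 if "w3" in text else -2.0
chosen = rescore(toy_nbest, toy_lm)
```

In this toy case the LM overturns the ASR system's first choice, which is the effect a WER comparison against a no-LM baseline would measure.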

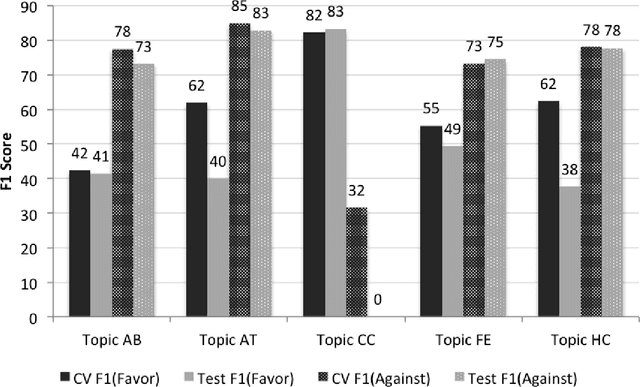

MITRE at SemEval-2016 Task 6: Transfer Learning for Stance Detection

Jun 13, 2016

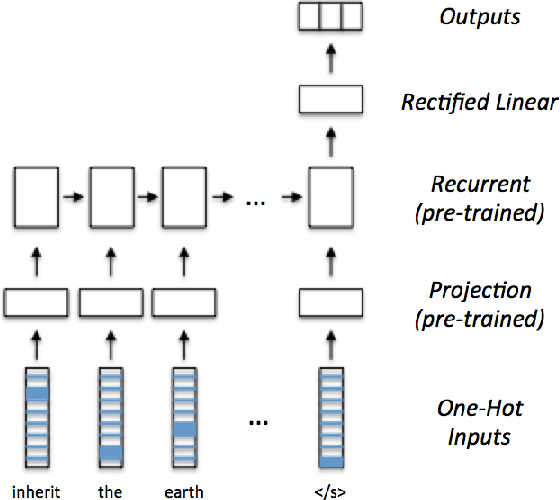

Abstract: We describe MITRE's submission to the SemEval-2016 Task 6, Detecting Stance in Tweets. This effort achieved the top score in Task A on supervised stance detection, producing an average F1 score of 67.8 when assessing whether a tweet author was in favor of or against a topic. We employed a recurrent neural network initialized with features learned via distant supervision on two large unlabeled datasets. We trained embeddings of words and phrases with the word2vec skip-gram method, then used those features to learn sentence representations via a hashtag prediction auxiliary task. These sentence vectors were then fine-tuned for stance detection on several hundred labeled examples. The result was a high-performing system that used transfer learning to maximize the value of the available training data.

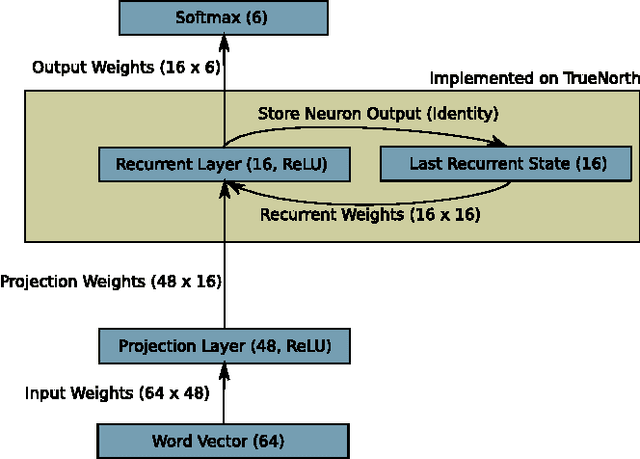

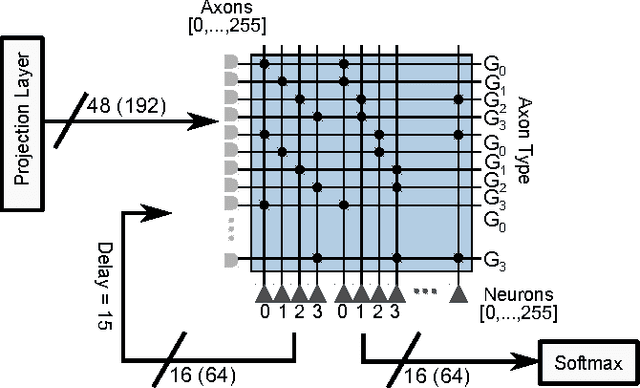

Conversion of Artificial Recurrent Neural Networks to Spiking Neural Networks for Low-power Neuromorphic Hardware

Jan 16, 2016

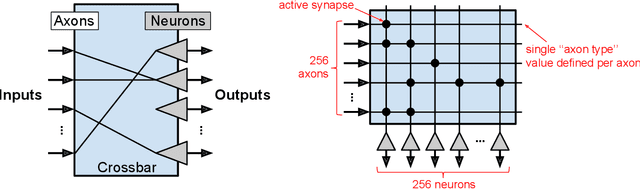

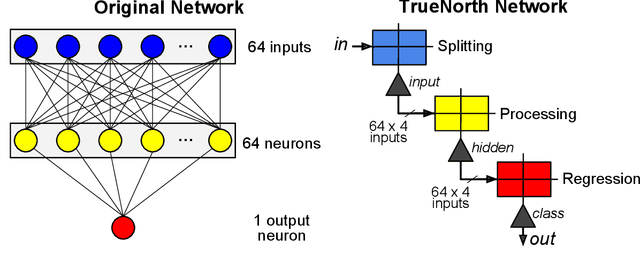

Abstract: In recent years, the field of neuromorphic low-power systems, which consume orders of magnitude less power, has gained significant momentum. However, their wider use is still hindered by the lack of algorithms that can harness the strengths of such architectures. While neuromorphic adaptations of representation learning algorithms are now emerging, efficient processing of temporal sequences or variable-length inputs remains difficult. Recurrent neural networks (RNNs) are widely used in machine learning to solve a variety of sequence learning tasks. In this work we present a train-and-constrain methodology that enables the mapping of machine-learned (Elman) RNNs on a substrate of spiking neurons, while being compatible with the capabilities of current and near-future neuromorphic systems. This "train-and-constrain" method consists of first training RNNs using backpropagation through time, then discretizing the weights and finally converting them to spiking RNNs by matching the responses of artificial neurons with those of the spiking neurons. We demonstrate our approach by mapping a natural language processing task (question classification), where we demonstrate the entire mapping process of the recurrent layer of the network on IBM's Neurosynaptic System "TrueNorth", a spike-based digital neuromorphic hardware architecture. TrueNorth imposes specific constraints on connectivity, neural and synaptic parameters. To satisfy these constraints, it was necessary to discretize the synaptic weights and neural activities to 16 levels, and to limit fan-in to 64 inputs. We find that short synaptic delays are sufficient to implement the dynamical (temporal) aspect of the RNN in the question classification task. The hardware-constrained model achieved 74% accuracy in question classification while using less than 0.025% of the cores on one TrueNorth chip, resulting in an estimated power consumption of ~17 µW.
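The abstract states that weights and activities were discretized to 16 levels but does not say which quantization scheme was used. As an illustration only, the sketch below assumes uniform quantization over the observed weight range; the function name `discretize` and the sample weights are hypothetical and not taken from the paper.

```python
from typing import List, Sequence


def discretize(weights: Sequence[float], levels: int = 16) -> List[float]:
    """Snap each weight to the nearest of `levels` evenly spaced values.

    Assumption: the grid spans [min(weights), max(weights)] uniformly.
    """
    lo, hi = min(weights), max(weights)
    if hi == lo:
        # All weights are identical, so there is nothing to quantize.
        return list(weights)
    step = (hi - lo) / (levels - 1)
    return [lo + round((w - lo) / step) * step for w in weights]


# Toy usage with made-up weights.
sample = [i / 99 - 0.5 for i in range(100)]
quantized = discretize(sample)
distinct_levels = len(set(quantized))
```

With 16 levels each weight fits in 4 bits, which is why a scheme along these lines suits hardware with low-precision synapses.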

TrueHappiness: Neuromorphic Emotion Recognition on TrueNorth

Jan 16, 2016

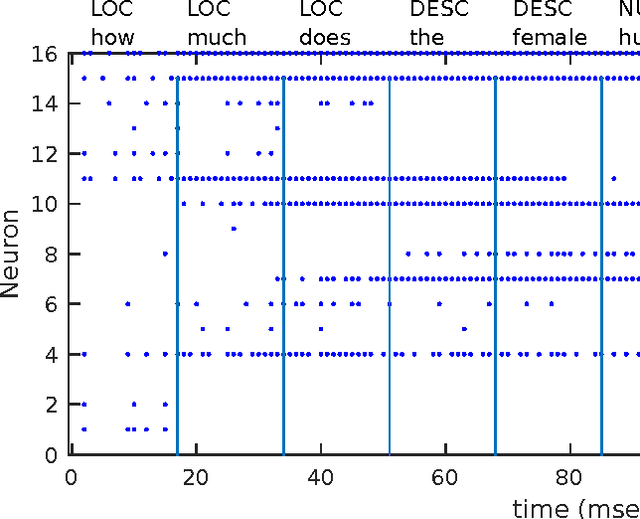

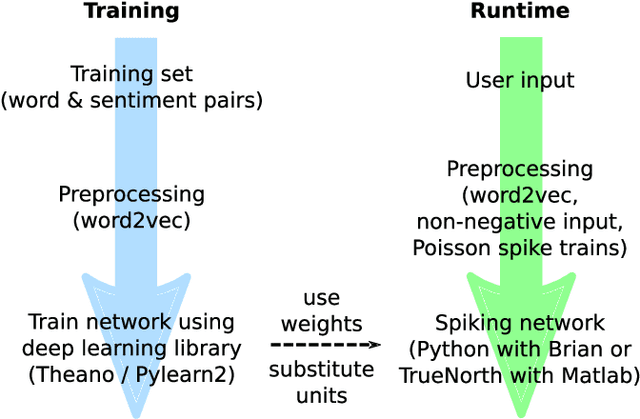

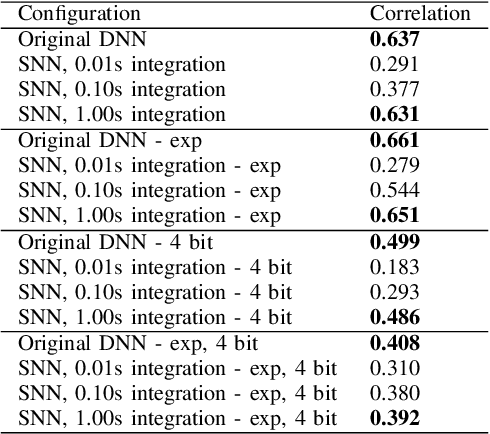

Abstract: We present an approach to constructing a neuromorphic device that responds to language input by producing neuron spikes in proportion to the strength of the appropriate positive or negative emotional response. Specifically, we perform a fine-grained sentiment analysis task with implementations on two different systems: one using conventional spiking neural network (SNN) simulators and the other using IBM's Neurosynaptic System TrueNorth. Input words are projected into a high-dimensional semantic space and processed through a fully-connected neural network (FCNN) containing rectified linear units (ReLUs) trained via backpropagation. After training, this FCNN is converted to an SNN by substituting the ReLUs with integrate-and-fire neurons. We show that there is practically no performance loss due to conversion to a spiking network on a sentiment analysis test set, i.e., correlations between predictions and human annotations differ by less than 0.02 comparing the original DNN and its spiking equivalent. Additionally, we show that the SNN generated with this technique can be mapped to existing neuromorphic hardware, in our case the TrueNorth chip. Mapping to the chip involves 4-bit synaptic weight discretization and adjustment of the neuron thresholds. The resulting end-to-end system can take a user input, i.e., a word in a vocabulary of over 300,000 words, and estimate its sentiment on TrueNorth with a power consumption of approximately 50 µW.
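To illustrate why swapping ReLUs for integrate-and-fire neurons can preserve behavior, the sketch below simulates a single neuron driven by a constant input and compares its firing rate with the ReLU output. It assumes a unit threshold, reset by subtraction, and inputs in the range [0, 1]; these choices and the name `if_rate` are illustrative assumptions, not details confirmed by the abstract.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def if_rate(x: float, steps: int = 1000, threshold: float = 1.0) -> float:
    """Firing rate of an integrate-and-fire neuron under constant input x."""
    v = 0.0       # membrane potential
    spikes = 0
    for _ in range(steps):
        v += x    # integrate the input current
        if v >= threshold:
            v -= threshold  # reset by subtraction keeps the residual charge
            spikes += 1
    return spikes / steps


# Compare the spiking rate with the ReLU activation for a few inputs.
comparison = [(x, relu(x), if_rate(x)) for x in (-0.5, 0.0, 0.3, 0.75)]
```

Negative inputs never reach threshold, so the rate is zero, matching ReLU. For inputs above the threshold the rate saturates at one spike per step, which is one reason thresholds need adjusting when such a network is mapped to hardware.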
