Guenole Silvestre

MiTU-Net: A fine-tuned U-Net with SegFormer backbone for segmenting pubic symphysis-fetal head

Jan 27, 2024

Abstract: Ultrasound measurements have been examined as potential tools for predicting the likelihood of successful vaginal delivery. The angle of progression (AoP) is a measurable parameter that can be obtained during the initial stage of labor. The AoP is defined as the angle between a straight line along the longitudinal axis of the pubic symphysis (PS) and a line from the inferior edge of the PS to the leading edge of the fetal head (FH). However, measuring the AoP on ultrasound images is time-consuming and prone to error. To address this challenge, we propose the Mix Transformer U-Net (MiTU-Net) for automatic fetal head-pubic symphysis segmentation and AoP measurement. The MiTU-Net model is based on an encoder-decoder framework and uses a pre-trained efficient transformer to enhance feature representation. The efficient transformer encoder significantly reduces the number of trainable parameters in the encoder-decoder model. The effectiveness of the proposed method is demonstrated through experiments on a recent transperineal ultrasound dataset. Our model achieves competitive performance, ranking 5th among existing approaches. MiTU-Net offers an efficient method for automatic segmentation and AoP measurement, which can reduce errors and assist sonographers in clinical practice. Reproducibility: The framework implementation and models are available at https://github.com/13204942/MiTU-Net.
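The AoP definition above reduces to a single angle measured at the inferior edge of the PS. The sketch below computes that angle from three landmark points. It assumes the points have already been extracted from the predicted segmentation masks: the superior and inferior ends of the PS long axis, and the leading-edge point on the FH. That extraction step is not shown. The helper name `angle_of_progression` is hypothetical. The convention is also an assumption: the angle is taken between the ray running back toward the superior PS end and the ray to the FH point. This is an illustration, not the MiTU-Net implementation.

```python
import math


def angle_of_progression(ps_superior, ps_inferior, fh_point):
    """Return the AoP in degrees (hypothetical helper, illustration only).

    The vertex is the inferior edge of the pubic symphysis (PS). One ray
    runs back along the PS long axis toward its superior end; the other runs
    to the leading-edge point of the fetal head (FH). Points are (x, y) pairs.
    """
    ux, uy = ps_superior[0] - ps_inferior[0], ps_superior[1] - ps_inferior[1]
    vx, vy = fh_point[0] - ps_inferior[0], fh_point[1] - ps_inferior[1]
    if (ux == 0 and uy == 0) or (vx == 0 and vy == 0):
        raise ValueError("landmark points must differ from the PS inferior edge")
    cross = ux * vy - uy * vx  # |u||v| sin(theta), up to sign
    dot = ux * vx + uy * vy    # |u||v| cos(theta)
    # atan2(|cross|, dot) gives the unsigned angle in [0, 180] degrees
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical landmark coordinates in pixels, not taken from any dataset
aop = angle_of_progression(ps_superior=(0, 0), ps_inferior=(10, 0), fh_point=(20, -10))
print(f"AoP = {aop:.1f} degrees")  # AoP = 135.0 degrees
```

Under this convention, a more descended fetal head moves the FH point further along the extension of the PS axis beyond its inferior edge. The angle therefore grows toward 180 degrees.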

Lightweight Framework for Automated Kidney Stone Detection using coronal CT images

Nov 24, 2023

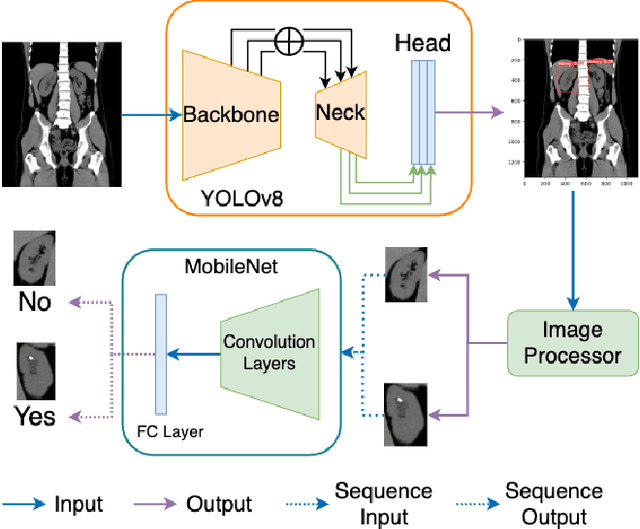

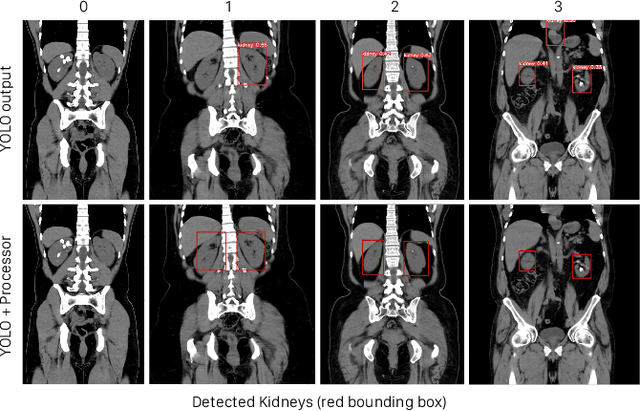

Abstract: Kidney stone disease results in millions of annual visits to emergency departments in the United States. Computed tomography (CT) scans serve as the standard imaging modality for efficient detection of kidney stones. Various approaches using convolutional neural networks (CNNs) have been proposed to automate the diagnosis of kidney stones. However, there is growing interest in deploying fast and efficient CNNs on edge devices in clinical practice. In this paper, we propose a lightweight fusion framework for kidney detection and kidney stone diagnosis on coronal CT images. In our design, we aim to minimize the computational cost of training and inference while keeping the approach fully automated. The experimental results indicate that our framework can achieve competitive outcomes using only 8% of the original training data, including an F1 score of 96% and a false negative (FN) error rate of 4%. Additionally, the average detection time per CT image on a CPU is 0.62 seconds. Reproducibility: The framework implementation and models are available on GitHub.
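The F1 score and FN error rate reported above are standard detection metrics. The sketch below computes them from true positive (TP), false positive (FP), and false negative (FN) counts. The helper name `detection_metrics` is hypothetical. Reading the "FN error rate" as FN / (TP + FN) is an assumption, since the abstract does not define it. The example counts are invented for illustration and are not results from the paper.

```python
def detection_metrics(tp, fp, fn):
    """Return (F1 score, false negative rate) from detection counts.

    F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall.
    The false negative rate is taken here as FN / (TP + FN), i.e. 1 - recall;
    this definition is an assumption, since the abstract does not define it.
    """
    if tp + fn == 0:
        raise ValueError("need at least one positive case (TP + FN > 0)")
    f1 = 2 * tp / (2 * tp + fp + fn)
    fnr = fn / (tp + fn)
    return f1, fnr


# Hypothetical counts for illustration, not results from the paper
f1, fnr = detection_metrics(tp=45, fp=5, fn=5)
print(f"F1 = {f1:.2f}, FN rate = {fnr:.2f}")  # F1 = 0.90, FN rate = 0.10
```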
