Guadalupe Quirarte

Deep Learning for Melt Pool Depth Contour Prediction From Surface Thermal Images via Vision Transformers

Apr 26, 2024

Abstract: Insufficient overlap between the melt pools produced during Laser Powder Bed Fusion (L-PBF) can lead to lack-of-fusion defects and degraded mechanical and fatigue performance. In-situ monitoring of the subsurface melt pool morphology requires specialized equipment that may not be readily accessible or scalable. Therefore, we introduce a machine learning framework that correlates in-situ two-color thermal images, captured via high-speed color imaging, with the two-dimensional profile of the melt pool cross-section. Specifically, we employ a hybrid CNN-Transformer architecture to relate single-bead, off-axis thermal image sequences to melt pool cross-section contours measured via optical microscopy. In this architecture, a ResNet model embeds the spatial information contained in each thermal image into a latent vector, while a Transformer model operates on the sequence of embedded vectors to extract temporal information. Our framework is able to model the curvature of the subsurface melt pool structure, with improved performance in high-energy-density regimes compared to analytical melt pool models. The performance of the model is evaluated through dimensional and geometric comparisons with the corresponding experimental melt pool observations.
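The abstract does not say which dimensional and geometric quantities are compared. As a minimal sketch, assuming a contour is a closed polygon of (x, z) points in micrometres with the substrate surface at z = 0, the width, depth, and enclosed area of a predicted and a measured cross-section could be compared as follows. The function names, the coordinate convention, and the choice of metrics are illustrative assumptions, not the authors' evaluation code.

```python
from typing import Dict, List, Tuple

# Assumed convention: (x, z) in micrometres, z = 0 at the substrate surface,
# negative z below the surface. This is not specified in the abstract.
Point = Tuple[float, float]


def contour_metrics(contour: List[Point]) -> Dict[str, float]:
    """Width, depth, and enclosed area of a closed cross-section contour."""
    xs = [p[0] for p in contour]
    zs = [p[1] for p in contour]
    width = max(xs) - min(xs)
    depth = -min(zs)  # deepest point below the assumed surface z = 0

    # Shoelace formula for the area of a simple polygon.
    twice_area = 0.0
    n = len(contour)
    for i in range(n):
        x0, z0 = contour[i]
        x1, z1 = contour[(i + 1) % n]
        twice_area += x0 * z1 - x1 * z0
    return {"width": width, "depth": depth, "area": abs(twice_area) / 2.0}


def relative_errors(predicted: List[Point], measured: List[Point]) -> Dict[str, float]:
    """Relative error of each metric, using the measured contour as reference."""
    pred = contour_metrics(predicted)
    meas = contour_metrics(measured)
    return {k: abs(pred[k] - meas[k]) / meas[k] for k in meas}


# Hypothetical triangular contours, used only to exercise the functions.
measured = [(-50.0, 0.0), (50.0, 0.0), (0.0, -80.0)]
predicted = [(-50.0, 0.0), (50.0, 0.0), (0.0, -72.0)]
errors = relative_errors(predicted, measured)
```

Here the hypothetical prediction underestimates the depth by 8 µm on an 80 µm deep contour, so the relative depth error is 10%.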

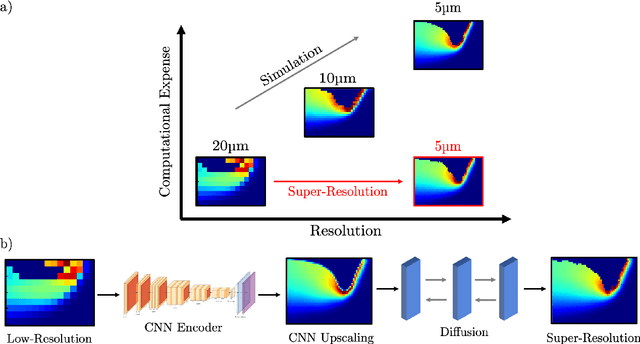

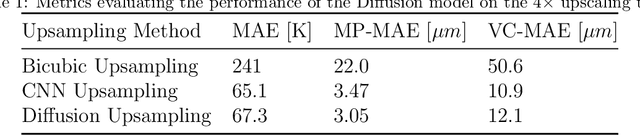

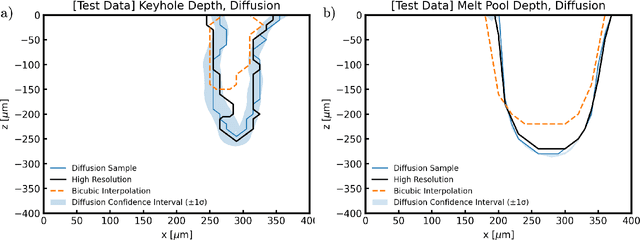

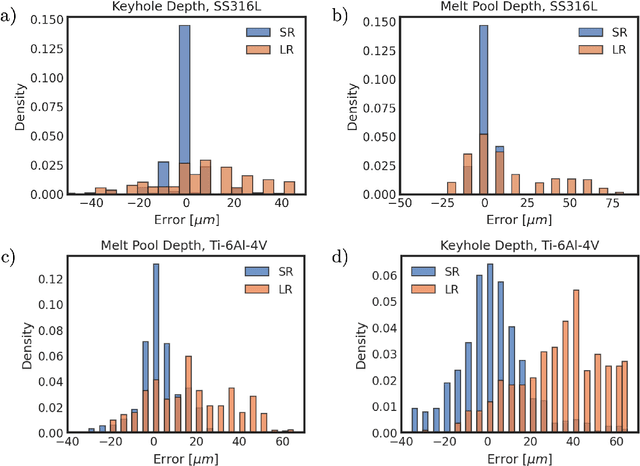

Inexpensive High Fidelity Melt Pool Models in Additive Manufacturing Using Generative Deep Diffusion

Nov 15, 2023

Abstract: Defects in laser powder bed fusion (L-PBF) parts often result from the meso-scale dynamics of the molten alloy near the laser, known as the melt pool. For instance, the melt pool can directly contribute to the formation of undesirable porosity, residual stress, and surface roughness in the final part. Experimental in-situ monitoring of the three-dimensional melt pool physical fields is challenging due to the short length and time scales involved in the process. Multi-physics simulation methods can describe the three-dimensional dynamics of the melt pool, but are computationally expensive at the mesh refinement required for accurate predictions of complex effects, such as the formation of keyhole porosity. Therefore, in this work, we develop a generative deep learning model based on the probabilistic diffusion framework to map low-fidelity, coarse-grained simulation information to its high-fidelity counterpart. By upscaling lightweight coarse-mesh simulations, we bypass the computational expense of conducting multiple high-fidelity simulations for analysis. Specifically, we implement a 2-D diffusion model to spatially upscale cross-sections of the coarsely simulated melt pool to their high-fidelity equivalent. We demonstrate that key metrics of the melting process, such as the temperature field, the melt pool dimensions, and the variability of the keyhole vapor cavity, are preserved between the ground-truth simulation data and the diffusion model output. In particular, we predict the melt pool depth to within 3 $\mu$m from low-fidelity input data 4$\times$ coarser than the high-fidelity simulations, reducing analysis time by two orders of magnitude.
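The abstract names the probabilistic diffusion framework but gives no details of the noise schedule or network. As a minimal sketch of the forward (noising) half of a standard denoising diffusion model, assuming a linear variance schedule with commonly used default values, a 2-D field can be noised in closed form as $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. The schedule values, function names, and toy field below are assumptions for illustration and are not taken from the paper.

```python
import math
import random
from typing import List

Field = List[List[float]]  # a 2-D cross-section, e.g. a normalized temperature field


def linear_beta_schedule(steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Linearly spaced noise variances (assumed values, not from the paper)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1)
            for t in range(steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def noise_field(x0: Field, t: int, alpha_bars: List[float],
                rng: random.Random) -> Field:
    """Sample x_t from x_0 in one step using the closed-form forward process."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [[signal * v + noise * rng.gauss(0.0, 1.0) for v in row] for row in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
toy_field = [[0.0, 0.5], [0.5, 1.0]]  # hypothetical normalized 2x2 field
noised = noise_field(toy_field, 500, alpha_bars, random.Random(0))
```

In a conditional setup of the kind the abstract describes, a denoising network would be trained to reverse this process for the high-fidelity field while receiving the coarse simulation as an additional input; that network is not sketched here.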
