Greg Burman

Optimal OnTheFly Feedback Control of Event Sensors

Aug 23, 2024

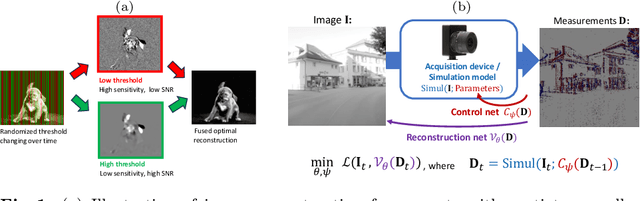

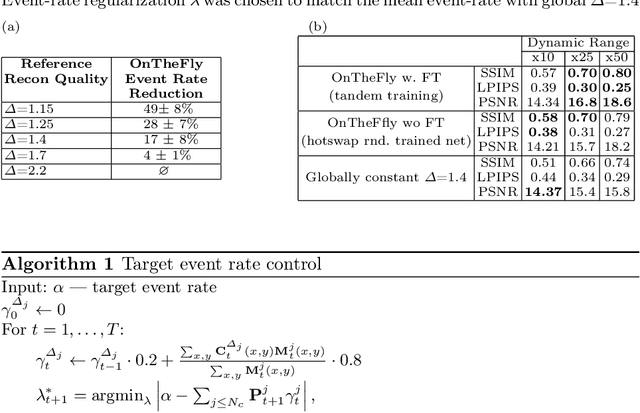

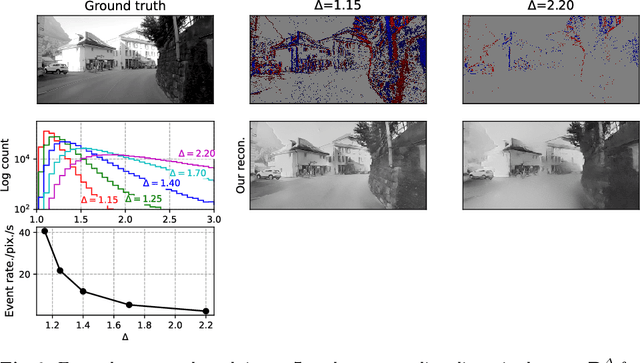

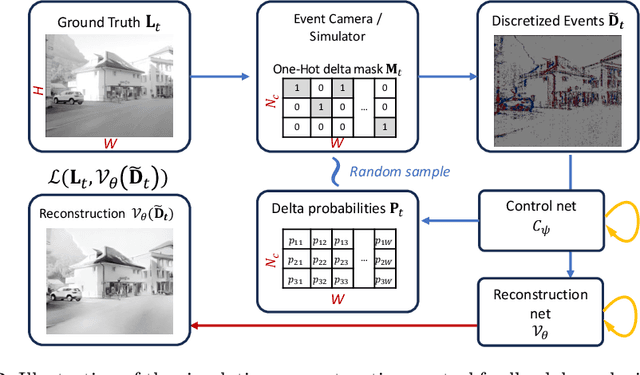

Abstract: Event-based vision sensors produce an asynchronous stream of events, each triggered when the intensity variation at a pixel exceeds a predefined threshold. Such sensors offer significant advantages, including reduced data redundancy, microsecond temporal resolution, and low power consumption, making them valuable for applications in robotics and computer vision. In this work, we consider the problem of video reconstruction from events and propose an approach for dynamic feedback control of activation thresholds, in which a controller network analyzes the previously emitted events and predicts the optimal distribution of activation thresholds for the following time segment. Additionally, we allow a user-defined target peak event rate, on which the control network is conditioned and for which it is optimized to predict the per-column activation thresholds that would eventually produce the best possible video reconstruction. The proposed OnTheFly control scheme is data-driven and trained end-to-end using a probabilistic relaxation of the discrete event representation. We demonstrate that our approach outperforms both fixed and randomly varying threshold schemes by 6-12% in terms of the LPIPS perceptual image dissimilarity metric and by 49% in terms of event rate, achieving superior reconstruction quality while enabling a fine-tuned balance between reconstruction accuracy and event rate. Additionally, we show that the sampling strategies produced by our OnTheFly control are interpretable and reflect the characteristics of the scene. Our results, derived from a physically accurate simulator, underline the promise of the proposed methodology in enhancing the utility of event cameras for image reconstruction and other downstream tasks, paving the way for hardware implementation of dynamic feedback EVS control in silicon.
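To make the role of per-column activation thresholds concrete, the following is a minimal sketch of threshold-triggered event generation, not the paper's simulator or controller. It assumes a frame-based idealization in which a pixel fires when its log intensity departs from its last reference level by at least the threshold of its column, and it emits at most one event per pixel per frame. The function name `generate_events`, the `eps` offset, and the `(t, y, x, polarity)` event layout are all hypothetical choices made for illustration.

```python
import numpy as np

def generate_events(frames, col_thresholds, eps=1e-3):
    """Idealized event generation with one activation threshold per column.

    frames: array of shape (T, H, W) with non-negative intensities.
    col_thresholds: array of shape (W,) with positive thresholds.
    Returns a list of (t, y, x, polarity) tuples, polarity in {-1, +1}.
    """
    # Work in log intensity; eps (an assumed offset) avoids log(0).
    log_frames = np.log(np.asarray(frames, dtype=np.float64) + eps)
    ref = log_frames[0].copy()  # per-pixel reference level
    # Broadcast the (W,) thresholds across all rows of the sensor.
    thr = np.broadcast_to(np.asarray(col_thresholds, dtype=np.float64), ref.shape)
    events = []
    for t in range(1, len(log_frames)):
        diff = log_frames[t] - ref
        fired = np.abs(diff) >= thr
        ys, xs = np.nonzero(fired)
        pol = np.sign(diff[ys, xs]).astype(int)
        events.extend(zip([t] * len(ys), ys.tolist(), xs.tolist(), pol.tolist()))
        # Simplification: a firing pixel resets its reference to the current level.
        ref[fired] = log_frames[t][fired]
    return events

# Usage: a 2x2 sensor whose brightness doubles at every frame.
frames = np.stack([np.full((2, 2), v) for v in (1.0, 2.0, 4.0)])
events = generate_events(frames, col_thresholds=np.array([0.5, 1.0]))
print(len(events))
```

In this toy input, the column with the lower threshold fires at every doubling, while the column with the higher threshold fires only once the accumulated change is large enough. Raising the threshold of a column therefore lowers its event rate at the cost of coarser sampling, which is the trade-off a threshold controller would have to balance.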
