Gopal Nookula

TelescopeML -- I. An End-to-End Python Package for Interpreting Telescope Datasets through Training Machine Learning Models, Generating Statistical Reports, and Visualizing Results

Jul 24, 2024

Abstract: We are on the verge of a revolutionary era in space exploration, thanks to advancements in telescopes such as the James Webb Space Telescope (\textit{JWST}). High-resolution, high signal-to-noise spectra from exoplanet and brown dwarf atmospheres have been collected over the past few decades, requiring the development of accurate and reliable pipelines and tools for their analysis. Accurately and swiftly determining the spectroscopic parameters from the observational spectra of these objects is crucial for understanding their atmospheric composition and guiding future follow-up observations. \texttt{TelescopeML} is a Python package developed to perform three main tasks: 1. Process the synthetic astronomical datasets for training a CNN model and prepare the observational dataset for later use for prediction; 2. Train a CNN model by implementing the optimal hyperparameters; and 3. Deploy the trained CNN models on the actual observational data to derive the output spectroscopic parameters.

* Please find the accepted paper with complete reference list at https://joss.theoj.org/papers/10.21105/joss.06346

Shadows Aren't So Dangerous After All: A Fast and Robust Defense Against Shadow-Based Adversarial Attacks

Aug 18, 2022

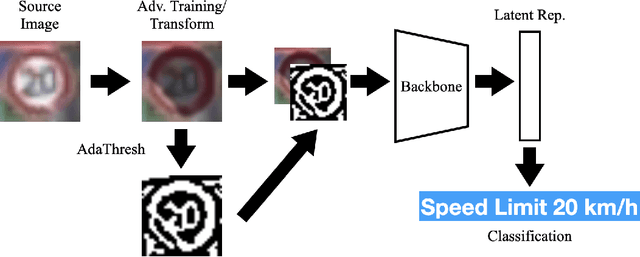

Abstract: Robust classification is essential in tasks like autonomous vehicle sign recognition, where the downsides of misclassification can be grave. Adversarial attacks threaten the robustness of neural network classifiers, causing them to consistently and confidently misidentify road signs. One such class of attack, shadow-based attacks, causes misidentifications by applying a natural-looking shadow to input images, resulting in road signs that appear natural to a human observer but confuse these classifiers. Current defenses against such attacks use a simple adversarial training procedure and achieve only 25\% and 40\% robustness on the GTSRB and LISA test sets, respectively. In this paper, we propose a robust, fast, and generalizable method, designed to defend against shadow attacks in the context of road sign recognition, that augments source images with binary adaptive threshold and edge maps. We empirically show its robustness against shadow attacks, and reformulate the problem to show its similarity to $\varepsilon$ perturbation-based attacks. Experimental results show that our edge defense results in 78\% robustness while maintaining 98\% benign test accuracy on the GTSRB test set, with similar results from our threshold defense. A link to our code is in the paper.
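To make the augmentation idea concrete, here is a minimal NumPy sketch of appending a binary adaptive-threshold map and an edge map to an RGB image as extra channels. It is an illustration under stated assumptions, not the authors' implementation: the function names (`box_mean`, `sobel_edges`, `augment`), the local-mean thresholding rule, the Sobel edge operator, and the parameters `k` and `c` are all hypothetical choices, and the abstract does not say how the maps are computed or combined with the source image.

```python
import numpy as np


def box_mean(gray, k):
    """Mean over each k x k neighbourhood (k odd, edge-padded), via an integral image."""
    pad = k // 2
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = gray.shape
    total = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
             - integral[k:k + h, :w] + integral[:h, :w])
    return total / (k * k)


def sobel_edges(gray):
    """Sobel gradient magnitude, scaled to [0, 1]."""
    g = np.pad(gray.astype(np.float64), 1, mode="edge")
    gx = ((g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2]))
    gy = ((g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:])
          - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:]))
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag


def augment(image, k=11, c=0.02):
    """Append threshold and edge channels to an H x W x 3 image with values in [0, 1].

    Assumption: a pixel is "on" when it is brighter than its local mean minus c.
    """
    gray = image.mean(axis=2)
    thresh = (gray > box_mean(gray, k) - c).astype(np.float64)
    edges = sobel_edges(gray)
    return np.dstack([image, thresh, edges])  # H x W x 5


# Usage: a synthetic image whose left half is dark and right half is bright.
img = np.zeros((32, 32, 3))
img[:, 16:] = 1.0
out = augment(img)
print(out.shape)
```

Both maps in this sketch depend on local contrast rather than absolute brightness, which is one plausible reason such channels could be less sensitive to a shadow overlay; the paper itself should be consulted for the actual design and its justification.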
