Gongyan Li

Attention Round for Post-Training Quantization

Jul 07, 2022

Abstract: Quantization methods for neural network models currently fall into two main categories: post-training quantization (PTQ) and quantization-aware training (QAT). Post-training quantization needs only a small amount of data to complete the quantization process, but the resulting quantized model does not perform as well as one produced by quantization-aware training. This paper presents a novel quantization method called Attention Round. The method gives a parameter w the opportunity to be mapped to every possible quantized value, rather than only the two quantized values nearest to w. The probability of mapping to a given quantized value is negatively correlated with its distance from w and decays as a Gaussian function of that distance. In addition, this paper uses the lossy coding length as a measure for assigning bit widths to the different layers of the model, which addresses mixed-precision quantization while avoiding the need to solve a combinatorial optimization problem. Quantization experiments on different models confirm the effectiveness of the proposed method. For ResNet18 and MobileNetV2, the proposed post-training quantization requires only 1,024 training samples and 10 minutes to complete the quantization process, and achieves performance on par with quantization-aware training.
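The Gaussian-decay mapping described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the `sigma` width parameter, and the choice to sample a level directly are assumptions made here for illustration. The sketch only shows how a weight can receive nonzero probability for every level of a quantization grid, with the probability falling off as a Gaussian of the distance.

```python
import numpy as np

def gaussian_round_probs(w, levels, sigma):
    """Probability of mapping weight w to each quantized level.

    Assumption: probabilities are proportional to exp(-(q - w)^2 / (2 sigma^2)),
    normalized over all levels. `sigma` is a hypothetical width parameter.
    """
    levels = np.asarray(levels, dtype=np.float64)
    logits = -((levels - w) ** 2) / (2.0 * sigma ** 2)
    logits -= logits.max()          # subtract the max for numerical stability
    p = np.exp(logits)
    return p / p.sum()

def stochastic_quantize(w, levels, sigma, rng):
    """Sample one quantized value for w according to the Gaussian weights."""
    levels = np.asarray(levels, dtype=np.float64)
    p = gaussian_round_probs(w, levels, sigma)
    return rng.choice(levels, p=p)

# Example: a uniform 3-bit grid with step 0.25 (illustrative values only).
levels = np.arange(-4, 4) * 0.25
probs = gaussian_round_probs(0.3, levels, sigma=0.2)
sample = stochastic_quantize(0.3, levels, sigma=0.2, rng=np.random.default_rng(0))
```

A smaller `sigma` concentrates the probability on the nearest level, which approaches ordinary round-to-nearest; a larger `sigma` spreads it over more distant levels.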

Fastidious Attention Network for Navel Orange Segmentation

Mar 26, 2020

Abstract: Deep learning achieves excellent performance in many domains. We apply it to navel orange semantic segmentation to solve two problems, distinguishing defect categories and identifying the stem end and blossom end, and we also propose a fastidious attention mechanism to further improve model performance. This lightweight attention mechanism includes two learnable parameters, activations and thresholds, to capture long-range dependencies. Specifically, the threshold selects part of the spatial feature map and the activation excites that region. Based on activations and thresholds trained on different types of feature maps, we design a fastidious self-attention module (FSAM) and a fastidious inter-attention module (FIAM). We then construct the Fastidious Attention Network (FANet), which uses U-Net as the backbone and embeds these two modules, to perform semantic segmentation of stem end, blossom end, flaw and ulcer. In comparisons with several state-of-the-art deep-learning-based networks on our navel orange dataset, our network achieves the best performance, with pixel accuracy of 99.105%, mean accuracy of 77.468%, mean IU of 70.375% and frequency-weighted IU of 98.335%. The embedded modules also improve discrimination among the 5 categories (including background); in particular, the IU for flaw increases by 3.165%.

A Real-Time Tiny Detection Model for Stem End and Blossom End of Navel Orange

May 24, 2019

Abstract: To distinguish the stem end and blossom end of a navel orange from its black spots, we propose a real-time tiny detection model (RTTD) with low computational cost, a compact architecture and high detection accuracy. In particular, based on the characteristics of the data, we apply pure dense connectivity to constrain and simplify the design of the model architecture, and use k-means clustering to set the sizes and aspect ratios of the default boxes. The architecture is based on deeply supervised object detectors (DSOD): it reduces some components, such as dense blocks and prediction layers, for efficiency, and adds auxiliary structures, such as a Squeeze-and-Excitation layer and Swish, for accuracy. We also create a dataset in Pascal VOC format annotated with three types of detection targets: stem end, blossom end and black spot. Experimental results on our orange dataset confirm that RTTD is competitive with state-of-the-art one-stage detectors such as SSD, DSOD, YOLOv2, YOLOv3, RFB and FSSD, achieving 87.479% mAP at 131 FPS with only 5.812M parameters.
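As a rough illustration of using k-means clustering to choose default-box sizes and aspect ratios, the sketch below clusters annotated box (width, height) pairs with plain Lloyd iterations. The abstract does not state the distance metric or the number of clusters, so the Euclidean distance, the function name `kmeans_boxes`, and the sample boxes are all assumptions; some detectors use an IoU-based distance instead.

```python
import numpy as np

def kmeans_boxes(wh, k, iters=50, seed=0):
    """Cluster (width, height) pairs with plain k-means (Euclidean distance).

    Returns the cluster centers (candidate default-box sizes) and the
    cluster label assigned to each input box.
    """
    wh = np.asarray(wh, dtype=np.float64)
    rng = np.random.default_rng(seed)
    # Initialize centers from k distinct boxes picked at random.
    centers = wh[rng.choice(len(wh), size=k, replace=False)]
    for _ in range(iters):
        # Distance of every box to every center, shape (n_boxes, k).
        d = np.linalg.norm(wh[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        # Recompute each center; keep the old one if its cluster is empty.
        new_centers = np.array([
            wh[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers, labels

# Hypothetical annotated boxes as (width, height) in pixels.
boxes = [(10, 10), (12, 10), (11, 11), (40, 20), (42, 20), (41, 22)]
centers, labels = kmeans_boxes(boxes, k=2)
aspect_ratios = centers[:, 0] / centers[:, 1]   # width / height per default box
```

Each center gives one default-box size, and its width-to-height ratio gives the matching aspect ratio.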

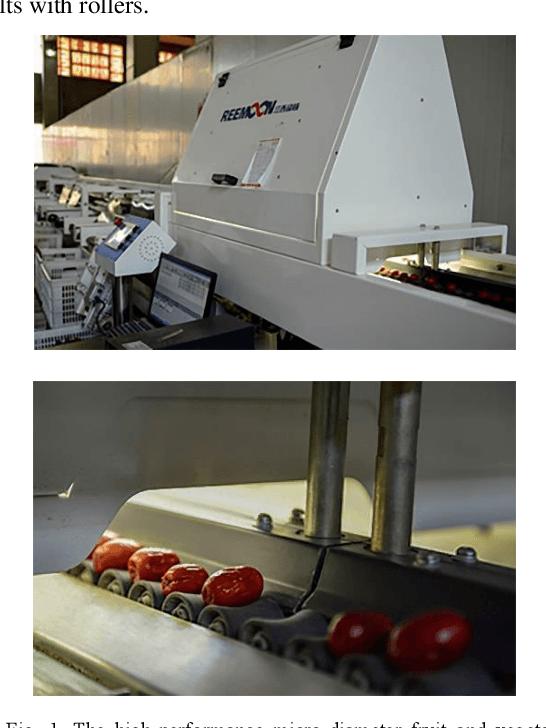

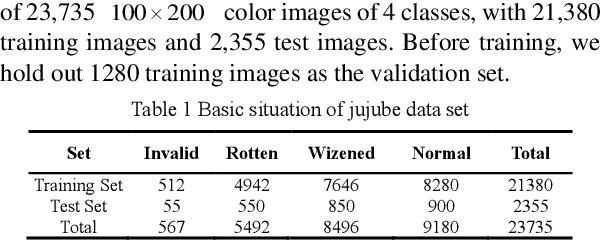

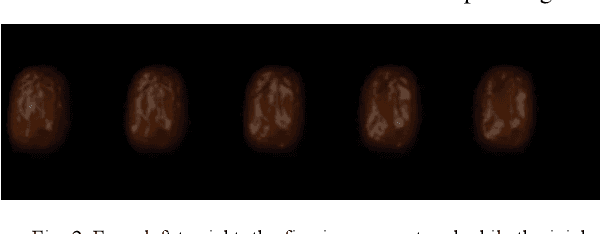

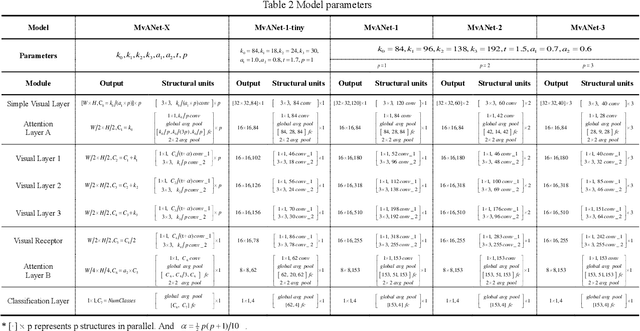

Multi-vision Attention Networks for On-line Red Jujube Grading

Mar 31, 2019

Abstract: To solve the red jujube classification problem, this paper designs a convolutional neural network model with low computational cost and high classification accuracy. The architecture of the model is inspired by the multi-visual mechanism of organisms and by DenseNet. To further improve the model, we add the attention mechanism of SE-Net. We also construct a dataset containing 23,735 red jujube images captured by a jujube grading system. According to the appearance of the jujubes and the characteristics of the grading system, the dataset is divided into four classes: invalid, rotten, wizened and normal. Numerical experiments show that the classification accuracy of our model reaches 91.89%, which is comparable to that of DenseNet-121, InceptionV3, InceptionV4 and Inception-ResNet v2. In addition, our model achieves real-time performance.
