Giulio Filippi

Intersectional Fairness: A Fractal Approach

Feb 24, 2023

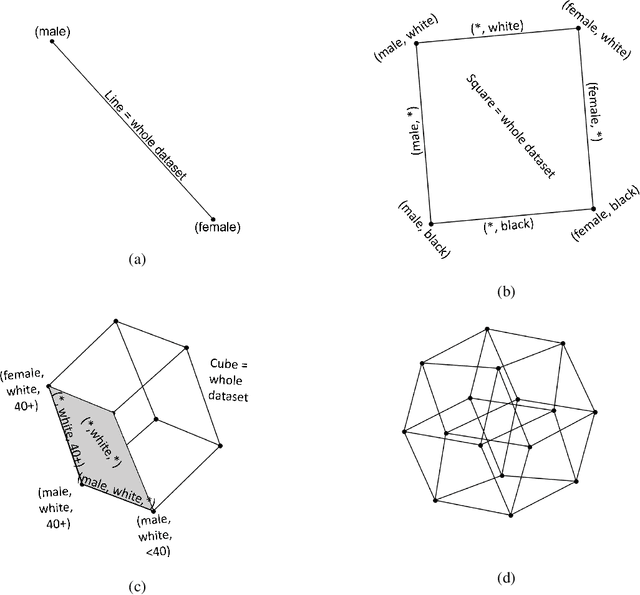

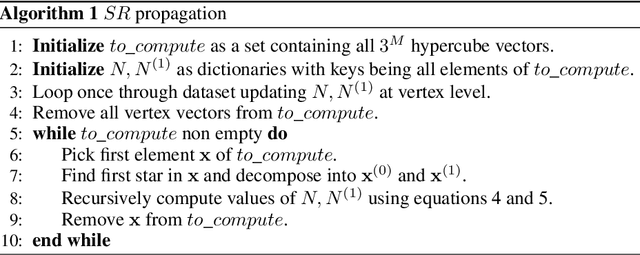

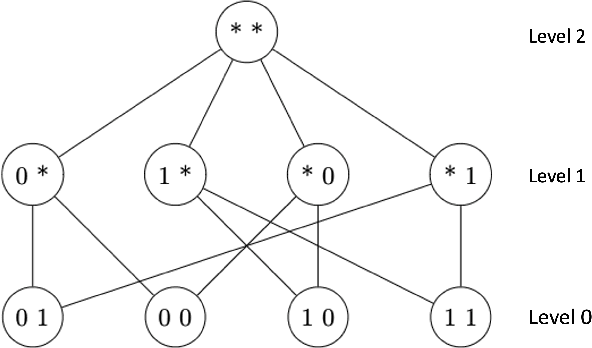

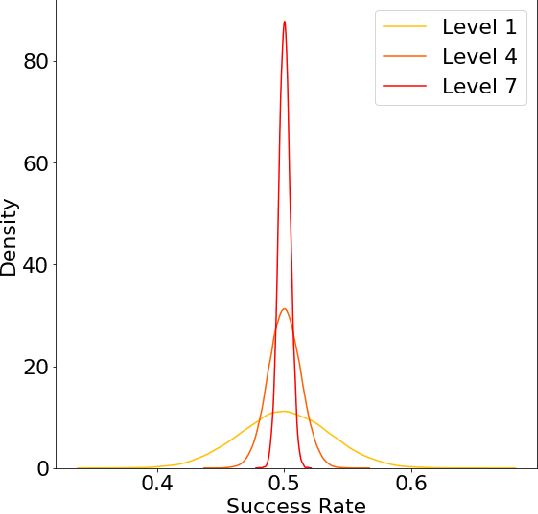

Abstract: The issue of fairness in AI has received an increasing amount of attention in recent years. The problem can be approached by looking at different protected attributes (e.g., ethnicity, gender, etc.) independently, but fairness for individual protected attributes does not imply intersectional fairness. In this work, we frame the problem of intersectional fairness within a geometrical setting. We project our data onto a hypercube, and split the analysis of fairness by levels, where each level encodes the number of protected attributes we are intersecting over. We prove mathematically that, while fairness does not propagate "down" the levels, it does propagate "up" the levels. This means that ensuring fairness for all subgroups at the lowest intersectional level (e.g., black women, white women, black men and white men) will necessarily result in fairness at all levels above it, including each of the protected attributes (e.g., ethnicity and gender) taken independently. We also derive a formula describing the variance of the set of estimated success rates on each level, under the assumption of perfect fairness. Using this theoretical finding as a benchmark, we define a family of metrics which capture overall intersectional bias. Finally, we propose that fairness can be metaphorically thought of as a "fractal" problem. In fractals, patterns at the smallest scale repeat at a larger scale. We see from this analogy that tackling the problem at the lowest possible level, in a bottom-up manner, leads to the natural emergence of fair AI. We suggest that trustworthiness is necessarily an emergent, fractal and relational property of the AI system.
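The "up but not down" claim can be checked on a toy example. The sketch below is only an illustration under stated assumptions, not the paper's method: fairness is taken to mean equal success rates across groups, and the counts, the `rate`, `rates_by_level` and `is_fair` helpers, and the two datasets are all hypothetical.

```python
from fractions import Fraction

def rate(cells):
    """Pooled success rate over a list of (successes, total) cells."""
    return Fraction(sum(s for s, _ in cells), sum(n for _, n in cells))

def rates_by_level(data):
    """Return rates for the intersectional subgroups and for each single attribute value."""
    subgroup = {key: rate([cell]) for key, cell in data.items()}
    marginal = {}
    for axis in range(2):  # axis 0: ethnicity, axis 1: gender
        for value in sorted({key[axis] for key in data}):
            cells = [cell for key, cell in data.items() if key[axis] == value]
            marginal[value] = rate(cells)
    return subgroup, marginal

def is_fair(rates):
    """Assumed fairness notion: every group has exactly the same success rate."""
    return len(set(rates.values())) == 1

# Hypothetical counts: (successes, total) per intersectional subgroup.
# Every subgroup has rate 1/4, with unequal group sizes.
fair_bottom = {
    ("black", "woman"): (10, 40),
    ("black", "man"): (5, 20),
    ("white", "woman"): (15, 60),
    ("white", "man"): (20, 80),
}

# Hypothetical counts where each attribute looks fair on its own,
# but the intersections do not.
fair_top_only = {
    ("black", "woman"): (30, 50),
    ("black", "man"): (10, 50),
    ("white", "woman"): (10, 50),
    ("white", "man"): (30, 50),
}

for name, data in [("fair_bottom", fair_bottom), ("fair_top_only", fair_top_only)]:
    subgroup, marginal = rates_by_level(data)
    print(name, "subgroups fair:", is_fair(subgroup), "| marginals fair:", is_fair(marginal))
```

In the first dataset, equal subgroup rates force equal marginal rates because each marginal rate is a weighted average of identical values. The second dataset shows why the converse fails: the marginals agree while the subgroup rates differ.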

Local Law 144: A Critical Analysis of Regression Metrics

Feb 08, 2023

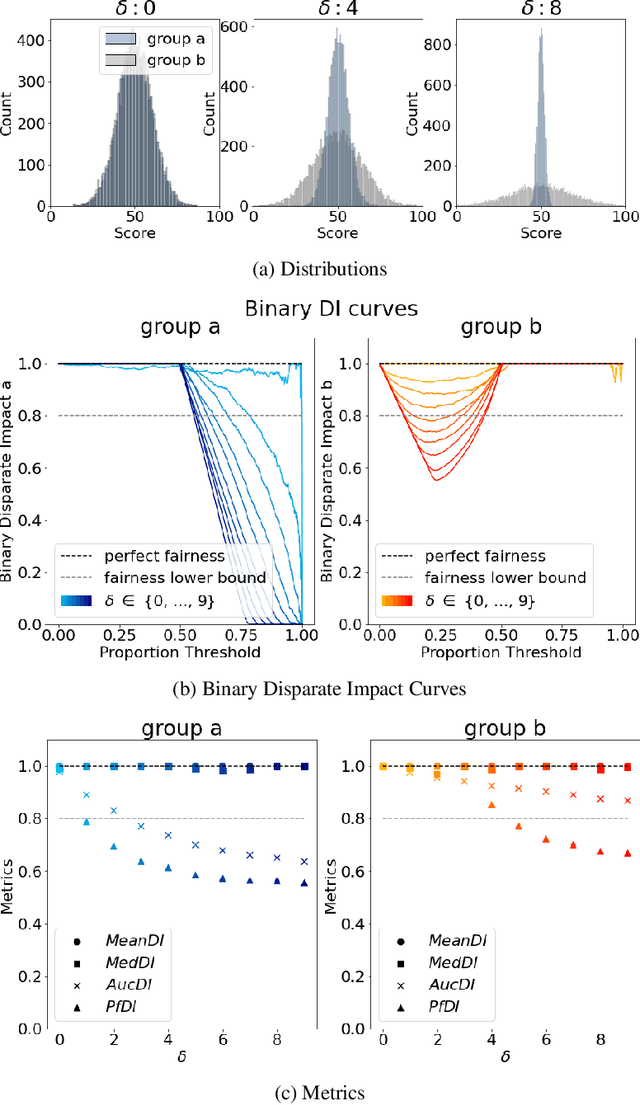

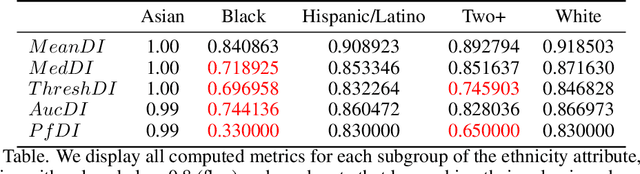

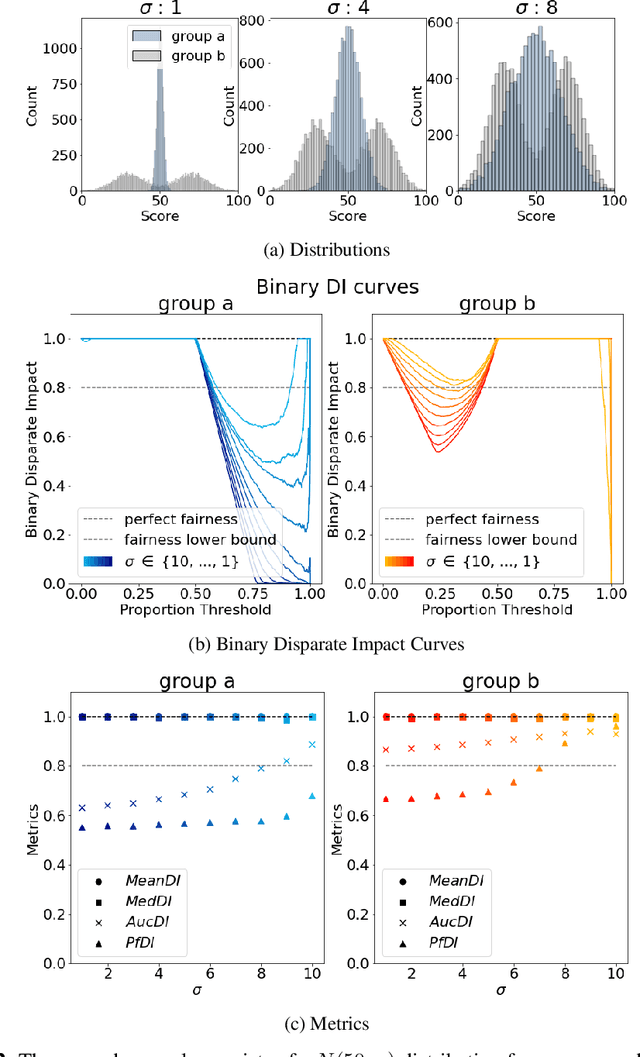

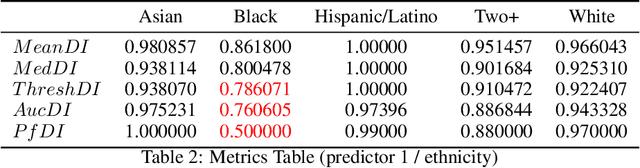

Abstract: The use of automated decision tools in recruitment has received an increasing amount of attention. In November 2021, the New York City Council passed legislation (Local Law 144) that mandates bias audits of Automated Employment Decision Tools. From 15th April 2023, companies that use automated tools for hiring or promoting employees are required to have these systems audited by an independent entity. Auditors are asked to compute bias metrics that compare outcomes for different groups, based on sex/gender and race/ethnicity categories at a minimum. Local Law 144 proposes novel bias metrics for regression tasks (scenarios where the automated system scores candidates with a continuous range of values). A previous version of the legislation proposed a bias metric that compared the mean scores of different groups. The revised bias metric compares the proportion of candidates in each group that falls above the median. In this paper, we argue that both metrics fail to capture distributional differences over the whole domain, and therefore cannot reliably detect bias. We first introduce two metrics as possible alternatives to the legislation's metrics. We then compare these metrics over a range of theoretical examples, for which the metrics proposed in the legislation seem to underestimate bias. Finally, we study real data and show that the legislation's metrics can similarly fail in a real-world recruitment application.
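A small numerical sketch can make the argument concrete. It rests on several assumptions that are not taken from the paper or the legislation text: the scores are invented, the median is computed over the pooled candidates, and the hiring cut-off of 80 is hypothetical. It does not implement the paper's alternative metrics; it only shows two groups that agree on both legislation-style summaries while differing elsewhere in the distribution.

```python
from statistics import mean, median

# Hypothetical scores on a 0-100 scale for two groups of candidates.
group_a = [40, 40, 60, 60]
group_b = [0, 0, 100, 100]

def share_above(scores, threshold):
    """Fraction of scores strictly above the given threshold."""
    return sum(score > threshold for score in scores) / len(scores)

# Mean-based comparison (the earlier metric described above).
mean_a, mean_b = mean(group_a), mean(group_b)

# Median-based comparison (the revised metric), assuming a pooled median.
pooled_median = median(group_a + group_b)
above_median_a = share_above(group_a, pooled_median)
above_median_b = share_above(group_b, pooled_median)

# A hypothetical hiring cut-off placed away from the median.
cutoff = 80
pass_a = share_above(group_a, cutoff)
pass_b = share_above(group_b, cutoff)

print("means:", mean_a, mean_b)
print("share above pooled median:", above_median_a, above_median_b)
print("share above cut-off:", pass_a, pass_b)
```

Both groups have the same mean and the same share above the pooled median, so either summary would report no disparity, yet at the assumed cut-off half of one group passes and none of the other does.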
