Giri Krishnan

What Variables Affect Out-Of-Distribution Generalization in Pretrained Models?

May 23, 2024

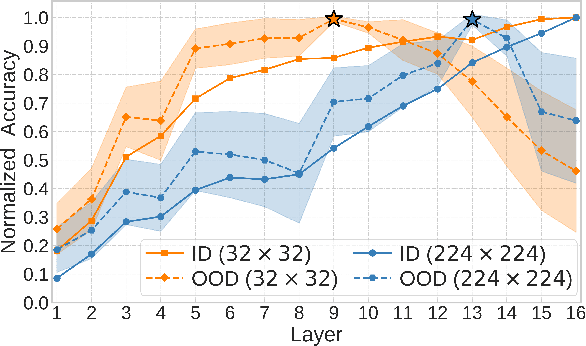

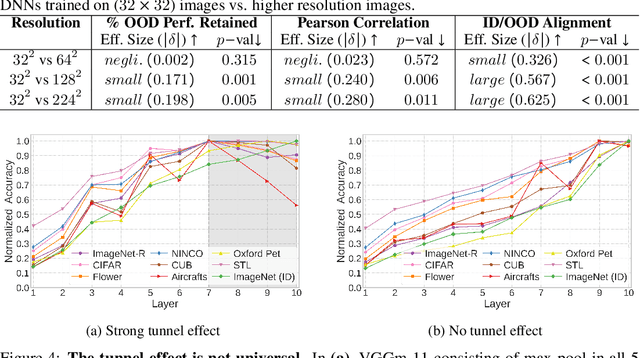

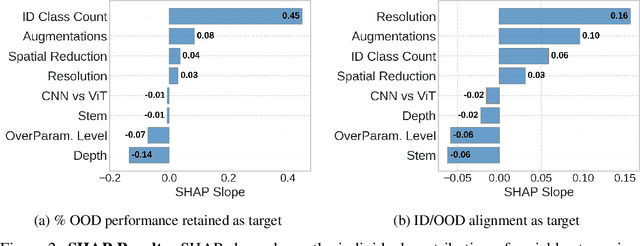

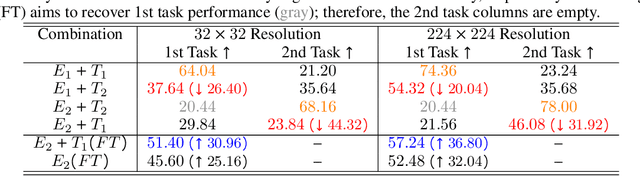

Abstract: Embeddings produced by pre-trained deep neural networks (DNNs) are widely used; however, their efficacy for downstream tasks can vary widely. We study the factors influencing out-of-distribution (OOD) generalization of pre-trained DNN embeddings through the lens of the tunnel effect hypothesis, which suggests deeper DNN layers compress representations and hinder OOD performance. Contrary to earlier work, we find the tunnel effect is not universal. Based on 10,584 linear probes, we study the conditions that mitigate the tunnel effect by varying DNN architecture, training dataset, image resolution, and augmentations. We quantify each variable's impact using a novel SHAP analysis. Our results emphasize the danger of generalizing findings from toy datasets to broader contexts.

Sleep-Like Unsupervised Replay Improves Performance when Data are Limited or Unbalanced

Feb 12, 2024

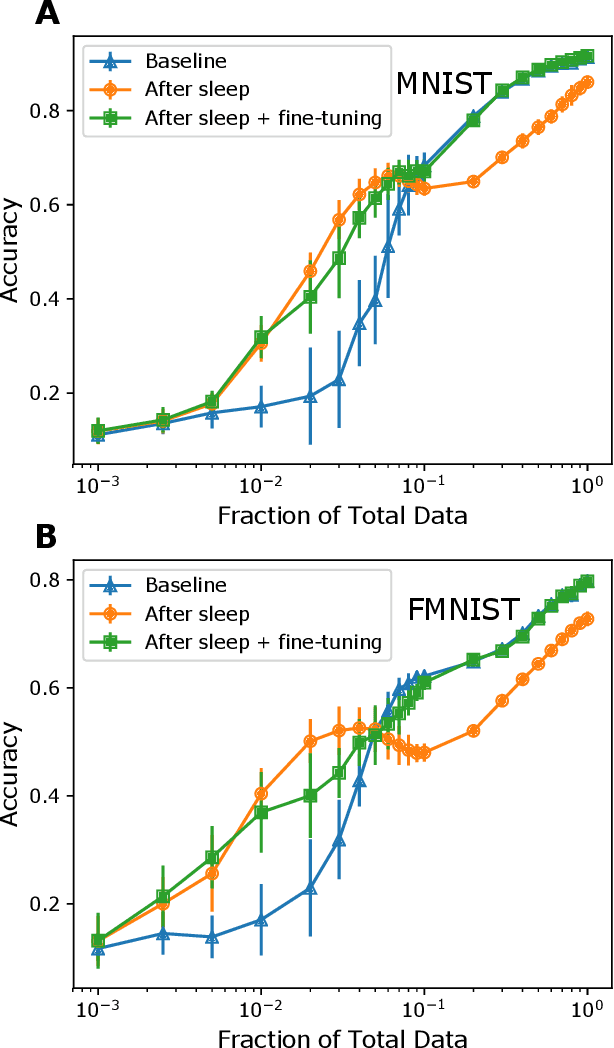

Abstract: The performance of artificial neural networks (ANNs) degrades when training data are limited or imbalanced. In contrast, the human brain can learn quickly from just a few examples. Here, we investigated the role of sleep in improving the performance of ANNs trained with limited data on the MNIST and Fashion MNIST datasets. Sleep was implemented as an unsupervised phase with local Hebbian-type learning rules. We found a significant boost in accuracy after the sleep phase for models trained with limited data in the range of 0.5-10% of total MNIST or Fashion MNIST datasets. When more than 10% of the total data was used, sleep alone had a slight negative impact on performance, but this was remedied by fine-tuning on the original data. This study sheds light on a potential synaptic weight dynamics strategy employed by the brain during sleep to enhance memory performance when training data are limited or imbalanced.
