Gil Segev

Crypto-Oriented Neural Architecture Design

Nov 27, 2019

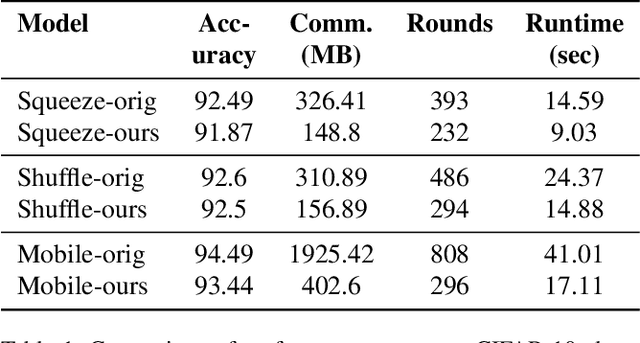

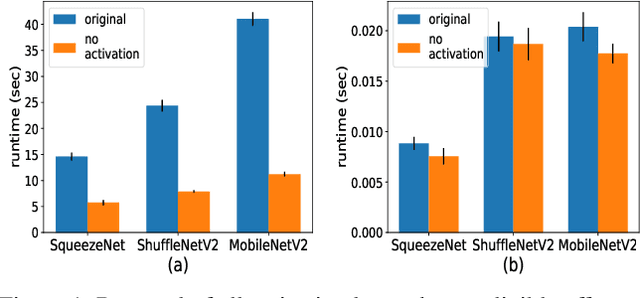

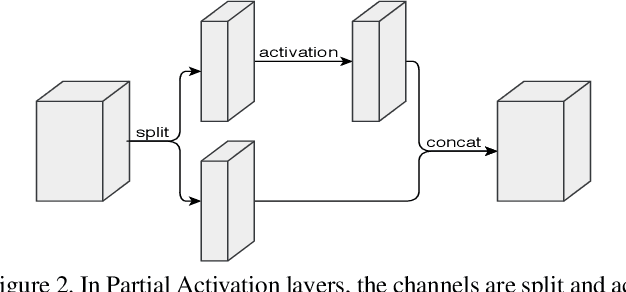

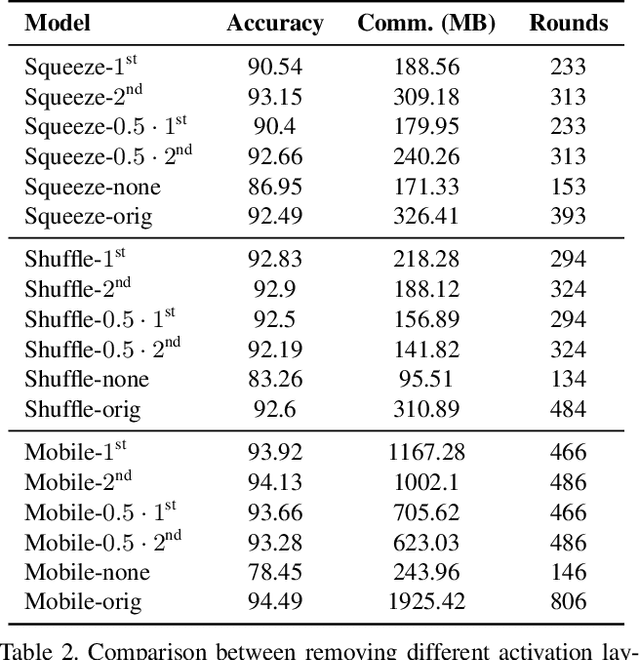

Abstract: As neural networks revolutionize many applications, significant privacy concerns emerge. Owners of private data wish to use remote neural network services while ensuring that their data cannot be interpreted by others. Service providers wish to keep their models private to safeguard their intellectual property. Such privacy conflicts may slow the adoption of neural networks in sensitive domains such as healthcare. The cryptography community has addressed privacy issues in the context of secure computation. However, secure computation protocols have known performance issues. For example, the runtime of secure inference in deep neural networks is three orders of magnitude longer than that of non-secure inference. Therefore, much research effort addresses the optimization of cryptographic protocols for secure inference. We take a complementary approach and provide design principles for crypto-oriented neural network architectures that reduce the runtime of secure inference. The principles are evaluated on three state-of-the-art architectures: SqueezeNet, ShuffleNetV2, and MobileNetV2. Our novel method significantly improves the efficiency of secure inference on common evaluation metrics.
