Gil Luyet

Exploiting Low-dimensional Structures to Enhance DNN Based Acoustic Modeling in Speech Recognition

Jan 22, 2016

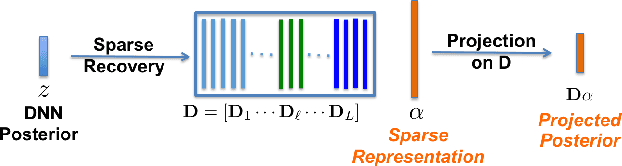

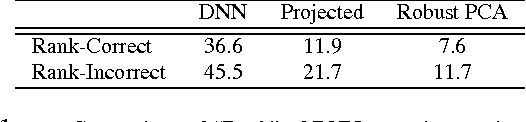

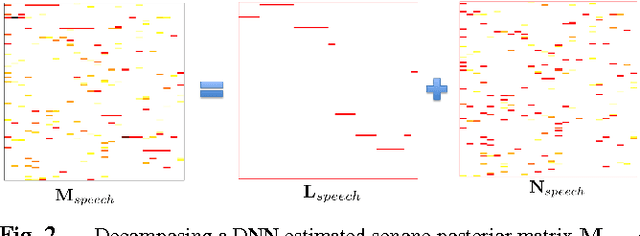

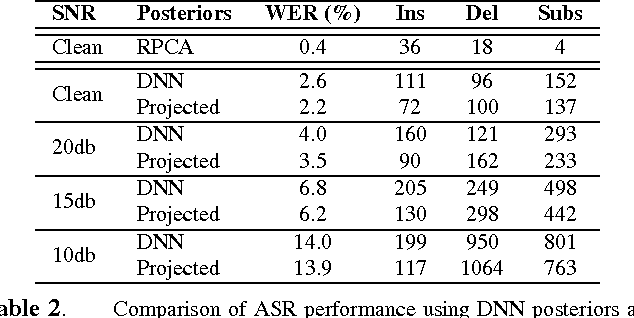

Abstract:We propose to model the acoustic space of deep neural network (DNN) class-conditional posterior probabilities as a union of low-dimensional subspaces. To that end, the training posteriors are used for dictionary learning and sparse coding. Sparse representation of the test posteriors using this dictionary enables projection to the space of training data. Relying on the fact that the intrinsic dimensions of the posterior subspaces are indeed very small and the matrix of all posteriors belonging to a class has a very low rank, we demonstrate how low-dimensional structures enable further enhancement of the posteriors and rectify the spurious errors due to mismatch conditions. The enhanced acoustic modeling method leads to improvements in continuous speech recognition task using hybrid DNN-HMM (hidden Markov model) framework in both clean and noisy conditions, where upto 15.4% relative reduction in word error rate (WER) is achieved.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge