Ghodai Abdelrahman

DBE-KT22: A Knowledge Tracing Dataset Based on Online Student Evaluation

Aug 19, 2022

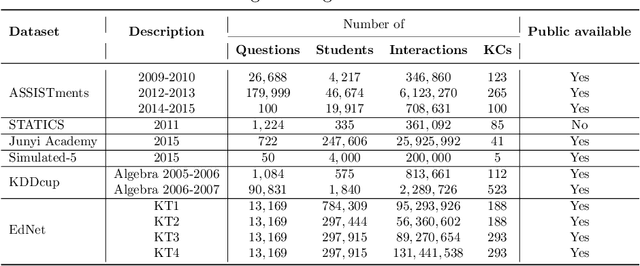

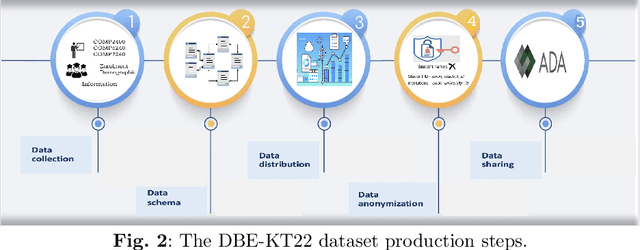

Abstract: Online education has gained increasing importance over the last decade for providing affordable, high-quality education to students worldwide. This has been further magnified during the global pandemic as more students switched to studying online. The majority of online education tasks, e.g., course recommendation, exercise recommendation, or automated evaluation, depend on tracking students' knowledge progress. This is known as the *Knowledge Tracing* problem in the literature. Addressing this problem requires collecting student evaluation data that can reflect their knowledge evolution over time. In this paper, we propose a new knowledge tracing dataset named Database Exercises for Knowledge Tracing (DBE-KT22) that is collected from an online student exercise system in a course taught at the Australian National University. We discuss the characteristics of the DBE-KT22 dataset and contrast it with the existing datasets in the knowledge tracing literature. Our dataset is available for public access through the Australian Data Archive platform.

Knowledge Tracing: A Survey

Jan 08, 2022

Abstract: Humans' ability to transfer knowledge through teaching is one of the essential aspects of human intelligence. A human teacher can track the knowledge of students to customize the teaching to students' needs. With the rise of online education platforms, there is a similar need for machines to track the knowledge of students and tailor their learning experience. This is known as the Knowledge Tracing (KT) problem in the literature. Effectively solving the KT problem would unlock the potential of computer-aided education applications such as intelligent tutoring systems, curriculum learning, and learning materials recommendation. Moreover, from a more general viewpoint, a student may represent any kind of intelligent agent, including both human and artificial agents. Thus, the potential of KT can be extended to any machine teaching application scenario that seeks to customize the learning experience for a student agent (i.e., a machine learning model). In this paper, we provide a comprehensive and systematic review of the KT literature. We cover a broad range of methods, from the early attempts to the recent state-of-the-art methods using deep learning, while highlighting the theoretical aspects of models and the characteristics of benchmark datasets. In addition, we shed light on key modelling differences between closely related methods and summarize them in an easy-to-understand format. Finally, we discuss current research gaps in the KT literature and possible future research and application directions.

Learning Data Teaching Strategies Via Knowledge Tracing

Nov 13, 2021

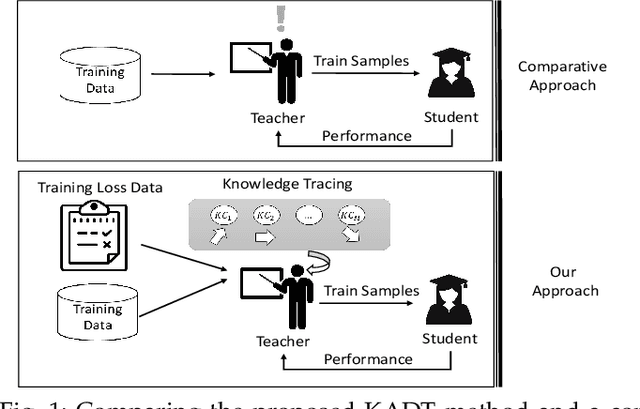

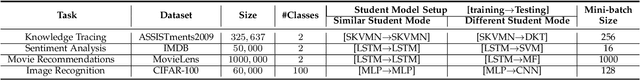

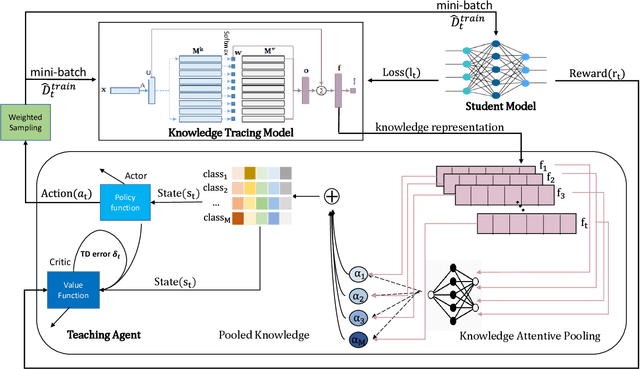

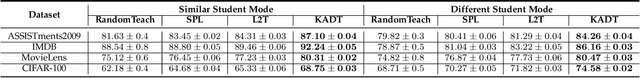

Abstract: Teaching plays a fundamental role in human learning. Typically, a human teaching strategy would involve assessing a student's knowledge progress in order to tailor the teaching materials in a way that enhances the learning progress. A human teacher would achieve this by tracing a student's knowledge over important learning concepts in a task. However, such a teaching strategy is not yet well exploited in machine learning, as current machine teaching methods tend to directly assess the progress on individual training samples without paying attention to the underlying learning concepts in a learning task. In this paper, we propose a novel method, called Knowledge Augmented Data Teaching (KADT), which can optimize a data teaching strategy for a student model by tracing its knowledge progress over multiple learning concepts in a learning task. Specifically, the KADT method incorporates a knowledge tracing model to dynamically capture the knowledge progress of a student model in terms of latent learning concepts. We then develop an attention pooling mechanism to distill knowledge representations of a student model with respect to class labels, which enables the development of a data teaching strategy on critical training samples. We have evaluated the performance of the KADT method on four different machine learning tasks: knowledge tracing, sentiment analysis, movie recommendation, and image classification. Comparisons with state-of-the-art methods empirically validate that KADT consistently outperforms them on all tasks.

Deep Graph Memory Networks for Forgetting-Robust Knowledge Tracing

Aug 18, 2021

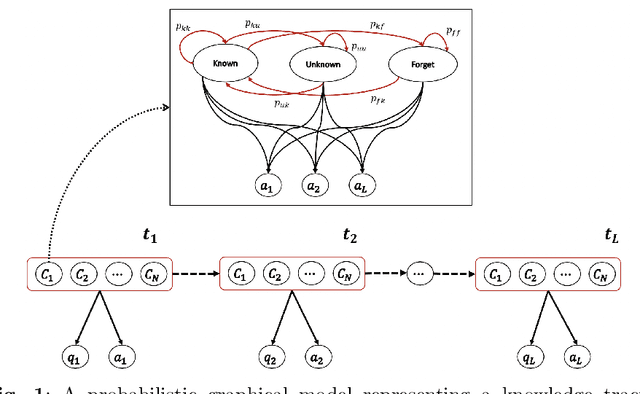

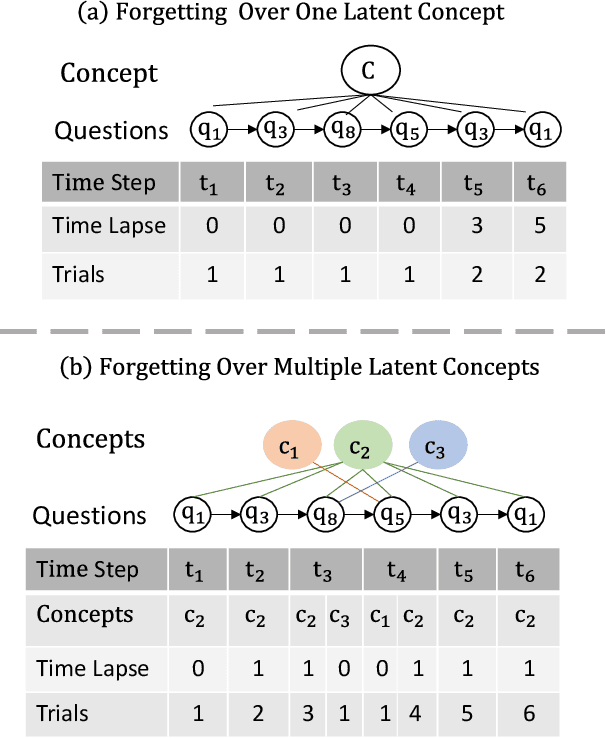

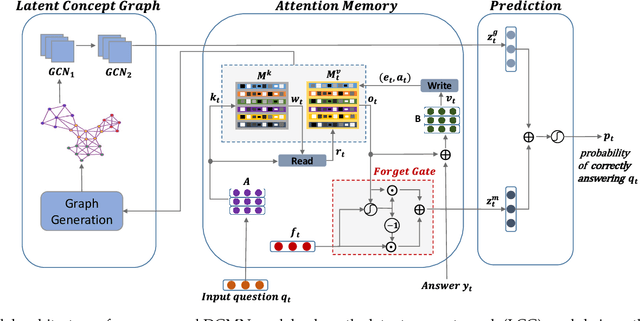

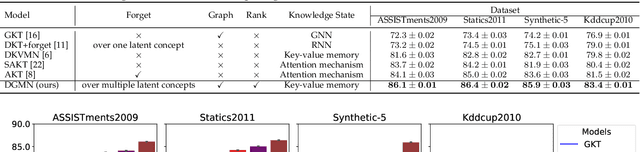

Abstract: Tracing a student's knowledge is vital for tailoring the learning experience. Recent knowledge tracing methods tend to address this need by modelling knowledge state dynamics across learning concepts. However, they still suffer from several inherent challenges, including modelling forgetting behaviours and identifying relationships among latent concepts. To address these challenges, in this paper, we propose a novel knowledge tracing model, namely *Deep Graph Memory Network* (DGMN). In this model, we incorporate a forget gating mechanism into an attention memory structure in order to capture forgetting behaviours dynamically during the knowledge tracing process. In particular, this forget gating mechanism is built upon attention forgetting features over latent concepts, considering their mutual dependencies. Further, this model is capable of learning relationships between latent concepts from a dynamic latent concept graph in light of a student's evolving knowledge states. A comprehensive experimental evaluation has been conducted using four well-established benchmark datasets. The results show that DGMN consistently outperforms the state-of-the-art KT models on all the datasets. The effectiveness of modelling forgetting behaviours and learning latent concept graphs has also been analyzed in our experiments.

Knowledge Tracing with Sequential Key-Value Memory Networks

Oct 29, 2019

Abstract: Can machines trace human knowledge like humans? Knowledge tracing (KT) is a fundamental task in a wide range of applications in education, such as massive open online courses (MOOCs), intelligent tutoring systems, educational games, and learning management systems. It models the dynamics of a student's knowledge states in relation to different learning concepts through their interactions with learning activities. Recently, several attempts have been made to use deep learning models for tackling the KT problem. Although these deep learning models have shown promising results, they have limitations: they either lack the ability to go deeper and trace how specific concepts in a knowledge state are mastered by a student, or fail to capture long-term dependencies in an exercise sequence. In this paper, we address these limitations by proposing a novel deep learning model for knowledge tracing, namely Sequential Key-Value Memory Networks (SKVMN). This model unifies the strengths of the recurrent modelling capacity and the memory capacity of existing deep learning KT models for modelling student learning. We have extensively evaluated our proposed model on five benchmark datasets. The experimental results show that (1) SKVMN outperforms the state-of-the-art KT models on all datasets, (2) SKVMN can better discover the correlation between latent concepts and questions, and (3) SKVMN can trace the knowledge states of students dynamically and leverage sequential dependencies in an exercise sequence for improved prediction accuracy.
