Ghananeel Rotithor

Stitching Dynamic Movement Primitives and Image-based Visual Servo Control

Oct 29, 2021

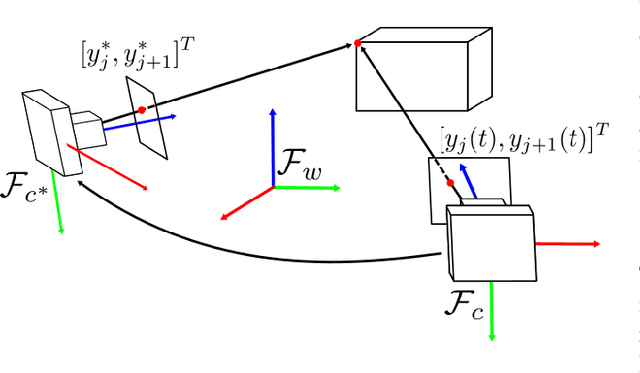

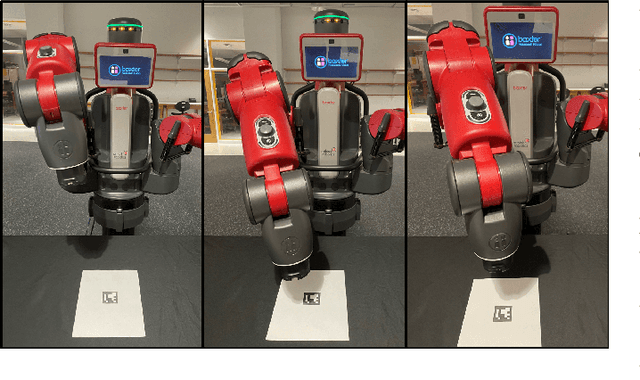

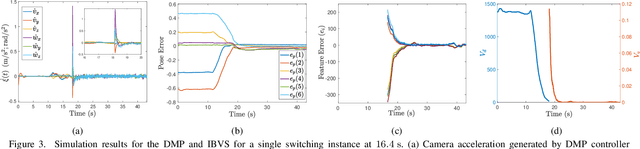

Abstract: Combining perception-based feedback control with Dynamic Movement Primitive (DMP)-based motion generation for a robot's end-effector is a useful solution for many robotic manufacturing tasks. For instance, in an insertion task where the hole or the recipient part is not visible to the eye-in-hand camera, a learning-based movement primitive method can be used to generate the end-effector path. Once the recipient part is in the field of view (FOV), Image-based Visual Servo (IBVS) can be used to control the motion of the robot. Inspired by such applications, this paper presents a generalized control scheme that switches between motion generation using DMPs and IBVS control. To facilitate the design, a common state-space representation for the DMP and IBVS systems is first established. Stability analysis of the switched system using multiple Lyapunov functions shows that the state trajectories converge asymptotically to a bound. The developed method is validated by two real-world experiments using the eye-in-hand configuration on a Baxter research robot.
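The switching idea in this abstract can be illustrated with a minimal sketch. It is not the paper's controller: it assumes a 1-D DMP transformation system with the forcing term set to zero, a reduced IBVS law that commands only the camera's x-y translation for a single point feature at known depth, and a switch driven purely by feature visibility. All function names and gain values here are hypothetical.

```python
def dmp_step(y, z, goal, dt, tau=1.0, alpha=25.0):
    """One Euler step of a 1-D DMP transformation system.

    Assumption: the learned forcing term is zero, so this is only the
    critically damped spring-damper part (beta = alpha / 4).
    """
    beta = alpha / 4.0
    dz = alpha * (beta * (goal - y) - z) / tau
    dy = z / tau
    return y + dy * dt, z + dz * dt


def ibvs_xy_velocity(feature, target, depth, gain=1.0):
    """Reduced IBVS law for one point feature in normalized image coordinates.

    Assumption: only camera x-y translation is commanded. For that motion the
    point-feature interaction matrix reduces to -(1/depth) * I, so choosing
    v = gain * depth * e makes the image error e decay as e_dot = -gain * e.
    """
    ex = feature[0] - target[0]
    ey = feature[1] - target[1]
    return (gain * depth * ex, gain * depth * ey)


def select_mode(feature_visible):
    """Hypothetical switching rule: IBVS when the feature is in view, else DMP."""
    return "ibvs" if feature_visible else "dmp"


def dmp_rollout(y0, goal, n_steps=2000, dt=0.001):
    """Integrate the DMP from y0 toward goal and return the final position."""
    y, z = y0, 0.0
    for _ in range(n_steps):
        y, z = dmp_step(y, z, goal, dt)
    return y
```

In a full system, `select_mode` would be evaluated every control cycle, and the stability of the resulting switched system is what the paper's multiple-Lyapunov-function analysis addresses; the sketch makes no such guarantee.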

Extension of Full and Reduced Order Observers for Image-based Depth Estimation using Concurrent Learning

Aug 12, 2020

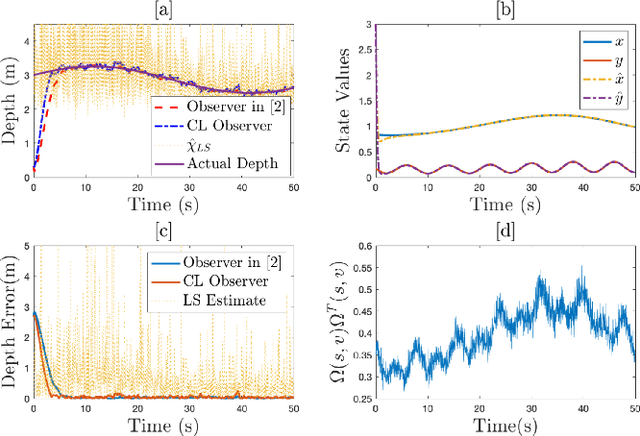

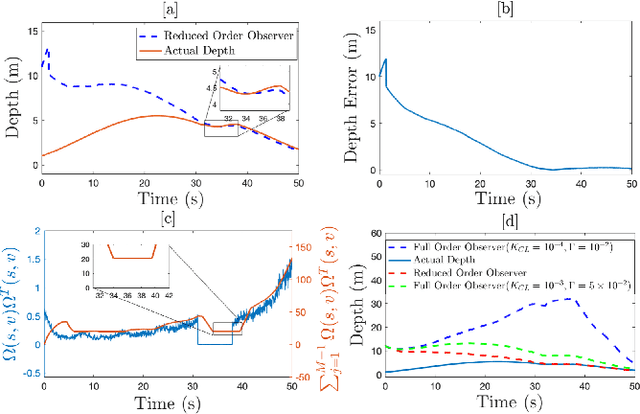

Abstract: In this paper, concurrent learning (CL)-based full and reduced order observers for a perspective dynamical system (PDS) are developed. The PDS is a widely used model for estimating the depth of a feature point from a sequence of camera images. Building on recent progress in CL for parameter estimation in adaptive control, a state observer is developed for the PDS model, where the inverse depth appears as a time-varying parameter in the dynamics. Data recorded over a sliding time window in the recent past is used in the CL term to design the full and reduced order state observers. A Lyapunov-based stability analysis is carried out to prove the uniformly ultimately bounded (UUB) stability of the developed observers. Simulation results are presented to validate the accuracy and convergence of the developed observers in terms of convergence time, root mean square error (RMSE), and mean absolute percentage error (MAPE). Real-world depth estimation experiments are performed on a 7-DoF manipulator with an eye-in-hand configuration to demonstrate the performance of the observers using the aforementioned metrics.
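The two error metrics named in this abstract have standard definitions, sketched below for a sequence of true and estimated depths. The function names are hypothetical, and the paper may compute the metrics over a specific time window that this sketch does not model.

```python
import math


def rmse(true_values, estimates):
    """Root mean square error between two equal-length sequences."""
    n = len(true_values)
    squared = [(t - e) ** 2 for t, e in zip(true_values, estimates)]
    return math.sqrt(sum(squared) / n)


def mape(true_values, estimates):
    """Mean absolute percentage error, in percent.

    Assumes every true value is nonzero, which holds for the depth of a
    point in front of the camera.
    """
    n = len(true_values)
    ratios = [abs(t - e) / abs(t) for t, e in zip(true_values, estimates)]
    return 100.0 * sum(ratios) / n
```

RMSE is expressed in the same units as depth, while MAPE is scale-free, which is presumably why both are reported alongside convergence time.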
