Ashwin P. Dani

Constrained Parameter Update Law for Adaptive Control

Apr 28, 2025

Abstract: In this paper, a constrained parameter update law is derived in the context of adaptive control. The parameter update law is based on a constrained optimization technique in which a Lagrangian is formulated to incorporate the constraints on the parameters using an inverse barrier function. The constrained parameter update law is used to develop an adaptive tracking controller, and the overall stability of the adaptive controller together with the constrained parameter update law is shown using Lyapunov analysis and developments in the stability of constrained primal-dual dynamics. The constrained parameter update law is tested in simulation for its ability to keep the parameters within the constraints and to converge to the true parameters.
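To illustrate the inverse barrier idea mentioned in the abstract, here is a minimal sketch. It is not the paper's update law: it omits the Lagrangian and the primal-dual dynamics, and simply runs a gradient flow on a squared prediction error plus an inverse barrier penalty for a single scalar parameter. The regressor `phi`, the bounds `lo` and `hi`, the gains `gamma` and `mu`, and the function names are all assumptions made for this example.

```python
import math


def barrier_grad(theta, lo, hi):
    """Derivative of the inverse barrier 1/(theta - lo) + 1/(hi - theta)."""
    return -1.0 / (theta - lo) ** 2 + 1.0 / (hi - theta) ** 2


def simulate(theta_true, theta0=1.0, lo=0.5, hi=3.0,
             gamma=1.0, mu=0.01, dt=1e-3, steps=20000):
    """Euler-integrate a barrier-penalized gradient update (illustrative only).

    The estimate follows
        theta_dot = gamma * (phi * err - mu * dB/dtheta),
    where err = y - phi * theta is the prediction error and B is the
    inverse barrier. All signals and gains here are hypothetical.
    """
    theta = theta0
    history = [theta]
    for k in range(steps):
        t = k * dt
        phi = 1.5 + math.sin(t)      # assumed regressor, bounded away from zero
        y = phi * theta_true         # noise-free measurement
        err = y - phi * theta        # prediction error
        theta_dot = gamma * (phi * err - mu * barrier_grad(theta, lo, hi))
        theta += dt * theta_dot      # small step keeps the estimate inside (lo, hi)
        history.append(theta)
    return history


if __name__ == "__main__":
    inside = simulate(theta_true=2.0)    # true value lies inside the bounds
    outside = simulate(theta_true=3.5)   # true value violates the upper bound
    print("final estimate, feasible truth:  ", inside[-1])
    print("final estimate, infeasible truth:", outside[-1])
```

When the true value lies inside the bounds, the estimate settles close to it, with a small bias that shrinks as `mu` decreases. When the true value lies outside, the barrier term grows without bound near the constraint and holds the estimate in the interior. This sketch relies on a small Euler step; a large step could jump across the barrier.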

Adaptive Actor-Critic Based Optimal Regulation for Drift-Free Uncertain Nonlinear Systems

Jun 13, 2024

Abstract: In this paper, a continuous-time adaptive actor-critic reinforcement learning (RL) controller is developed for drift-free nonlinear systems. Practical examples of such systems are image-based visual servoing (IBVS) and wheeled mobile robots (WMR), where the system dynamics include a parametric uncertainty in the control effectiveness matrix and have no drift term. The uncertainty in the input term poses a challenge for developing a continuous-time RL controller using existing methods. In this paper, an actor-critic, or synchronous policy iteration (PI)-based, RL controller is presented with a concurrent learning (CL)-based parameter update law for estimating the unknown parameters of the control effectiveness matrix. An infinite-horizon value function minimization objective is achieved by regulating the current states to the desired states with near-optimal control effort. The proposed controller guarantees closed-loop stability, and simulation results using IBVS and WMR examples validate the proposed theory.
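The concurrent learning idea named in the abstract can be sketched for the simplest drift-free case, a scalar system x_dot = g * u with unknown control effectiveness g. This is an illustrative assumption, not the paper's update law: it has no actor-critic component, it assumes the state derivative is available for the recorded data, and the history stack, gain, and function name are hypothetical.

```python
def concurrent_learning_estimate(g_true=2.0, g_hat0=0.0, gain=0.5,
                                 dt=1e-2, steps=2000):
    """Estimate g in x_dot = g * u from a fixed stack of recorded data.

    The estimate is driven by the prediction errors on all stored
    (u_j, x_dot_j) pairs at once:
        g_hat_dot = gain * sum_j u_j * (x_dot_j - g_hat * u_j).
    """
    recorded_inputs = [0.5, -1.0, 1.5]                     # assumed history stack
    stack = [(u, g_true * u) for u in recorded_inputs]     # noise-free x_dot samples

    g_hat = g_hat0
    for _ in range(steps):
        g_hat_dot = gain * sum(u * (x_dot - g_hat * u) for u, x_dot in stack)
        g_hat += dt * g_hat_dot                            # Euler step
    return g_hat


if __name__ == "__main__":
    print("estimated control effectiveness:", concurrent_learning_estimate())
```

Because the stored inputs are not all zero, the recorded data alone make the estimation error decay exponentially, without requiring the current input to be persistently exciting. In the matrix case the analogous requirement is a rank condition on the stored regressors.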

Stitching Dynamic Movement Primitives and Image-based Visual Servo Control

Oct 29, 2021

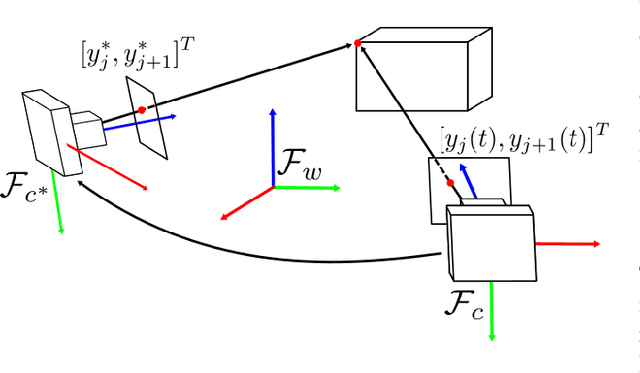

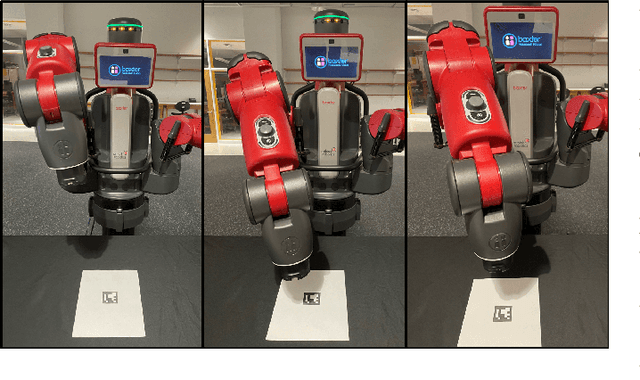

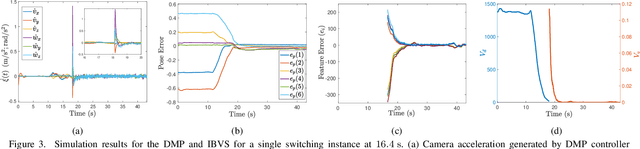

Abstract: Utilizing perception for feedback control in combination with Dynamic Movement Primitive (DMP)-based motion generation for a robot's end-effector control is a useful solution for many robotic manufacturing tasks. For instance, during an insertion task in which the hole or the recipient part is not visible in the eye-in-hand camera, a learning-based movement primitive method can be used to generate the end-effector path. Once the recipient part is in the field of view (FOV), Image-based Visual Servo (IBVS) can be used to control the motion of the robot. Inspired by such applications, this paper presents a generalized control scheme that switches between motion generation using DMPs and IBVS control. To facilitate the design, a common state space representation for the DMP and the IBVS systems is first established. Stability analysis of the switched system using multiple Lyapunov functions shows that the state trajectories converge to a bound asymptotically. The developed method is validated by two real-world experiments using the eye-in-hand configuration on a Baxter research robot.
