Georgios Georgalis

UQ of 2D Slab Burner DNS: Surrogates, Uncertainty Propagation, and Parameter Calibration

Nov 09, 2024

Abstract: The goal of this paper is to demonstrate and address challenges related to all aspects of performing a complete uncertainty quantification (UQ) analysis of a complicated physics-based simulation like a 2D slab burner direct numerical simulation (DNS). The UQ framework includes the development of data-driven surrogate models, propagation of parametric uncertainties to the fuel regression rate (the primary quantity of interest), and Bayesian calibration of critical parameters influencing the regression rate using experimental data. Specifically, the parameters calibrated include the latent heat of sublimation and a chemical reaction temperature exponent. Two surrogate models, a Gaussian Process (GP) and a Hierarchical Multiscale Surrogate (HMS), were constructed using an ensemble of 64 simulations generated via Latin Hypercube sampling. Both models exhibited comparable performance during cross-validation. However, the HMS was more stable due to its ability to handle multiscale effects, in contrast with the GP, which was very sensitive to kernel choice. Analysis revealed that the surrogates do not accurately predict all spatial locations of the slab burner as-is. Subsequent Bayesian calibration of the physical parameters against experimental observations resulted in regression rate predictions that align more closely with experimental observations in specific regions. This study highlights the importance of surrogate model selection and parameter calibration in quantifying uncertainty in predictions of fuel regression rates in complex combustion systems.
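As a rough illustration of how a 64-member Latin Hypercube design like the one mentioned in the abstract might be drawn, the sketch below uses only the Python standard library. The two-parameter design, the parameter ranges, and the names `latin_hypercube` and `BOUNDS` are assumptions made for illustration; the abstract does not state the actual ranges or the number of sampled parameters.

```python
import random

def latin_hypercube(n_samples, bounds, seed=0):
    """Draw n_samples points so that, in every dimension, each of the
    n_samples equal-width strata contains exactly one point."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One uniform draw inside each stratum of the unit interval.
        unit = [(k + rng.random()) / n_samples for k in range(n_samples)]
        # Shuffle so strata are paired randomly across dimensions.
        rng.shuffle(unit)
        columns.append([low + u * (high - low) for u in unit])
    # Transpose: each row is one parameter set for one simulation.
    return [tuple(col[i] for col in columns) for i in range(n_samples)]

# Hypothetical ranges for the two calibrated parameters (illustrative only).
BOUNDS = [
    (0.5e6, 1.5e6),  # latent heat of sublimation, J/kg (assumed range)
    (0.0, 1.0),      # reaction temperature exponent (assumed range)
]

design = latin_hypercube(64, BOUNDS, seed=42)
```

Each row of `design` would correspond to one simulation input; the stratification is what distinguishes this from plain uniform random sampling.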

An Ensemble-Based Deep Framework for Estimating Thermo-Chemical State Variables from Flamelet Generated Manifolds

Nov 25, 2022

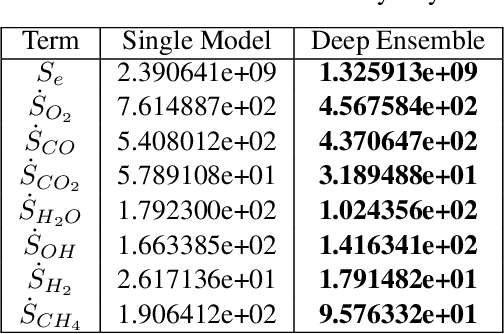

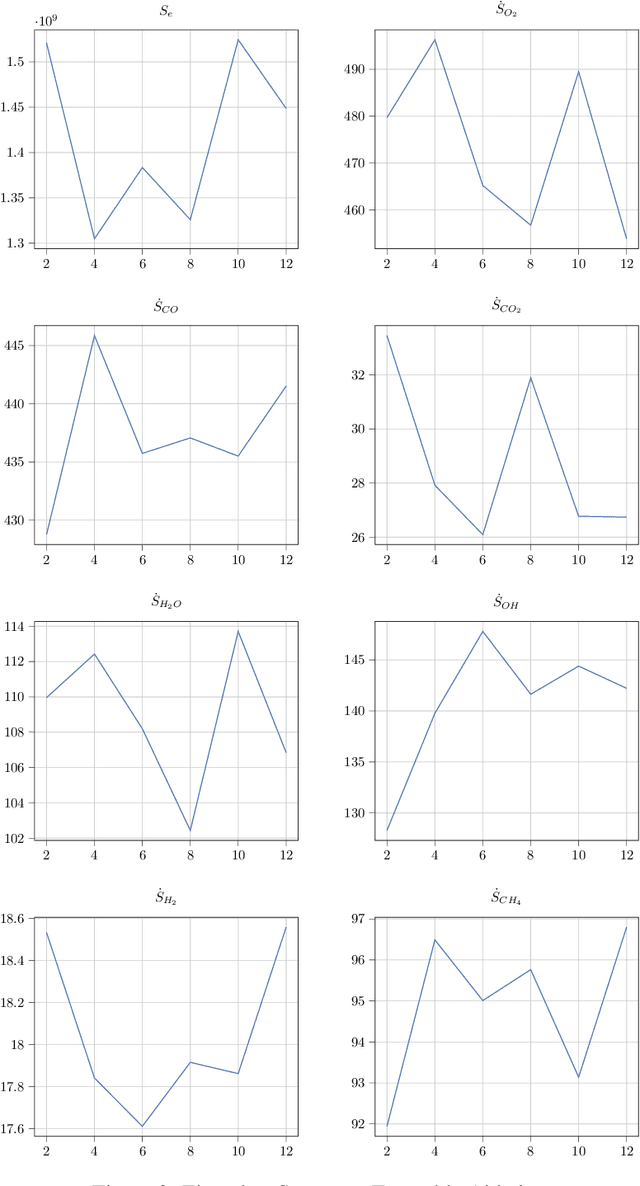

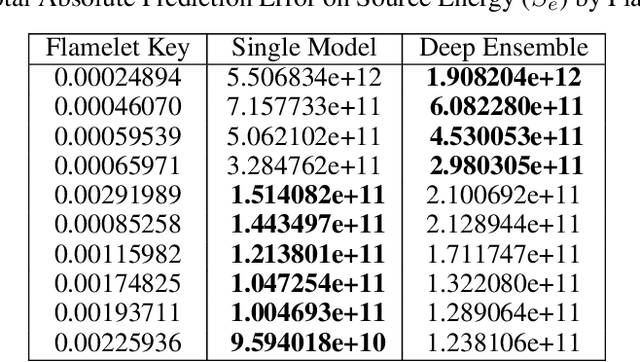

Abstract: Complete computation of turbulent combustion flow involves two separate steps: mapping reaction kinetics to low-dimensional manifolds and looking up this approximate manifold during CFD run-time to estimate the thermo-chemical state variables. In our previous work, we showed that using a deep architecture to learn the two steps jointly, instead of separately, is 73% more accurate at estimating the source energy, a key state variable, compared to benchmarks and can be integrated within a DNS turbulent combustion framework. In their natural form, such deep architectures do not allow for uncertainty quantification of the quantities of interest: the source energy and key species source terms. In this paper, we expand on such architectures, specifically ChemTab, by introducing deep ensembles to approximate the posterior distribution of the quantities of interest. We investigate two strategies of creating these ensemble models: one that keeps the flamelet origin information (Flamelets strategy) and one that ignores the origin and considers all the data independently (Points strategy). To train these models we used flamelet data generated by the GRI-Mech 3.0 methane mechanism, which consists of 53 chemical species and 325 reactions. Our results demonstrate that the Flamelets strategy is superior in terms of the absolute prediction error for the quantities of interest, but is reliant on the types of flamelets used to train the ensemble. The Points strategy is best at capturing the variability of the quantities of interest, independent of the flamelet types. We conclude that, overall, ChemTab Deep Ensembles allow for a more accurate representation of the source energy and key species source terms, compared to the model without these modifications.
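The way a deep ensemble turns several independently trained models into a predictive mean and spread can be sketched as follows. This is a minimal illustration, not the ChemTab implementation: the function name `ensemble_summary` and the numeric predictions are made up, and the member models themselves are assumed to have been trained elsewhere.

```python
import statistics

def ensemble_summary(member_predictions):
    """member_predictions[m][i] is member m's prediction for input i.
    Returns one (mean, sample standard deviation) pair per input."""
    per_input = zip(*member_predictions)  # regroup predictions by input
    return [(statistics.fmean(p), statistics.stdev(p)) for p in per_input]

# Made-up predictions from three hypothetical ensemble members
# for three inputs (e.g. a source term at three states).
preds = [
    [1.00, 2.10, 0.50],
    [1.10, 1.90, 0.50],
    [0.90, 2.00, 0.50],
]

summary = ensemble_summary(preds)
```

The spread across members serves as the uncertainty estimate: where members agree (the third input here) the standard deviation is zero, and where they disagree it grows.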

Combined Data and Deep Learning Model Uncertainties: An Application to the Measurement of Solid Fuel Regression Rate

Oct 25, 2022

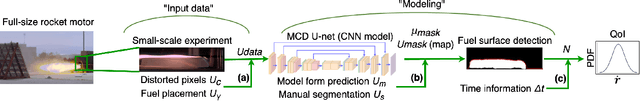

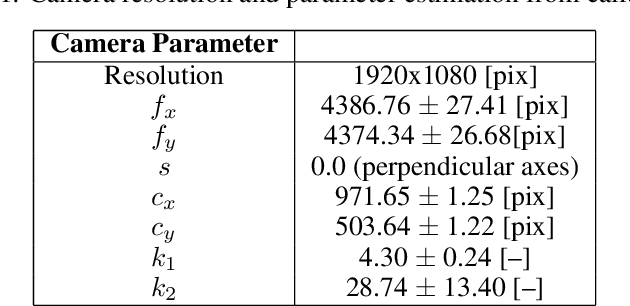

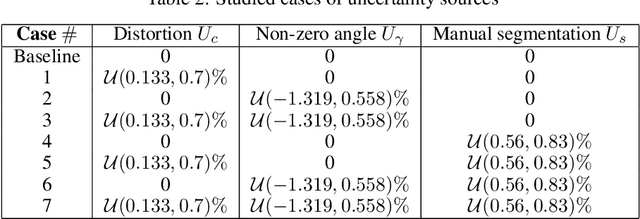

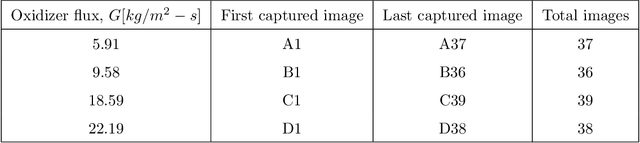

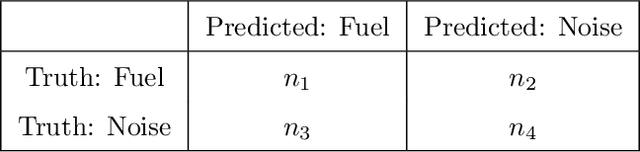

Abstract: In complex physical process characterization, such as the measurement of the regression rate for solid hybrid rocket fuels, where both the observation data and the model used have uncertainties originating from multiple sources, combining these in a systematic way for quantities of interest (QoI) remains a challenge. In this paper, we present a forward propagation uncertainty quantification (UQ) process to produce a probabilistic distribution for the observed regression rate $\dot{r}$. We characterized two input data uncertainty sources from the experiment (the distortion from the camera $U_c$ and the non-zero angle fuel placement $U_\gamma$), the prediction and model form uncertainty from the deep neural network ($U_m$), as well as the variability from the manually segmented images used for training it ($U_s$). We conducted seven case studies on combinations of these uncertainty sources with the model form uncertainty. The main contribution of this paper is the investigation and inclusion of the experimental image data uncertainties involved, and how to include them in a workflow when the QoI is the result of multiple sequential processes.
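Forward propagation of several uncertainty sources to a single quantity can be sketched with plain Monte Carlo sampling. Everything in the block below is hypothetical: the measurement model, the Gaussian forms, and all numeric values are assumptions chosen for illustration and are not taken from the paper. Only three of the named sources are mimicked (camera distortion, placement angle, and model error).

```python
import math
import random
import statistics

def sample_regression_rate(rng):
    """One Monte Carlo draw from a hypothetical measurement model."""
    delta_px = 40.0   # fuel surface displacement in pixels (assumed)
    mm_per_px = 0.05  # nominal image scale (assumed)
    dt = 2.0          # time between frames in seconds (assumed)
    e_c = rng.gauss(0.0, 0.01)                 # relative camera distortion
    gamma = math.radians(rng.gauss(0.0, 1.0))  # fuel placement angle
    e_m = rng.gauss(0.0, 0.02)                 # additive model error, mm/s
    # Scale the displacement, correct for the tilt, then add model error.
    geometric = delta_px * mm_per_px * (1.0 + e_c) / (math.cos(gamma) * dt)
    return geometric + e_m

rng = random.Random(0)
draws = [sample_regression_rate(rng) for _ in range(20000)]
r_mean = statistics.fmean(draws)
r_std = statistics.stdev(draws)
```

The list `draws` is an empirical distribution for the regression rate; switching individual sources on or off in `sample_regression_rate` would mimic case studies over combinations of sources.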

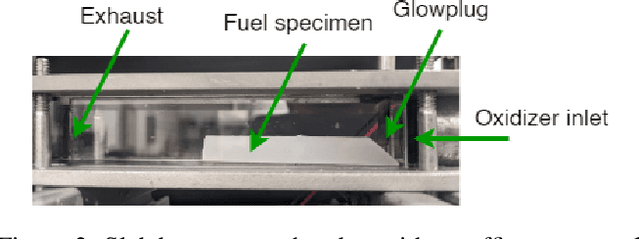

Measurement of Hybrid Rocket Solid Fuel Regression Rate for a Slab Burner using Deep Learning

Aug 25, 2021

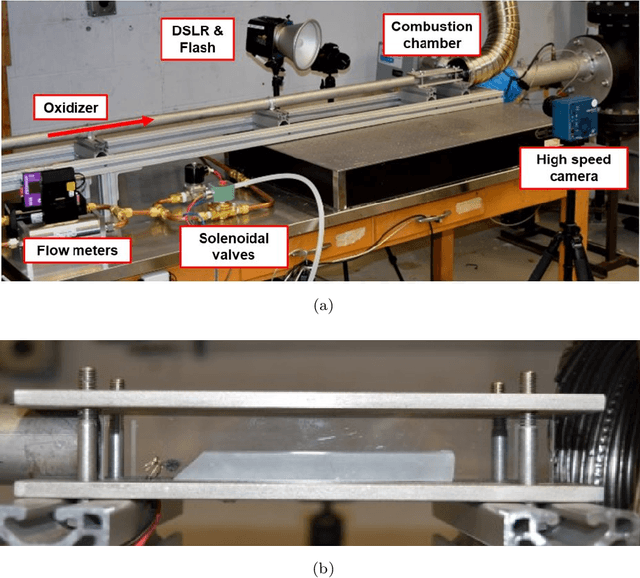

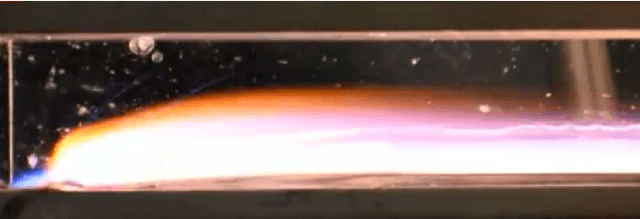

Abstract: This study presents an imaging-based deep learning tool to measure the fuel regression rate in a 2D slab burner experiment for hybrid rocket fuels. The slab burner experiment is designed to verify mechanistic models of reacting boundary layer combustion in hybrid rockets by the measurement of fuel regression rates. A DSLR camera with a high-intensity flash is used to capture images throughout the burn, and the images are then used to find the fuel boundary to calculate the regression rate. A U-net convolutional neural network architecture is explored to segment the fuel from the experimental images. A Monte-Carlo Dropout process is used to quantify the regression rate uncertainty produced from the network. The U-net computed regression rates are compared with values from other techniques from literature and show errors below 10%. An oxidizer flux dependency study is performed and shows the U-net predictions of regression rates are accurate and independent of the oxidizer flux, when the images in the training set are not over-saturated. Training with monochrome images is explored and is not successful at predicting the fuel regression rate from images with high noise. The network is superior at filtering out noise introduced by soot, pitting, and wax deposition on the chamber glass as well as the flame when compared to traditional image processing techniques, such as threshold binary conversion and spatial filtering. U-net consistently provides low-error image segmentations to allow accurate computation of the regression rate of the fuel.
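The idea behind Monte-Carlo Dropout, keeping dropout active at inference and repeating the forward pass to obtain a spread of outputs, can be shown on a toy scalar network. This is a sketch under stated assumptions, not the U-net from the study: the weights `W1` and `W2`, the dropout rate, and the function names are all hypothetical.

```python
import random
import statistics

# Hypothetical fixed weights of a tiny one-hidden-layer network.
W1 = [0.8, -0.5, 0.3, 0.9]
W2 = [0.6, 0.4, -0.7, 0.5]

def forward(x, rng, p_drop=0.2):
    """One stochastic forward pass with dropout left ON at inference."""
    out = 0.0
    for w1, w2 in zip(W1, W2):
        h = max(0.0, w1 * x)  # ReLU hidden unit
        if rng.random() >= p_drop:
            # Inverted dropout: rescale kept units so the expected
            # output matches the deterministic network.
            out += w2 * h / (1.0 - p_drop)
    return out

def mc_dropout(x, n_passes=2000, seed=0):
    """Repeat the stochastic pass and summarise the output samples."""
    rng = random.Random(seed)
    samples = [forward(x, rng) for _ in range(n_passes)]
    return statistics.fmean(samples), statistics.stdev(samples)

mean, std = mc_dropout(1.0)
```

The standard deviation across passes is the uncertainty estimate. In a segmentation setting the same repetition would be applied per pixel, and the spread would then carry through to the computed regression rate.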
