George Stamatescu

Dynamic mean field programming

Jun 10, 2022

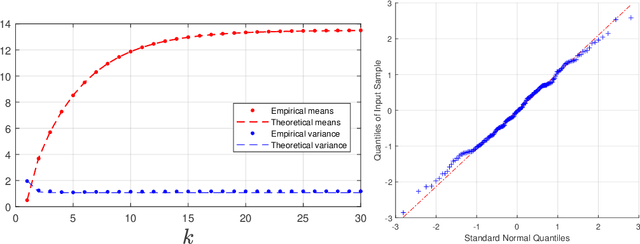

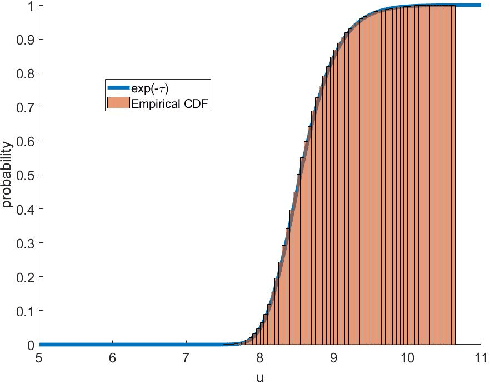

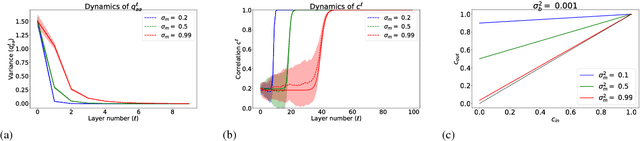

Abstract: A dynamic mean field theory is developed for model-based Bayesian reinforcement learning in the large state space limit. In an analogy with the statistical physics of disordered systems, the transition probabilities are interpreted as couplings and the value functions as deterministic spins, so the sampled transition probabilities are treated as quenched random variables. The results reveal that, under standard assumptions, the posterior over Q-values is asymptotically independent and Gaussian across state-action pairs for infinite horizon problems. The finite horizon case exhibits the same behaviour across state-action pairs at each time, but has an additional correlation across time for each state-action pair. The results also hold for policy evaluation. The Gaussian statistics can be computed from a set of coupled mean field equations derived from the Bellman equation, which we call dynamic mean field programming (DMFP). For Q-value iteration, approximate equations are obtained by appealing to extreme value theory, and closed-form expressions are found in the independent and identically distributed case. The Lyapunov stability of these closed-form equations is studied.
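To make the object of study concrete, the sketch below estimates the posterior over Q-values by brute-force Monte Carlo: it repeatedly samples a transition model, holds it fixed (quenched) while running Q-value iteration, and then summarises the resulting Q-values for one state-action pair. This is not the paper's DMFP equations, only an illustration of the distribution those equations describe. The flat Dirichlet posterior over transitions, the known uniform rewards, the problem sizes, and all function names are assumptions made for the example.

```python
import random
import statistics


def sample_dirichlet(alpha, rng):
    """Draw one probability vector from Dirichlet(alpha) via normalised gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def q_value_iteration(P, R, gamma, n_iters=100):
    """Infinite-horizon Q-value iteration for one fixed (quenched) model P[s][a][s']."""
    n_states, n_actions = len(R), len(R[0])
    Q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(n_iters):
        V = [max(row) for row in Q]  # greedy state values
        Q = [
            [R[s][a] + gamma * sum(p * v for p, v in zip(P[s][a], V))
             for a in range(n_actions)]
            for s in range(n_states)
        ]
    return Q


def posterior_q_samples(n_states=10, n_actions=2, gamma=0.9, n_samples=50, seed=0):
    """Sample transition models from an assumed flat Dirichlet posterior and solve each."""
    rng = random.Random(seed)
    # Assumption: rewards are known and drawn once, uniformly in [0, 1).
    R = [[rng.random() for _ in range(n_actions)] for _ in range(n_states)]
    alpha = [1.0] * n_states  # assumption: flat Dirichlet over next states
    samples = []
    for _ in range(n_samples):
        P = [[sample_dirichlet(alpha, rng) for _ in range(n_actions)]
             for _ in range(n_states)]
        samples.append(q_value_iteration(P, R, gamma))
    return samples


samples = posterior_q_samples()
q00 = [Q[0][0] for Q in samples]  # posterior samples for state 0, action 0
print("mean:", statistics.mean(q00), "std:", statistics.stdev(q00))
```

Increasing `n_states` and plotting a histogram of `q00` is one way to check empirically whether the samples look Gaussian, as the abstract states for the large state space limit.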

Signal propagation in continuous approximations of binary neural networks

Feb 01, 2019

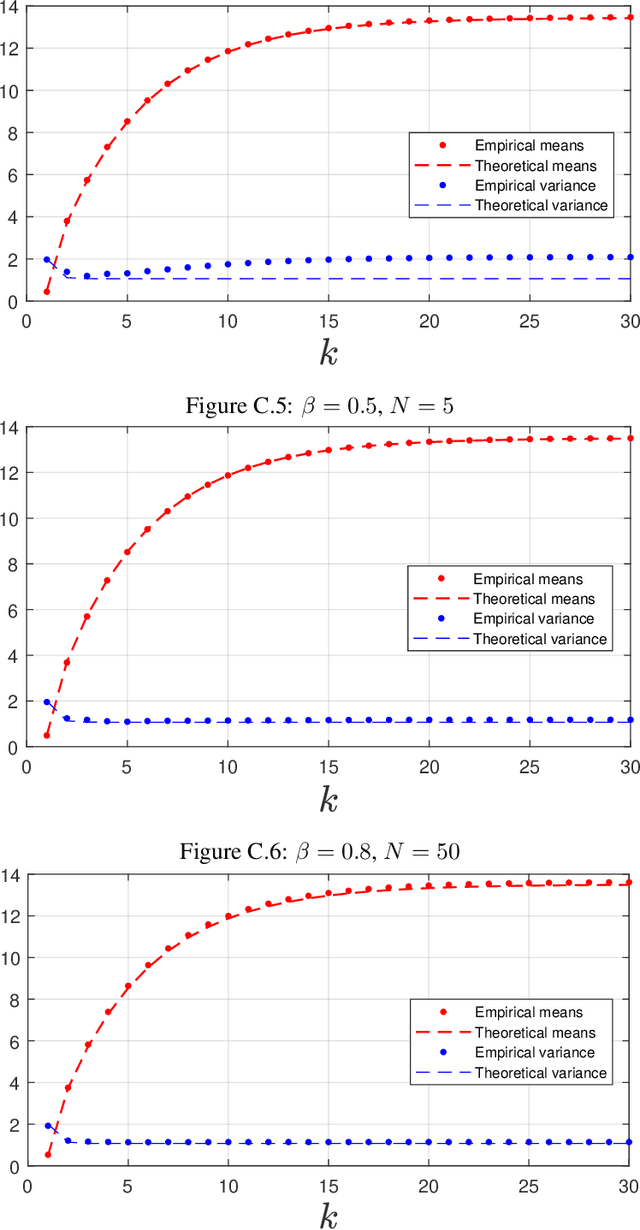

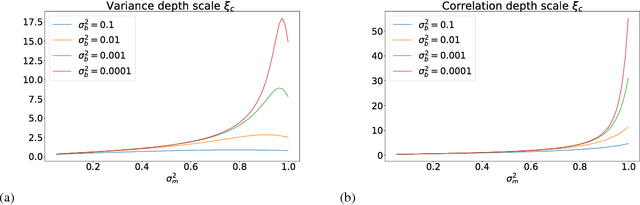

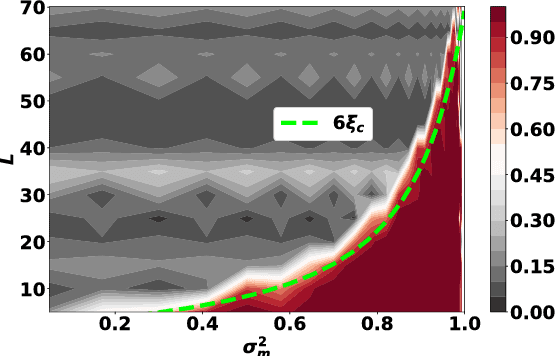

Abstract: The training of stochastic neural network models with binary ($\pm1$) weights and activations via a deterministic and continuous surrogate network is investigated. We derive, using mean field theory, a set of scalar equations describing how input signals propagate through the surrogate network. The equations reveal that these continuous models exhibit an order-to-chaos transition, and that depth scales exist which limit the maximum trainable depth. Moreover, we predict theoretically and confirm numerically that common weight initialization schemes used in standard continuous networks, when applied to the mean values of the stochastic binary weights, yield poor training performance. This study shows that, contrary to common intuition, the means of the stochastic binary weights should be initialised close to $\pm 1$ for deeper networks to be trainable.

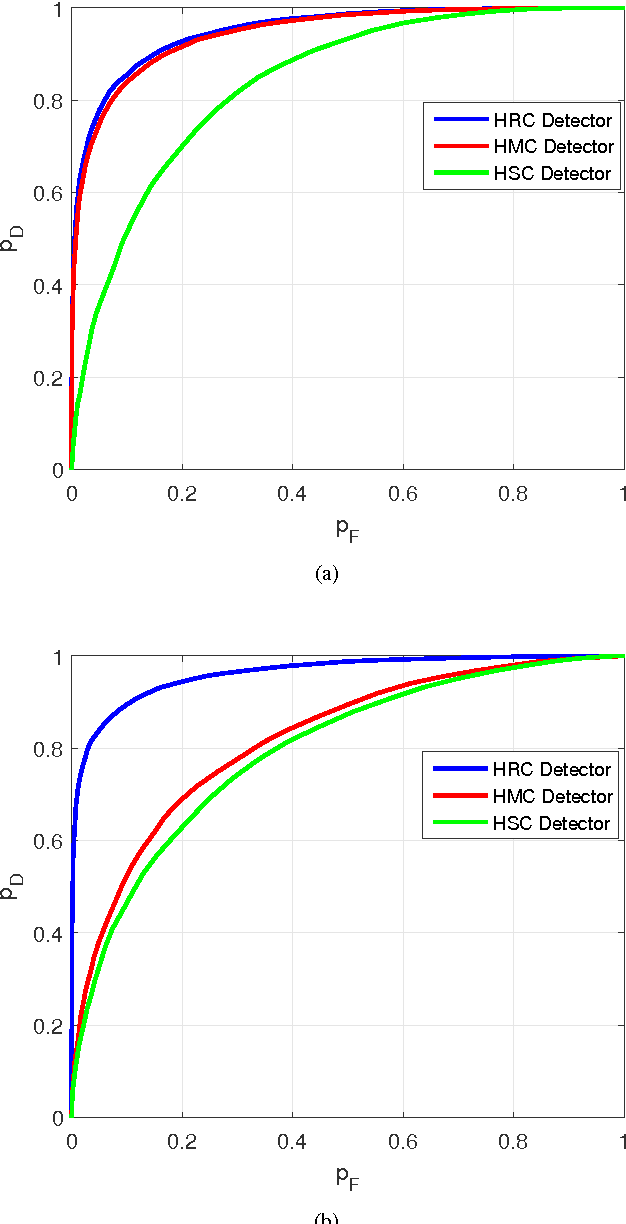

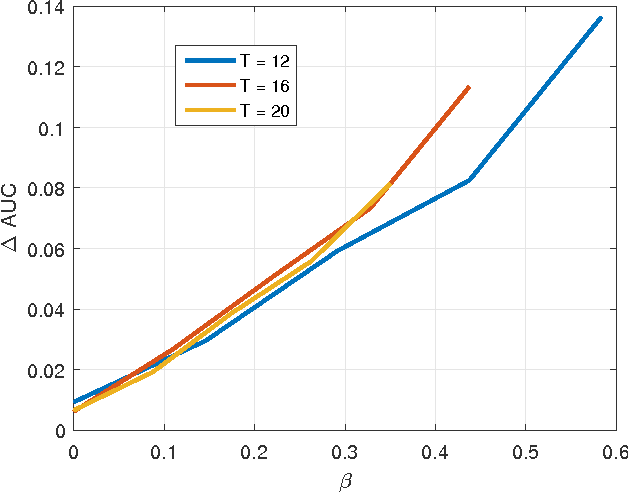

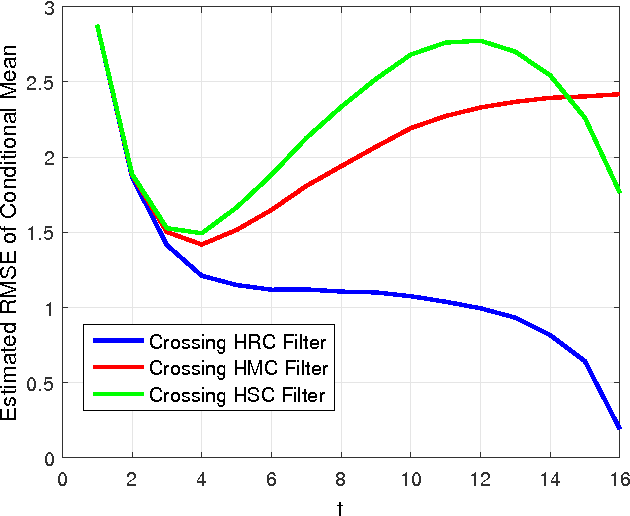

Track Extraction with Hidden Reciprocal Chain Models

May 13, 2016

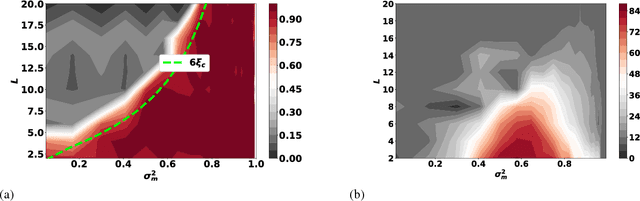

Abstract: This paper develops Bayesian track extraction algorithms for targets modelled as hidden reciprocal chains (HRC). HRC are a class of finite-state random process models that generalise the familiar hidden Markov chains (HMC). HRC are able to model the "intention" of a target to proceed from a given origin to a destination, behaviour which cannot be properly captured by an HMC. While Bayesian estimation problems for HRC have previously been studied, this paper focusses principally on the problem of track extraction, of which the primary task is confirming target existence in a set of detections obtained from thresholding sensor measurements. Simulation examples are presented which show that the additional model information contained in an HRC improves detection performance when compared to HMC models.
