George Nousias

Efficient UAV Coverage in Large Convex Quadrilateral Areas with Elliptical Footprints

Feb 18, 2025

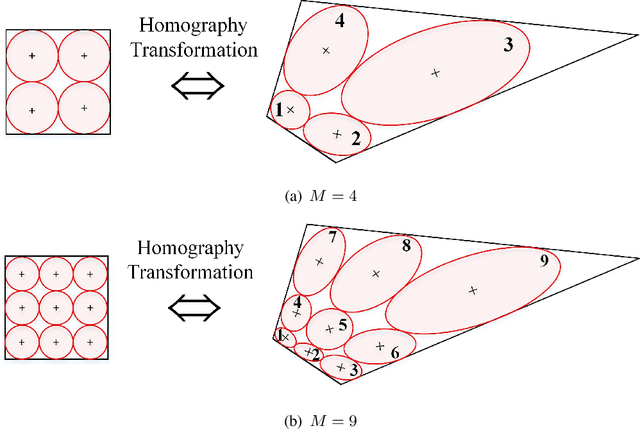

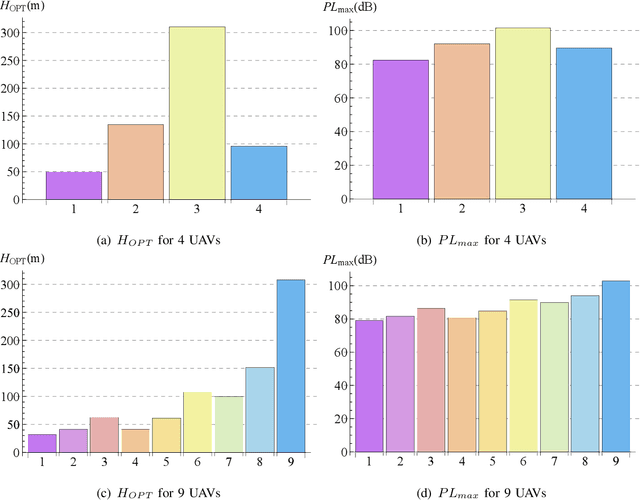

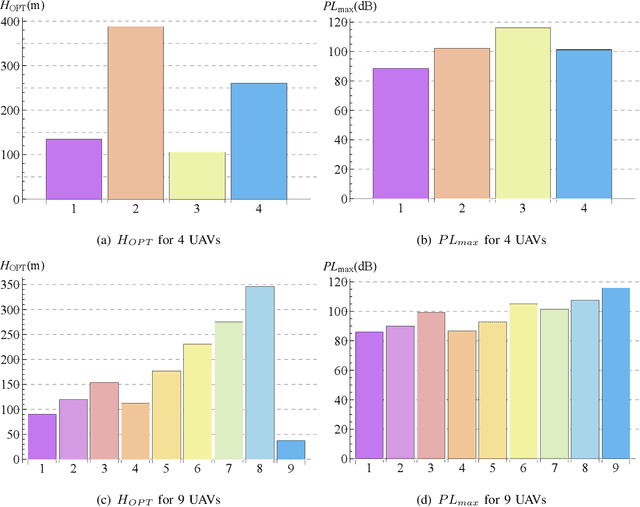

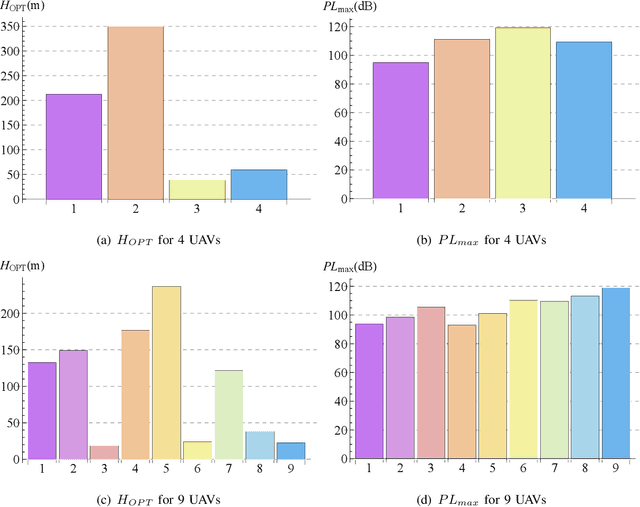

Abstract: Unmanned Aerial Vehicles (UAVs) have gained significant attention for improving wireless communication, especially in emergencies or as a complement to existing cellular infrastructure. This letter addresses the problem of efficiently covering a large convex quadrilateral using multiple UAVs, where each UAV generates elliptical coverage footprints based on its altitude and antenna tilt. The challenge is approached using circle-packing techniques within a unit square to arrange UAVs in an optimal configuration. Subsequently, a homography transformation is applied to map the unit square onto the quadrilateral area, ensuring that the UAVs' elliptical footprints cover the entire region. Numerical simulations demonstrate the effectiveness of the proposed method, providing insight into coverage density and optimal altitude configurations for different placement scenarios. The results highlight the scalability and potential for improving UAV-based communication systems, focusing on maximizing coverage efficiency in large areas with irregular shapes.
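The square-to-quadrilateral mapping mentioned in the abstract can be illustrated with a minimal sketch. It assumes the standard four-point homography construction (solving an 8x8 linear system) and is not taken from the letter itself; the function names `square_to_quad_homography` and `apply_homography`, the example quadrilateral, and the 2x2 grid of centers are all hypothetical choices made for illustration.

```python
import numpy as np

def square_to_quad_homography(quad):
    """Return the 3x3 homography H that maps the unit-square corners
    (0,0), (1,0), (1,1), (0,1) to the four corners in `quad` (same order).
    Assumes no three corners of `quad` are collinear."""
    src = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    A, b = [], []
    for (x, y), (u, v) in zip(src, quad):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)  # fix the last entry to 1

def apply_homography(H, points):
    """Map an (N, 2) array of points through H using homogeneous coordinates."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical example: a convex quadrilateral and a 2x2 grid of circle centers.
quad = [(0.0, 0.0), (4.0, 0.0), (5.0, 3.0), (1.0, 2.0)]
H = square_to_quad_homography(quad)
centers = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75)]
uav_positions = apply_homography(H, centers)
```

Under such a map, circles packed in the square generally become ellipses in the quadrilateral, which is consistent with the elliptical footprints described above. How the letter derives altitude and tilt from those ellipses is not covered by this sketch.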

$H$-RANSAC, an algorithmic variant for Homography image transform from featureless point sets: application to video-based football analytics

Oct 07, 2023

Abstract: Estimating the homography matrix between two images has various applications, such as image stitching or image mosaicing and spatial information retrieval from multiple camera views, but has proven to be a complicated problem, especially in cases of radically different camera poses and zoom factors. Many relevant approaches have been proposed, utilizing direct, feature-based, or deep learning methodologies. In this paper, we propose a generalized RANSAC algorithm, $H$-RANSAC, to retrieve homography image transformations from sets of points without descriptive local feature vectors and point pairing. We allow the points to be optionally labelled in two classes. We propose a robust criterion that rejects implausible point selections before each iteration of RANSAC, based on the type of the quadrilaterals formed by random point pair selection (convex or concave and (non)-self-intersecting). A similar post-hoc criterion that rejects implausible homography transformations is included at the end of each iteration. The expected maximum iterations of $H$-RANSAC are derived for different probabilities of success, according to the number of points per image and per class, and the percentage of outliers. The proposed methodology is tested on a large dataset of images acquired by 12 cameras during real football matches, where radically different views at each timestamp are to be matched. Comparisons with state-of-the-art implementations of RANSAC combined with classic and deep learning image salient point detection indicate the superiority of the proposed $H$-RANSAC in terms of average reprojection error and number of successfully processed pairs of frames, rendering it the method of choice in cases of image homography alignment with a few tens of points, where local features are not available or not descriptive enough. The implementation of $H$-RANSAC is available at https://github.com/gnousias/H-RANSAC
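The abstract refers to the type of quadrilateral formed by four selected points (convex, concave, or self-intersecting) but does not spell out the rejection rule. The sketch below is only a generic geometric classifier based on orientation tests, not the authors' criterion; the function names are hypothetical, and degenerate inputs (three collinear points) are not treated specially.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); its sign gives the turn direction."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def segments_cross(p1, p2, q1, q2):
    """True if segments p1p2 and q1q2 properly intersect (general position)."""
    return (cross(p1, p2, q1) * cross(p1, p2, q2) < 0
            and cross(q1, q2, p1) * cross(q1, q2, p2) < 0)

def classify_quadrilateral(a, b, c, d):
    """Classify the quadrilateral whose vertices are visited in order a, b, c, d."""
    # A quadrilateral self-intersects when a pair of opposite edges cross.
    if segments_cross(a, b, c, d) or segments_cross(b, c, d, a):
        return "self-intersecting"
    # Otherwise it is convex exactly when every vertex turns the same way.
    turns = [cross(a, b, c), cross(b, c, d), cross(c, d, a), cross(d, a, b)]
    if all(t > 0 for t in turns) or all(t < 0 for t in turns):
        return "convex"
    return "concave"  # also reached for degenerate (collinear) inputs

# Hypothetical examples: a square, a bowtie, and an arrowhead.
square = classify_quadrilateral((0, 0), (1, 0), (1, 1), (0, 1))
bowtie = classify_quadrilateral((0, 0), (1, 1), (1, 0), (0, 1))
arrow = classify_quadrilateral((0, 0), (2, 0), (1, 0.5), (1, 2))
```

One plausible use, stated here as an assumption, is to compare the classes of the quadrilaterals drawn from the two images and skip a sample when they differ, since a homography that is well behaved over the region maps a convex quadrilateral to a convex one.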
