George F R Ellis

Brain-inspired Distributed Cognitive Architecture

May 18, 2020

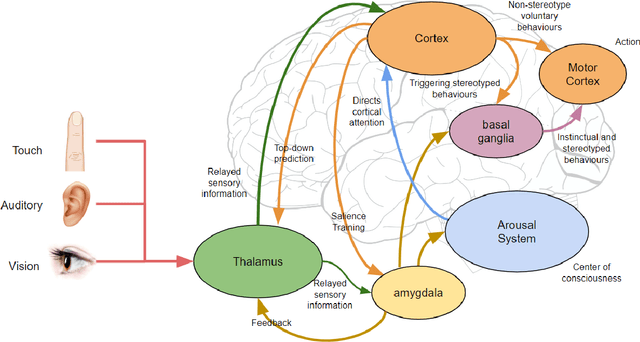

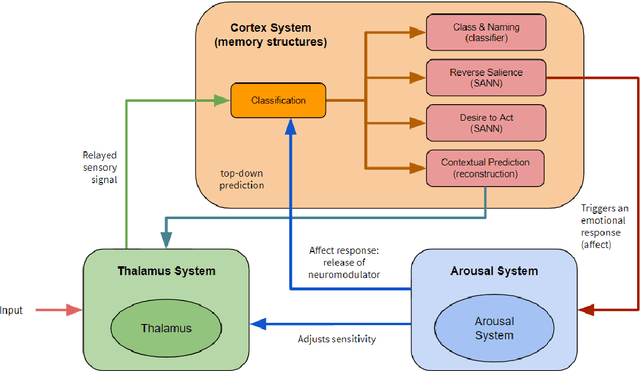

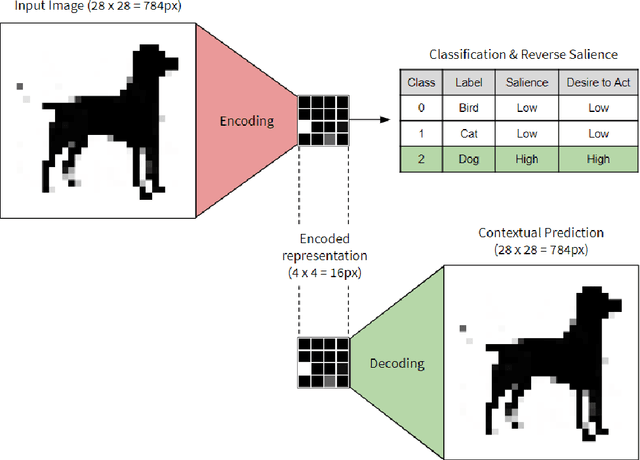

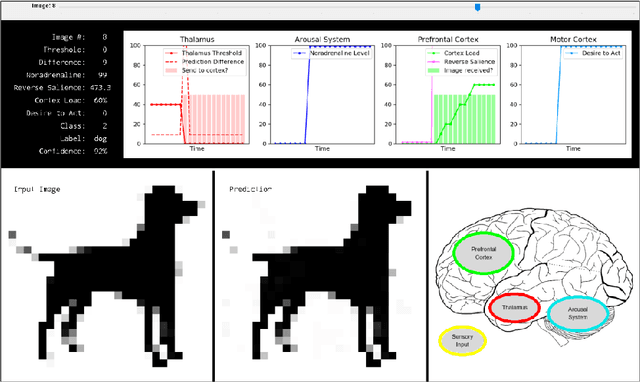

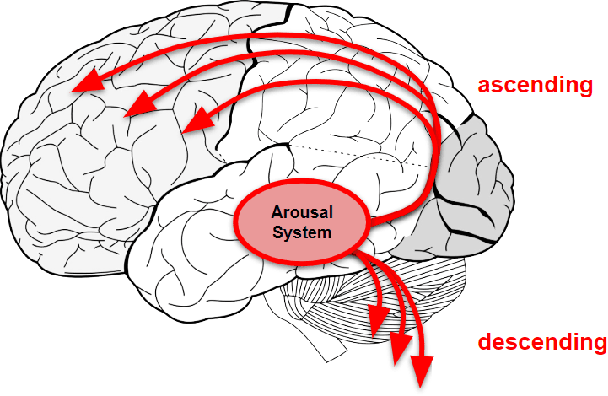

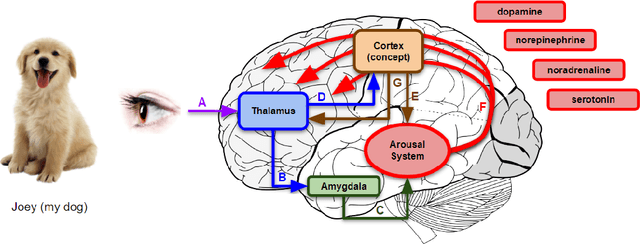

Abstract: In this paper we present a brain-inspired cognitive architecture that incorporates sensory processing, classification, contextual prediction, and emotional tagging. The cognitive architecture is implemented as three modular web servers, meaning that it can be deployed centrally or across a network of servers. The experiments reveal two distinct modes of behaviour, namely high- and low-salience modes of operation, which closely model attention in the brain. In addition to modelling the cortex, we demonstrate that a bio-inspired architecture introduces processing efficiencies. The software has been published as an open-source platform and can be easily extended by future research teams. This research lays the foundations for bio-realistic attention direction and sensory selection, and we believe that it is a key step towards achieving a bio-realistic artificially intelligent system.

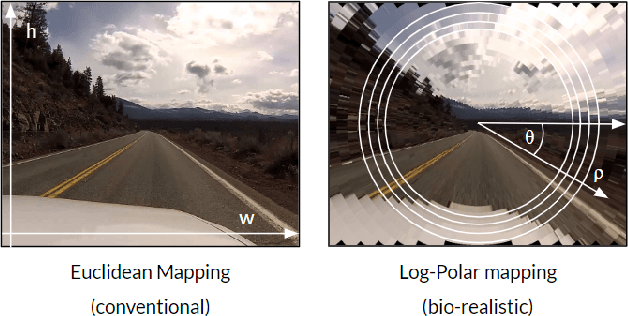

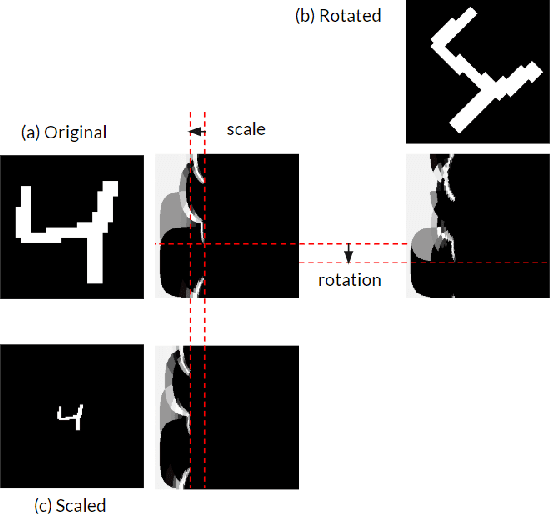

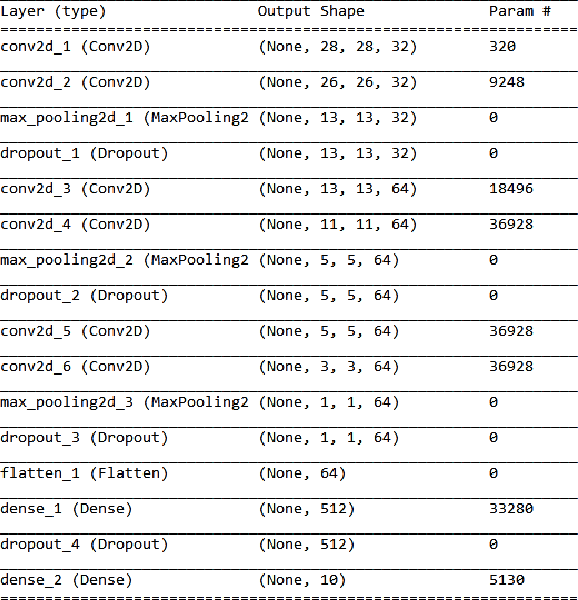

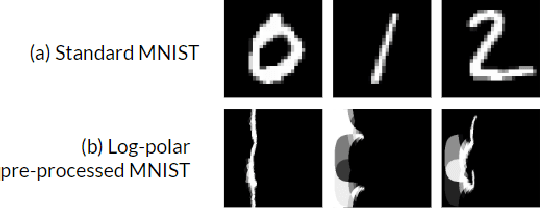

Human eye inspired log-polar pre-processing for neural networks

Nov 04, 2019

Abstract: In this paper we draw inspiration from the human visual system and present a bio-inspired pre-processing stage for neural networks. We implement this by applying a log-polar transformation as a pre-processing step, and demonstrate it using a naive convolutional neural network (CNN). We show that a bio-inspired pre-processing stage can achieve rotation and scale robustness in CNNs. A key point in this paper is that the CNN does not need to be trained to identify rotation or scaling permutations; rather, it is the log-polar pre-processing step that converts the image into a format that allows the CNN to handle rotation and scaling permutations. In addition, we demonstrate how adding a log-polar transformation as a pre-processing step can reduce the image size to ~20% of the Euclidean image size without significantly compromising the classification accuracy of the CNN. The pre-processing stage presented in this paper is modelled after the retina and is therefore only tested against an image dataset. Note: This paper has been submitted for SAUPEC/RobMech/PRASA 2020.
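To illustrate why a log-polar representation helps with rotation, here is a minimal sketch in plain Python. It is not the authors' implementation: the function name `log_polar`, the nearest-neighbour sampling, and the grid sizes are all assumptions chosen for brevity. It resamples a square image around its centre so that rows correspond to exponentially spaced radii and columns to angles; a 90-degree rotation of the input then appears as a cyclic shift along the angle axis.

```python
import math


def log_polar(image, n_rho, n_theta):
    """Resample a 2-D list-of-lists image onto a log-polar grid.

    Hypothetical helper for illustration only. Rows index log-spaced
    radii (1 px up to the largest centred circle), columns index angle.
    Uses nearest-neighbour sampling; requires n_rho >= 2.
    """
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    max_radius = min(cx, cy)
    out = [[0] * n_theta for _ in range(n_rho)]
    for i in range(n_rho):
        # Exponential spacing: radius runs from 1 to max_radius.
        radius = max_radius ** (i / (n_rho - 1))
        for j in range(n_theta):
            theta = 2.0 * math.pi * j / n_theta
            x = int(round(cx + radius * math.cos(theta)))
            y = int(round(cy + radius * math.sin(theta)))
            # Clamp to the image bounds to stay safe at the border.
            x = min(max(x, 0), width - 1)
            y = min(max(y, 0), height - 1)
            out[i][j] = image[y][x]
    return out


# A 9x9 test image with a unique value per pixel.
size = 9
image = [[y * size + x for x in range(size)] for y in range(size)]

# Rotate the image by 90 degrees about its centre.
rotated = [[image[x][size - 1 - y] for x in range(size)] for y in range(size)]

lp = log_polar(image, n_rho=4, n_theta=8)
lp_rot = log_polar(rotated, n_rho=4, n_theta=8)

# With 8 angular bins, a 90-degree rotation is a shift of 2 columns.
shifted = [row[2:] + row[:2] for row in lp]
print(lp_rot == shifted)
```

Scaling the input about its centre would similarly show up as a shift along the radius axis, which is the property that lets a convolutional network, being tolerant to translation, cope with rotation and scale without being trained on them explicitly.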

CTNN: Corticothalamic-inspired neural network

Oct 28, 2019

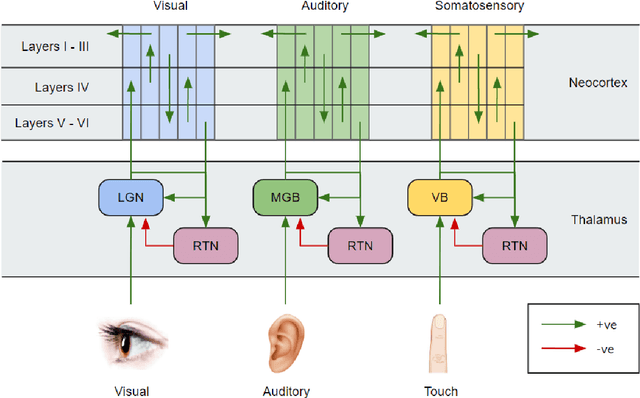

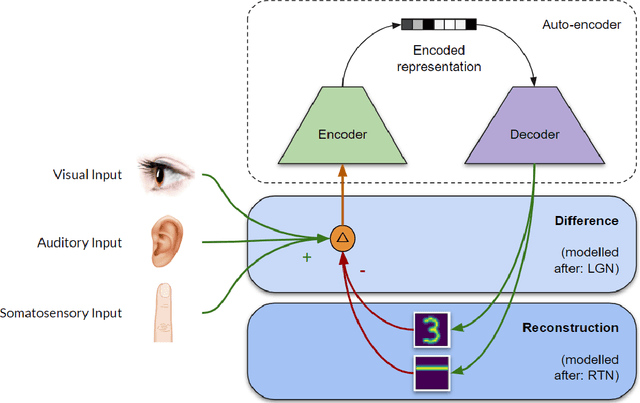

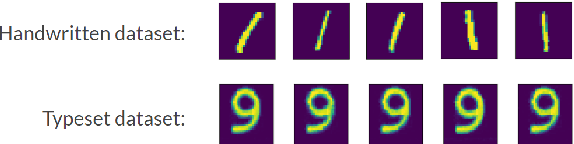

Abstract: Sensory predictions by the brain in all modalities take place as a result of bottom-up and top-down connections both in the neocortex and between the neocortex and the thalamus. The bottom-up connections in the cortex are responsible for learning, pattern recognition, and object classification, and have been widely modelled using artificial neural networks (ANNs). Current neural network models (such as predictive coding models) have poor processing efficiency, and are limited to one input type, neither of which is bio-realistic. Here, we present a neural network architecture modelled on the corticothalamic connections and the behaviour of the thalamus: a corticothalamic neural network (CTNN). The CTNN presented in this paper consists of an auto-encoder connected to a difference engine, which is inspired by the behaviour of the thalamus. We demonstrate that the CTNN is input agnostic, multi-modal, robust during partial occlusion of one or more sensory inputs, and has significantly higher processing efficiency than other predictive coding models, proportional to the number of sequentially similar inputs in a sequence. This research helps us understand how the human brain is able to provide contextual awareness to an object in the field of perception, handle robustness in a case of partial sensory occlusion, and achieve a high degree of autonomous behaviour while completing complex tasks such as driving a car.
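The abstract does not describe how the difference engine works internally, so the following is only a hedged sketch of one way an efficiency gain proportional to the number of sequentially similar inputs could arise. The class name `DifferenceGate`, the mean-absolute-difference measure, and the threshold are all assumptions made for illustration, and the `model` callable merely stands in for an expensive network such as an auto-encoder.

```python
class DifferenceGate:
    """Hypothetical gate that skips the model when inputs barely change."""

    def __init__(self, model, threshold):
        self.model = model          # expensive callable (stand-in for a network)
        self.threshold = threshold  # assumed similarity tolerance
        self.last_input = None      # last input that was actually processed
        self.last_output = None     # cached output for that input
        self.model_calls = 0        # how often the model really ran

    def __call__(self, x):
        if self.last_input is not None:
            # Mean absolute difference against the last processed input.
            diff = sum(abs(a - b) for a, b in zip(x, self.last_input)) / len(x)
            if diff <= self.threshold:
                return self.last_output  # similar enough: reuse cached result
        self.model_calls += 1
        self.last_input = list(x)
        self.last_output = self.model(x)
        return self.last_output


# Toy usage: `sum` stands in for the expensive model.
gate = DifferenceGate(model=sum, threshold=0.1)
sequence = [[1.0, 1.0], [1.0, 1.05], [5.0, 5.0], [5.0, 5.0]]
outputs = [gate(frame) for frame in sequence]
print(outputs, gate.model_calls)
```

In this toy setup the model runs only when the input changes appreciably, so the saving grows with the number of near-identical consecutive inputs.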

One-time learning in a biologically-inspired Salience-affected Artificial Neural Network

Aug 23, 2019

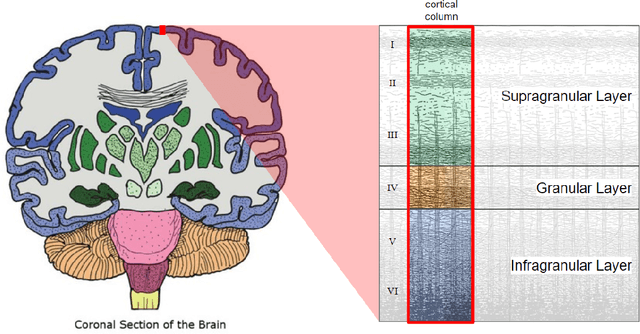

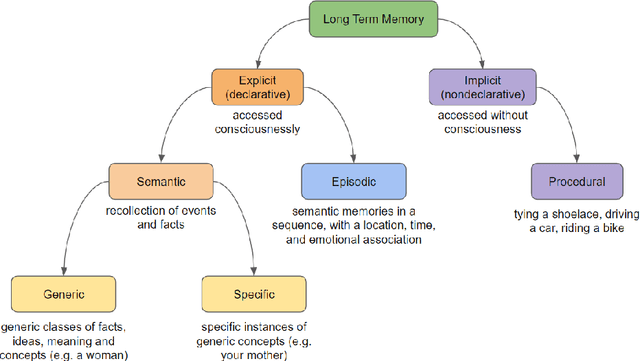

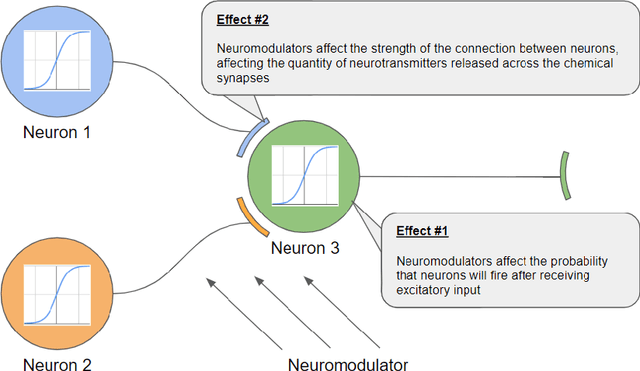

Abstract: Standard artificial neural networks (ANNs), loosely based on the structure of columns in the cortex, model key cognitive aspects of brain function such as learning and classification, but do not model the affective (emotional) aspects of brain function. However, these are a key feature of the brain (the associated 'ascending systems' have been hard-wired into the brain by evolutionary processes). These emotions are associated with memories when neuromodulators such as dopamine and noradrenaline affect entire patterns of synaptically activated neurons. Here we present a bio-inspired ANN architecture, which we call a Salience-Affected Neural Network (SANN), that combines local network processing of task-specific information with non-local salience (significance) effects responding to an input salience signal. During pattern recognition, inputs similar to the salience-affected inputs in the training data will produce reverse salience signals corresponding to those experienced when the memories were laid down. In addition, training with salience affects the weights of connections between nodes, and improves the overall accuracy of classification of images similar to the salience-tagged input after just a single iteration of training. Note that we are not aiming to present an accurate model of the biological salience system; rather, we present an artificial neural network, inspired by those biological systems in the human brain, that has unique strengths.
