CTNN: Corticothalamic-inspired neural network


Oct 28, 2019

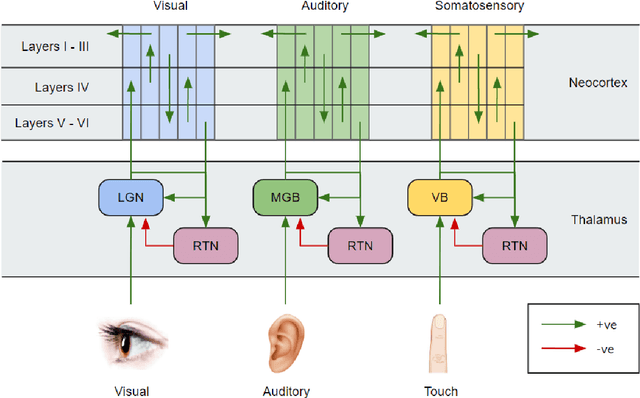

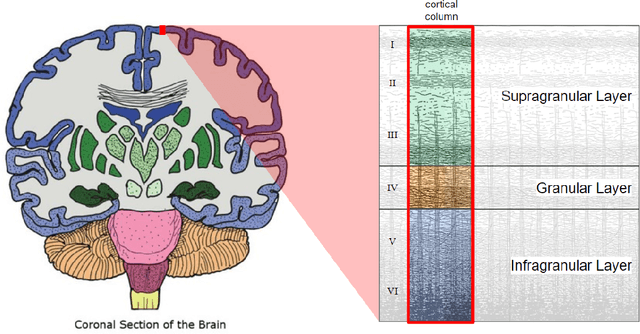

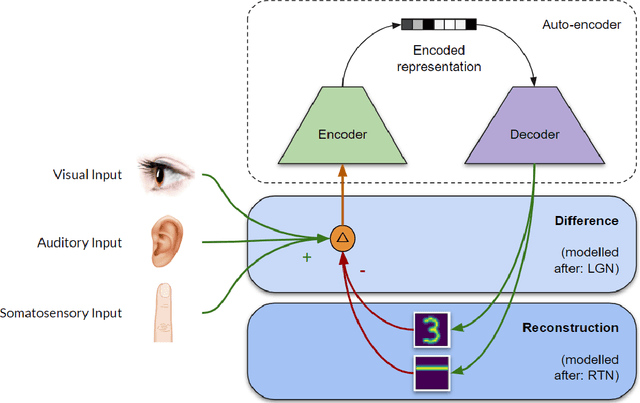

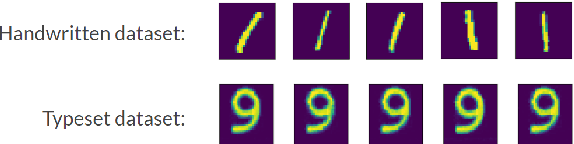

Sensory predictions by the brain in all modalities arise from bottom-up and top-down connections, both within the neocortex and between the neocortex and the thalamus. The bottom-up connections in the cortex are responsible for learning, pattern recognition, and object classification, and have been widely modelled using artificial neural networks (ANNs). Current neural network models (such as predictive coding models) have poor processing efficiency and are limited to a single input type; neither property is biologically realistic. Here, we present a neural network architecture modelled on the corticothalamic connections and the behaviour of the thalamus: a corticothalamic neural network (CTNN). The CTNN presented in this paper consists of an auto-encoder connected to a difference engine, which is inspired by the behaviour of the thalamus. We demonstrate that the CTNN is input agnostic, multi-modal, and robust during partial occlusion of one or more sensory inputs, and that it has significantly higher processing efficiency than other predictive coding models, with the gain proportional to the number of sequentially similar inputs in a sequence. This research helps us understand how the human brain is able to provide contextual awareness of an object in the field of perception, remain robust under partial sensory occlusion, and achieve a high degree of autonomous behaviour while completing complex tasks such as driving a car.
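The abstract does not spell out how the difference engine produces an efficiency gain that scales with the number of sequentially similar inputs. The sketch below shows one plausible reading, and it is an assumption rather than the authors' method: a gate compares each new input with the last input it forwarded and only runs the encoder when the L2 distance exceeds a threshold, otherwise reusing the cached encoding. `ToyEncoder`, `DifferenceEngine`, `threshold` and `forward_passes` are hypothetical names, and the encoder is an untrained random linear map standing in for the encoder half of the CTNN auto-encoder.

```python
import math
import random


class ToyEncoder:
    """Hypothetical stand-in for the encoder half of the CTNN auto-encoder:
    a fixed, untrained random linear map."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(n_in)
        self.w = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_hidden)]
        self.forward_passes = 0  # counts how often the (expensive) encoder runs

    def encode(self, x):
        self.forward_passes += 1
        return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in self.w]


class DifferenceEngine:
    """Hypothetical thalamus-inspired gate: forwards an input to the encoder
    only if it differs enough from the last input that was forwarded."""

    def __init__(self, model, threshold):
        self.model = model
        self.threshold = threshold  # assumed L2-distance threshold
        self.last_input = None
        self.last_code = None

    def step(self, x):
        if self.last_input is not None:
            if math.dist(x, self.last_input) < self.threshold:
                return self.last_code  # similar input: reuse cached code, skip the encoder
        self.last_input = list(x)
        self.last_code = self.model.encode(x)
        return self.last_code


if __name__ == "__main__":
    enc = ToyEncoder(n_in=4, n_hidden=2)
    engine = DifferenceEngine(enc, threshold=0.1)
    # Ten inputs forming two runs of five identical vectors.
    stream = [[1.0, 0.0, 0.0, 0.0]] * 5 + [[0.0, 1.0, 0.0, 0.0]] * 5
    codes = [engine.step(x) for x in stream]
    print(enc.forward_passes)  # 2: one pass per run of similar inputs
```

Comparing against the last *forwarded* input, rather than the immediately preceding one, means that slow drift still accumulates until it crosses the threshold and triggers a fresh encoding. With ten inputs in two runs, the encoder runs twice instead of ten times, so the saving grows with the length of each run of similar inputs.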
