Amit Mishra

User Logic Development for the Muon Identifier Common Readout Unit for the ALICE Experiment at the Large Hadron Collider

Apr 12, 2021

Abstract: The Large Hadron Collider (LHC) at CERN is undergoing a major upgrade with the goal of increasing the luminosity, as more statistics are needed for precision measurements. The presented work pertains to the corresponding upgrade of the ALICE Muon Trigger (MTR) Detector, now named the Muon Identifier (MID). Previously operated in a triggered readout manner, this detector has transitioned to continuous readout with time-delimited data payloads. However, this results in data rates much higher than in the previous operation, and hence a new Online-Offline (O2) computing system is also being developed for real-time data processing to reduce the storage requirements. Part of the O2 system is based on FPGA technology and is known as the Common Readout Unit (CRU). Because the CRU is common to many detectors, custom user logic must be developed per detector. This work concerns the development of the ALICE MID user logic, which will interface to the core CRU firmware and perform the required data processing. It presents the development of a conceptual design and a prototype for the user logic. The resulting prototype shows the ability to meet the established requirements in an effective and optimized manner. Additionally, the modular design approach employed allows more features to be introduced easily.

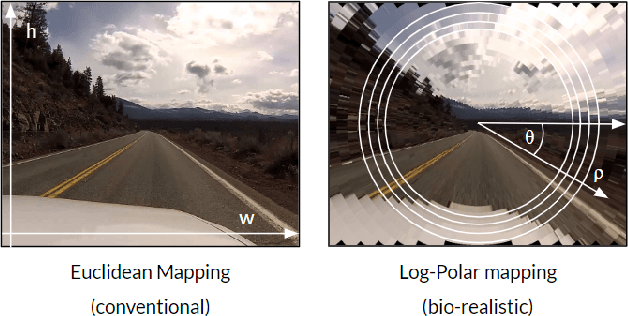

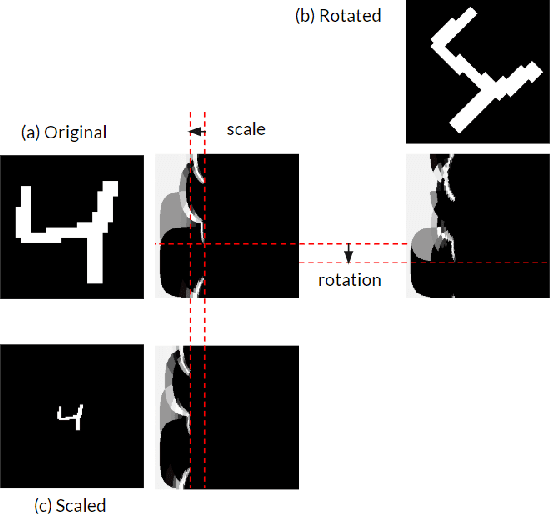

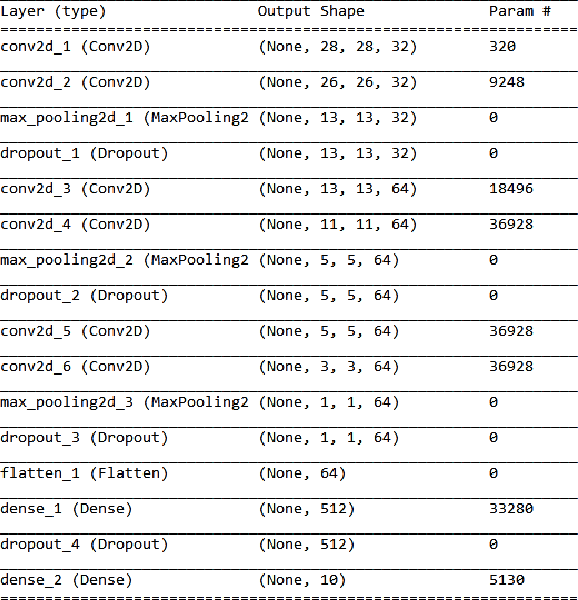

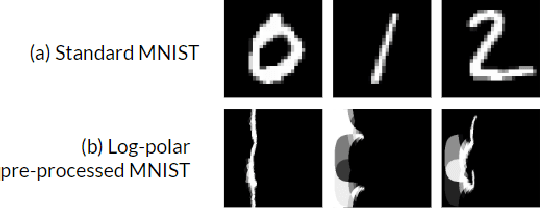

Human eye inspired log-polar pre-processing for neural networks

Nov 04, 2019

Abstract: In this paper we draw inspiration from the human visual system and present a bio-inspired pre-processing stage for neural networks. We implement this by applying a log-polar transformation as a pre-processing step, and to demonstrate it we use a naive convolutional neural network (CNN). We demonstrate that a bio-inspired pre-processing stage can achieve rotation and scale robustness in CNNs. A key point in this paper is that the CNN does not need to be trained to identify rotation or scaling permutations; rather, it is the log-polar pre-processing step that converts the image into a format that allows the CNN to handle rotation and scaling permutations. In addition, we demonstrate how adding a log-polar transformation as a pre-processing step can reduce the image size to ~20% of the Euclidean image size without significantly compromising the classification accuracy of the CNN. The pre-processing stage presented in this paper is modelled after the retina and is therefore only tested against an image dataset. Note: This paper has been submitted for SAUPEC/RobMech/PRASA 2020.
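
The property the abstract relies on can be illustrated with a small sketch: in log-polar coordinates, a rotation about the image centre becomes a circular shift along the angle axis, and a change of scale becomes a shift along the log-radius axis. The code below is not the authors' implementation. The function names `log_polar` and `rotate90`, the nearest-neighbour sampling, and the restriction to square images with an odd side length are all assumptions made to keep the example dependency-free.

```python
import math


def log_polar(image, n_rho, n_theta):
    """Resample a square, odd-sized 2D list onto a log-polar grid.

    Rows index geometrically spaced radii, columns index equally spaced
    angles. Nearest-neighbour sampling is an assumption of this sketch.
    """
    size = len(image)
    centre = (size - 1) // 2       # integer centre pixel (odd size assumed)
    max_radius = float(centre)     # largest radius that stays inside the image
    out = []
    for i in range(n_rho):
        # Radii grow geometrically up to max_radius.
        radius = max_radius ** ((i + 1) / n_rho)
        row = []
        for j in range(n_theta):
            theta = 2.0 * math.pi * j / n_theta
            x = centre + int(round(radius * math.cos(theta)))
            y = centre + int(round(radius * math.sin(theta)))
            # Clamp defensively against floating-point overshoot at the border.
            x = min(max(x, 0), size - 1)
            y = min(max(y, 0), size - 1)
            row.append(image[y][x])
        out.append(row)
    return out


def rotate90(image):
    """Rotate a square image by a quarter turn about its centre."""
    size = len(image)
    return [[image[size - 1 - x][y] for x in range(size)] for y in range(size)]
```

For a 9×9 input with `n_rho=4` and `n_theta=8`, rotating the input with `rotate90` circularly shifts the columns of the log-polar output by two positions (a quarter of the eight angle samples) instead of rearranging the pixel layout.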

CTNN: Corticothalamic-inspired neural network

Oct 28, 2019

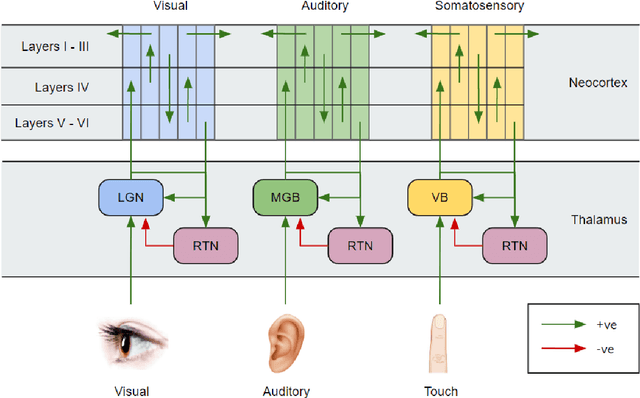

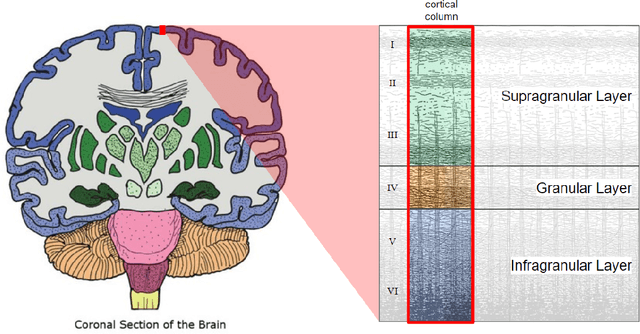

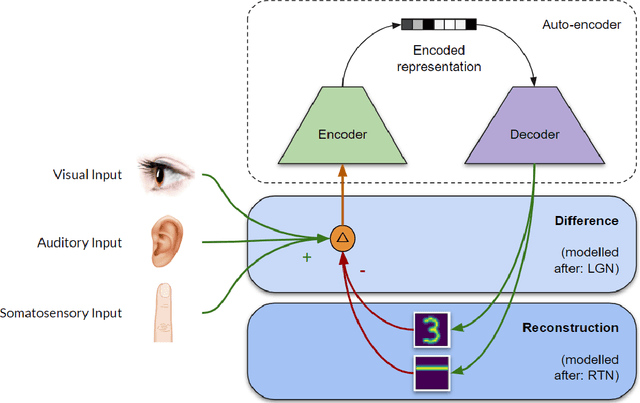

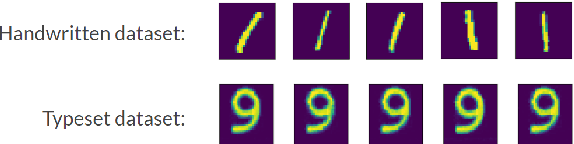

Abstract: Sensory predictions by the brain in all modalities take place as a result of bottom-up and top-down connections both in the neocortex and between the neocortex and the thalamus. The bottom-up connections in the cortex are responsible for learning, pattern recognition, and object classification, and have been widely modelled using artificial neural networks (ANNs). Current neural network models (such as predictive coding models) have poor processing efficiency, and are limited to one input type, neither of which is bio-realistic. Here, we present a neural network architecture modelled on the corticothalamic connections and the behaviour of the thalamus: a corticothalamic neural network (CTNN). The CTNN presented in this paper consists of an auto-encoder connected to a difference engine, which is inspired by the behaviour of the thalamus. We demonstrate that the CTNN is input agnostic, multi-modal, robust during partial occlusion of one or more sensory inputs, and has significantly higher processing efficiency than other predictive coding models, proportional to the number of sequentially similar inputs in a sequence. This research helps us understand how the human brain is able to provide contextual awareness to an object in the field of perception, handle robustness in a case of partial sensory occlusion, and achieve a high degree of autonomous behaviour while completing complex tasks such as driving a car.

CMB-GAN: Fast Simulations of Cosmic Microwave background anisotropy maps using Deep Learning

Aug 11, 2019

Abstract: The Cosmic Microwave Background (CMB) has been a cornerstone of many cosmology experiments and studies since it was discovered in 1964. Traditional computational models like CAMB that are used for generating CMB anisotropy maps are extremely resource intensive and act as a bottleneck in cosmology experiments that require a large amount of CMB data for analysis. In this paper, we present a new approach to the generation of CMB anisotropy maps using a machine learning technique called the Generative Adversarial Network (GAN). We train our deep generative model to learn the complex distribution of CMB maps and efficiently generate new sets of CMB data in the form of 2D patches of anisotropy maps. We limit our experiment to the generation of 56° and 112° patches of CMB maps. We have also trained a multilayer perceptron model for estimating baryon density from a CMB map. We use this model to evaluate the performance of our generative model, together with diagnostic measures such as the histogram of pixel intensities, the standard deviation of the pixel intensity distribution, the power spectrum, the cross power spectrum, the correlation matrix of the power spectrum, and the peak count.

ExGate: Externally Controlled Gating for Feature-based Attention in Artificial Neural Networks

Nov 08, 2018

Abstract: Perceptual capabilities of artificial systems have come a long way since the advent of deep learning. These methods have proven to be effective; however, they are not as efficient as their biological counterparts. Visual attention is a set of mechanisms that are employed in biological visual systems to ease computational load by only processing pertinent parts of the stimuli. This paper addresses the implementation of top-down, feature-based attention in an artificial neural network by use of externally controlled neuron gating. Our results showed a 5% increase in classification accuracy on the CIFAR-10 dataset versus a non-gated version, while adding very few parameters. Our gated model also produces more reasonable errors in predictions by drastically reducing the prediction of classes that belong to a different category from the true class.
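
As a rough illustration of externally controlled gating, the sketch below scales each unit of a small ReLU layer by a gate value supplied from outside the layer. It assumes multiplicative gates in the range 0 to 1 applied to the unit outputs; the abstract does not specify how the gates are computed or where they act, so the function name `gated_layer`, the layer shape, and the gate values are hypothetical.

```python
def gated_layer(inputs, weights, biases, gates):
    """Dense ReLU layer whose unit outputs are scaled by external gates.

    `gates` holds one value per unit and is provided by the caller, not
    learned inside this layer (an assumption of this sketch).
    """
    outputs = []
    for unit_weights, bias, gate in zip(weights, biases, gates):
        pre_activation = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        # A gate of 0 silences the unit; a gate of 1 leaves it unchanged.
        outputs.append(gate * max(0.0, pre_activation))
    return outputs


inputs = [1.0, 2.0]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
biases = [0.0, 0.0, -5.0]

ungated = gated_layer(inputs, weights, biases, gates=[1.0, 1.0, 1.0])
attended = gated_layer(inputs, weights, biases, gates=[1.0, 0.0, 1.0])
```

Here `attended` differs from `ungated` only in the second unit, which the external gate switches off. The gate vector adds one value per unit, which is one way such a scheme could keep the parameter count low.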

A Dictionary Approach to Identifying Transient RFI

Nov 23, 2017

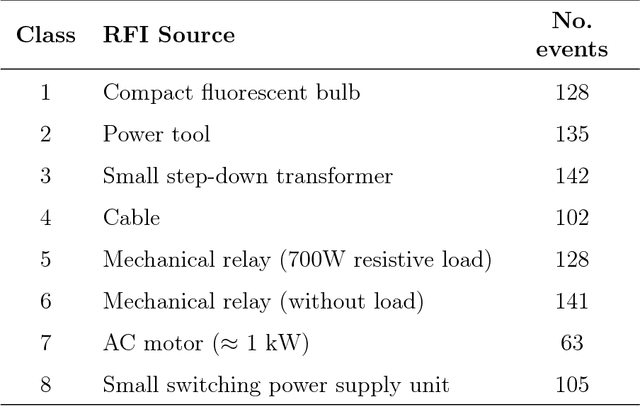

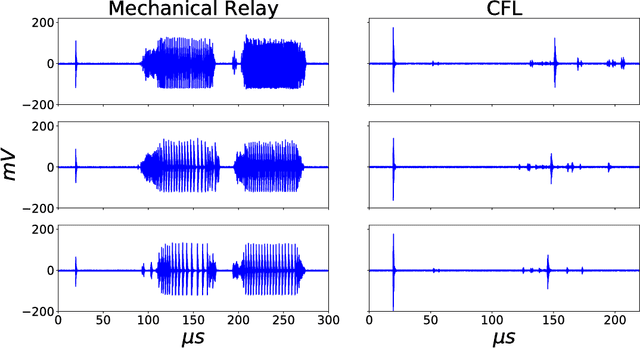

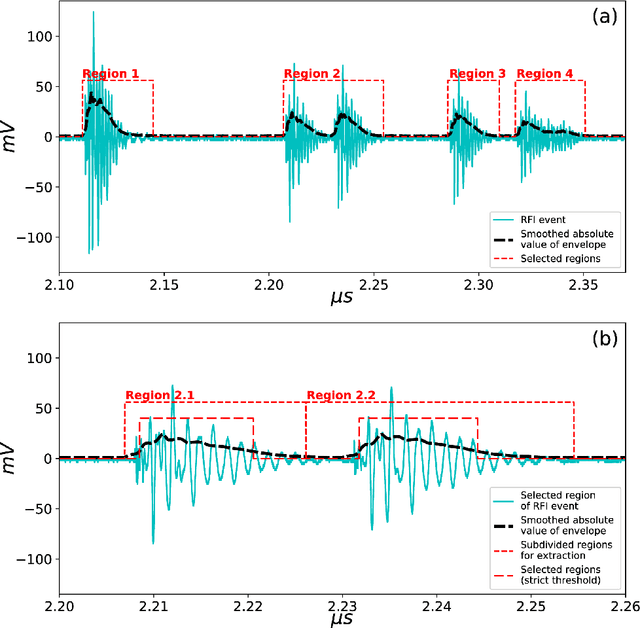

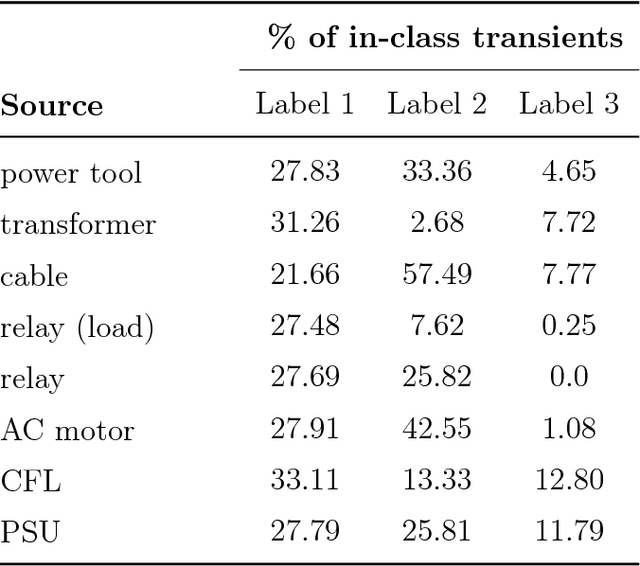

Abstract: As radio telescopes become more sensitive, the damaging effects of radio frequency interference (RFI) become more apparent. Near radio telescope arrays, RFI sources are often easily removed or replaced; the challenge lies in identifying them. Transient (impulsive) RFI is particularly difficult to identify. We propose a novel dictionary-based approach to transient RFI identification. RFI events are treated as sequences of sub-events, drawn from particular labelled classes. We demonstrate an automated method of extracting and labelling sub-events using a dataset of transient RFI. A dictionary of labels may be used in conjunction with hidden Markov models to identify the sources of RFI events reliably. We attain improved classification accuracy over traditional approaches such as SVMs or a naïve kNN classifier. Finally, we investigate why transient RFI is difficult to classify. We show that cluster separation in the principal components domain is influenced by the mains supply phase for certain sources.