Gary Miller

Spectral Clustering on Large Datasets: When Does it Work? Theory from Continuous Clustering and Density Cheeger-Buser

May 11, 2023

Abstract: Spectral clustering is one of the most popular clustering algorithms that has stood the test of time. It is simple to describe, can be implemented using standard linear algebra, and often finds better clusters than traditional clustering algorithms like $k$-means and $k$-centers. The foundational algorithm for two-way spectral clustering, by Shi and Malik, creates a geometric graph from data and finds a spectral cut of the graph. In modern machine learning, many data sets are modeled as a large number of points drawn from a probability density function. Little is known about when spectral clustering works in this setting, and when it does not. Past researchers justified spectral clustering by appealing to the graph Cheeger inequality (which states that the spectral cut of a graph approximates the "Normalized Cut"), but this justification is known to break down on large data sets. We provide theoretically informed intuition about spectral clustering on large data sets drawn from probability densities, by proving when a continuous form of spectral clustering considered by past researchers (the unweighted spectral cut of a probability density) finds good clusters of the underlying density itself. Our work suggests that Shi-Malik spectral clustering works well on data drawn from mixtures of Laplace distributions, and works poorly on data drawn from certain other densities, such as a density we call the "square-root trough". Our core theorem proves that weighted spectral cuts have low weighted isoperimetry for all probability densities. Our key tool is a new Cheeger-Buser inequality for all probability densities, including discontinuous ones.
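As a rough illustration of the graph-based pipeline described above, the Python sketch below builds a dense geometric graph with a Gaussian kernel, takes the second eigenvector of the normalized Laplacian $N = I - D^{-1/2} W D^{-1/2}$, and sweeps over the ordering it induces for the cut of lowest conductance $\phi(S) = w(S, \bar S) / \min(\mathrm{vol}(S), \mathrm{vol}(\bar S))$. The Gaussian kernel, the bandwidth `sigma`, the two-blob test data, and the helper names are assumptions chosen for illustration; this is a textbook-style sketch, not the paper's continuous construction. Conductance is used in place of Shi and Malik's Normalized Cut; the two agree up to a factor of 2, since $\phi(S) \le \mathrm{Ncut}(S) \le 2\phi(S)$. The graph Cheeger inequality then gives $\lambda_2/2 \le \phi(G) \le \phi(S_{\text{sweep}}) \le \sqrt{2\lambda_2}$, which is the graph-level justification the abstract says breaks down on large data sets.

```python
import numpy as np


def gaussian_affinity(points: np.ndarray, sigma: float) -> np.ndarray:
    """Dense geometric graph w_ij = exp(-||p_i - p_j||^2 / (2 sigma^2)), no self-loops.

    Illustrative assumption: a Gaussian kernel; the paper does not prescribe this choice here.
    """
    sq_dists = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    weights = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(weights, 0.0)
    return weights


def conductance(weights: np.ndarray, mask: np.ndarray) -> float:
    """cut(S, S^c) / min(vol(S), vol(S^c)) for the vertex set S selected by mask."""
    degrees = weights.sum(axis=1)
    cut = weights[mask][:, ~mask].sum()
    return cut / min(degrees[mask].sum(), degrees[~mask].sum())


def spectral_cut(weights: np.ndarray):
    """Two-way spectral cut: sweep over the ordering given by D^{-1/2} v_2."""
    degrees = weights.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    # Normalized Laplacian N = I - D^{-1/2} W D^{-1/2}.
    lap = np.eye(len(degrees)) - d_inv_sqrt[:, None] * weights * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(lap)  # eigenvalues in ascending order
    lambda2 = eigvals[1]
    embedding = d_inv_sqrt * eigvecs[:, 1]
    order = np.argsort(embedding)
    best_mask, best_phi = None, np.inf
    # Every proper prefix of the sorted order is a candidate set S.
    for k in range(1, len(order)):
        mask = np.zeros(len(order), dtype=bool)
        mask[order[:k]] = True
        phi = conductance(weights, mask)
        if phi < best_phi:
            best_mask, best_phi = mask, phi
    return best_mask, best_phi, lambda2


# Hypothetical data: two well-separated Gaussian blobs of 40 points each.
rng = np.random.default_rng(0)
points = np.vstack([rng.normal(-2.0, 0.5, (40, 2)), rng.normal(2.0, 0.5, (40, 2))])
weights = gaussian_affinity(points, sigma=1.0)
mask, phi, lambda2 = spectral_cut(weights)
print(f"lambda2={lambda2:.2e}, sweep conductance={phi:.2e}, sizes={mask.sum()}/{(~mask).sum()}")
```

On well-separated blobs like these, the sweep recovers the two blobs; the abstract's point is that such graph-level guarantees say little about what the cut converges to as the number of sampled points grows.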

Functions that Preserve Manhattan Distances

Nov 23, 2020

Abstract: What functions, when applied to the pairwise Manhattan distances between any $n$ points, result in the Manhattan distances between another set of $n$ points? In this paper, we show that a function has this property if and only if it is a Bernstein function. This class of functions admits several classical analytic characterizations and includes $f(x) = x^s$ for $0 \leq s \leq 1$ as well as $f(x) = 1-e^{-xt}$ for any $t \geq 0$. While it was previously known that Bernstein functions had this property, it was not known that these were the only such functions. Our results are a natural extension of the work of Schoenberg from 1938, who addressed this question for Euclidean distances. Schoenberg's work has been applied in probability theory, harmonic analysis, machine learning, theoretical computer science, and more. We additionally show that $f$ is completely monotone if and only if, for any $x_1, \ldots, x_n \in \ell_1$, there exists $F:\ell_1 \rightarrow \mathbb{R}^n$ such that $f(\|x_i - x_j\|_1) = \langle F(x_i), F(x_j) \rangle$ for all $i, j$. Previously, it was known that completely monotone functions had this property, but it was not known that they were the only such functions. The same result with negative-type distances in place of $\ell_1$ distances was proven by Schoenberg in 1942 and is the foundation of all kernel methods in machine learning.
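A numerical check on one point set cannot prove either characterization, but it can illustrate the previously known directions. The Python sketch below checks that $e^{-t\|x_i - x_j\|_1}$, a completely monotone function of the Manhattan distance, gives a positive semidefinite matrix, i.e. a Gram matrix $\langle F(x_i), F(x_j) \rangle$. Deciding whether a metric is exactly $\ell_1$-embeddable is computationally hard in general, so for the Bernstein function $\sqrt{x}$ the sketch checks only a necessary condition: every $\ell_1$ metric is of negative type, meaning $\sum_{i,j} b_i b_j d_{ij} \le 0$ whenever $\sum_i b_i = 0$. The random point set, `t = 2.0`, and the helper names are arbitrary illustrative assumptions.

```python
import numpy as np


def manhattan_distances(points: np.ndarray) -> np.ndarray:
    """Pairwise l1 distances ||x_i - x_j||_1."""
    return np.abs(points[:, None, :] - points[None, :, :]).sum(axis=-1)


def min_gram_eigenvalue(matrix: np.ndarray) -> float:
    """Smallest eigenvalue; >= 0 (up to round-off) iff the matrix is a Gram matrix."""
    return float(np.linalg.eigvalsh(matrix)[0])


def max_negative_type_eigenvalue(dist: np.ndarray) -> float:
    """Largest eigenvalue of P D P with P = I - 11^T / n.

    P projects onto vectors b with sum(b) = 0, so this is <= 0 (up to round-off)
    exactly when sum_ij b_i b_j d_ij <= 0 for all such b, i.e. D is of negative type.
    """
    n = dist.shape[0]
    proj = np.eye(n) - np.ones((n, n)) / n
    return float(np.linalg.eigvalsh(proj @ dist @ proj)[-1])


# Hypothetical data: 30 random points in 5-dimensional l1 space.
rng = np.random.default_rng(1)
points = rng.uniform(0.0, 1.0, size=(30, 5))
dist = manhattan_distances(points)

# Completely monotone f(x) = exp(-t x): f applied to D should be positive semidefinite.
t = 2.0
laplace_kernel = np.exp(-t * dist)
print("min eigenvalue of exp(-t D):", min_gram_eigenvalue(laplace_kernel))

# Bernstein f(x) = sqrt(x): sqrt(D) should again be an l1 metric, hence of negative type.
print("max eigenvalue of P sqrt(D) P:", max_negative_type_eigenvalue(np.sqrt(dist)))
```

Passing the negative-type check does not certify $\ell_1$-embeddability; it only rules out an obvious failure.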

Runtime Guarantees for Regression Problems

Sep 07, 2012

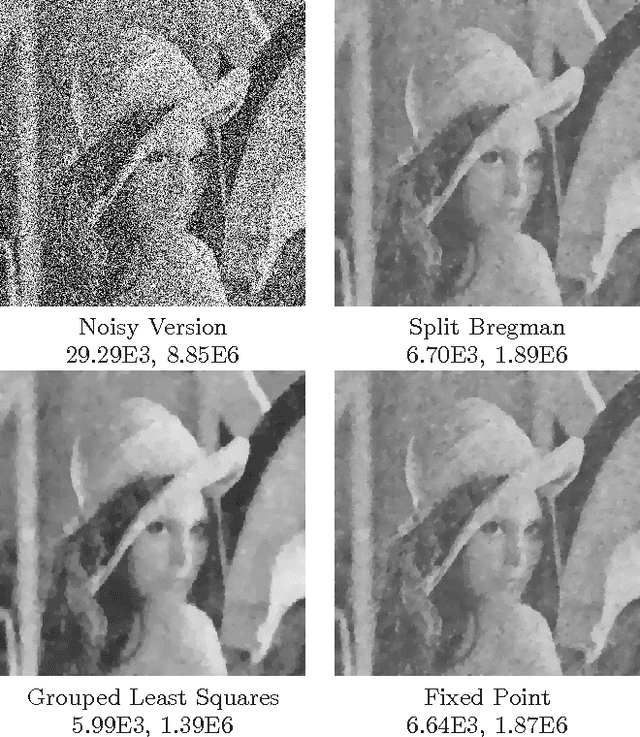

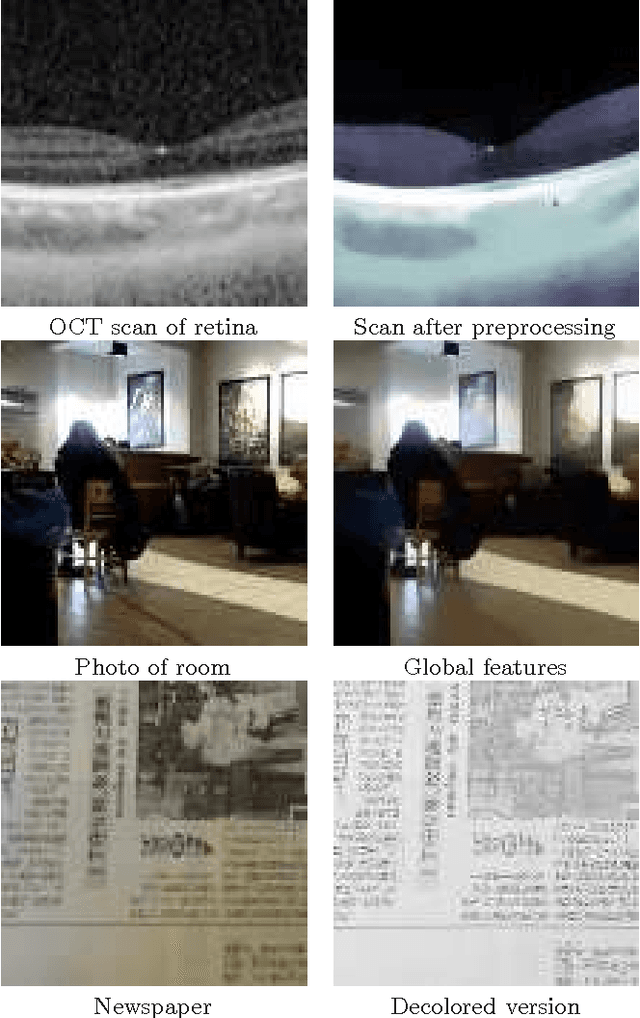

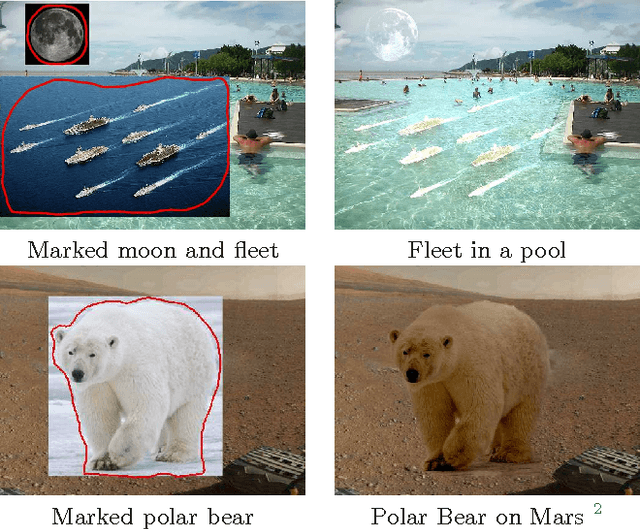

Abstract: We study theoretical runtime guarantees for a class of optimization problems that occur in a wide variety of inference problems. These problems are motivated by the lasso framework and have applications in machine learning and computer vision. Our work shows a close connection between these problems and core questions in algorithmic graph theory. While this connection demonstrates the difficulties of obtaining runtime guarantees, it also suggests using techniques originally developed for graph algorithms. We then show that most of these problems can be formulated as a grouped least squares problem, and give efficient algorithms for this formulation. Our algorithms rely on routines for solving quadratic minimization problems, which in turn are equivalent to solving linear systems. Finally, we present some experimental results on applying our approximation algorithm to image processing problems.
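The equivalence between quadratic minimization and linear systems can be seen in a small, hypothetical instance (not the paper's grouped least squares algorithm). The Python sketch below smooths a noisy image $y$ by minimizing $\|x - y\|_2^2 + \lambda \sum_{(i,j) \in E} (x_i - x_j)^2$ over the 4-neighbour pixel grid $E$. Because $\sum_{(i,j) \in E} (x_i - x_j)^2 = x^\top L x$ for the grid Laplacian $L$, setting the gradient $2(x - y) + 2\lambda L x$ to zero gives the linear system $(I + \lambda L)x = y$. The step-edge image, noise level, $\lambda = 2$, and helper names are illustrative assumptions.

```python
import numpy as np


def grid_laplacian(height: int, width: int) -> np.ndarray:
    """Dense graph Laplacian L = D - A of the 4-neighbour pixel grid (unit edge weights)."""
    n = height * width
    lap = np.zeros((n, n))
    for r in range(height):
        for c in range(width):
            i = r * width + c
            # Visit only right and down neighbours so each edge is added once.
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < height and cc < width:
                    j = rr * width + cc
                    lap[i, i] += 1.0
                    lap[j, j] += 1.0
                    lap[i, j] -= 1.0
                    lap[j, i] -= 1.0
    return lap


def smooth_image(noisy: np.ndarray, lam: float) -> np.ndarray:
    """Minimize ||x - y||^2 + lam * x^T L x by solving (I + lam L) x = y."""
    height, width = noisy.shape
    lap = grid_laplacian(height, width)
    system = np.eye(height * width) + lam * lap
    x = np.linalg.solve(system, noisy.ravel())
    return x.reshape(height, width)


# Hypothetical data: a 12x12 image with a vertical step edge plus Gaussian noise.
rng = np.random.default_rng(2)
clean = np.zeros((12, 12))
clean[:, 6:] = 1.0
noisy = clean + rng.normal(0.0, 0.3, clean.shape)
smoothed = smooth_image(noisy, lam=2.0)
print("mean abs error, noisy vs smoothed:",
      np.abs(noisy - clean).mean(), np.abs(smoothed - clean).mean())
```

The dense `np.linalg.solve` keeps the sketch short but costs cubic time in the number of pixels; larger images would call for a sparse or iterative solver applied to the same system.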
