Gary A. McCully

Impact of Data Snooping on Deep Learning Models for Locating Vulnerabilities in Lifted Code

Dec 03, 2024

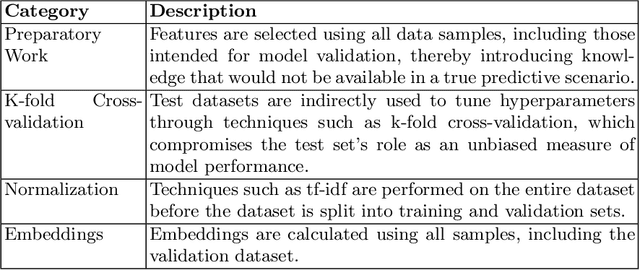

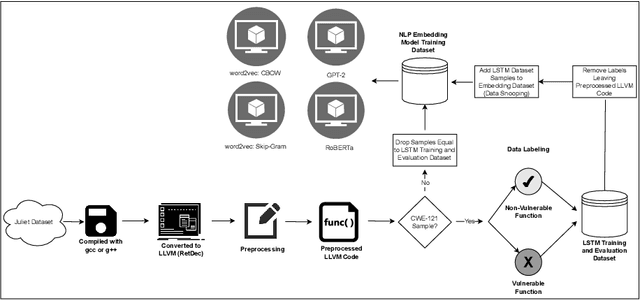

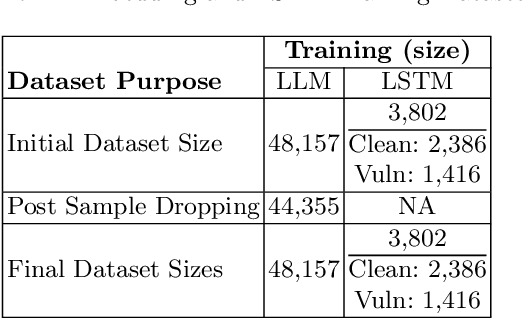

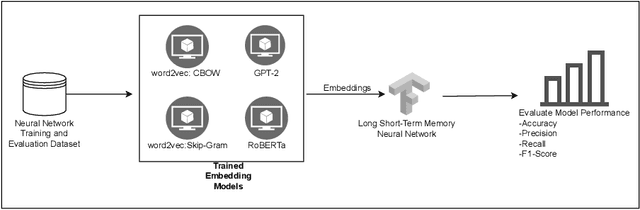

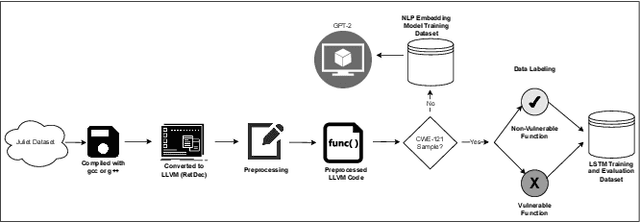

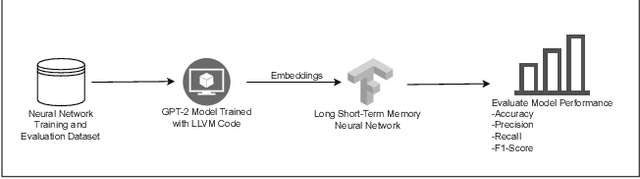

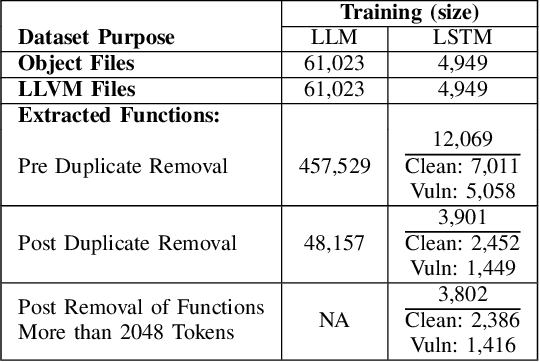

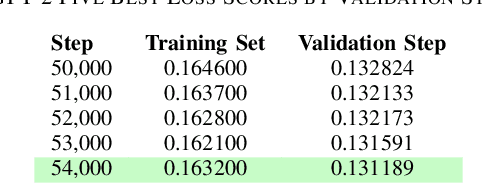

Abstract: This study examines the impact of data snooping on neural networks for vulnerability detection in lifted code, building on previous research that used word2vec and unidirectional and bidirectional transformer-based embeddings. The research specifically focuses on how model performance is affected when the embedding models are trained on datasets that include samples also used for neural network training and validation. The results show that introducing data snooping did not significantly alter model performance, suggesting either that data snooping had minimal impact or that the samples randomly dropped as part of the methodology contained hidden features critical to achieving optimal performance. In addition, the findings reinforce the conclusions of previous research, which found that models trained with GPT-2 embeddings consistently outperformed neural networks trained with other embeddings. That this holds even when data snooping is introduced into the embedding model indicates GPT-2's robustness in representing complex code features, even under less-than-ideal conditions.
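In this setting, data snooping means that the embedding model's training corpus overlaps with the samples later used to train and validate the classifier. The minimal Python sketch below assumes each LLVM function is identified by a hashable ID. It splits the IDs once, before any model is trained, and measures how much of an evaluation split the embedding corpus has already seen. The helper names `split_ids` and `snooping_overlap` are hypothetical and are not taken from the paper.

```python
import random

def split_ids(sample_ids, val_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle sample IDs once and partition them into disjoint train/val/test sets."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_test = int(len(ids) * test_frac)
    n_val = int(len(ids) * val_frac)
    test = set(ids[:n_test])
    val = set(ids[n_test:n_test + n_val])
    train = set(ids[n_test + n_val:])
    return train, val, test

def snooping_overlap(embedding_corpus_ids, eval_ids):
    """Fraction of evaluation samples that also appear in the embedding model's corpus."""
    eval_ids = set(eval_ids)
    if not eval_ids:
        return 0.0
    return len(eval_ids & set(embedding_corpus_ids)) / len(eval_ids)

if __name__ == "__main__":
    all_ids = range(1000)  # stand-in for LLVM function IDs (hypothetical)
    train, val, test = split_ids(all_ids)
    # Clean setup: the embedding model sees only the classifier's training split.
    print("clean:", snooping_overlap(train, val))      # 0.0
    # Snooped setup: the embedding model is trained on every sample.
    print("snooped:", snooping_overlap(all_ids, val))  # 1.0
```

An overlap of 0.0 corresponds to a leakage-free embedding corpus. A value near 1.0 corresponds to the snooped condition the study examines.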

Comparing Unidirectional, Bidirectional, and Word2vec Models for Discovering Vulnerabilities in Compiled Lifted Code

Sep 26, 2024

Abstract: Ransomware and other forms of malware cause significant financial and operational damage to organizations by exploiting long-standing and often difficult-to-detect software vulnerabilities. To detect vulnerabilities such as buffer overflows in compiled code, this research investigates the application of unidirectional transformer-based embeddings, specifically GPT-2. Using a dataset of LLVM functions, we trained a GPT-2 model to generate embeddings, which were subsequently used to build LSTM neural networks that differentiate between vulnerable and non-vulnerable code. Our study reveals that embeddings from the GPT-2 model significantly outperform those from the bidirectional models BERT and RoBERTa, achieving an accuracy of 92.5% and an F1-score of 89.7%. LSTM neural networks were developed with both frozen and unfrozen embedding model layers, and the highest-performing model was obtained with the embedding layers unfrozen. In exploring the impact of different optimizers within this domain, the research further finds that the SGD optimizer outperforms Adam. Overall, these findings offer important insights into the potential of unidirectional transformer-based approaches to enhance cybersecurity defenses.
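The abstract reports both accuracy and F1-score. To show how the two metrics differ, the sketch below computes them from binary labels. It assumes label 1 marks a vulnerable function. `binary_metrics` is a hypothetical helper, not code from the paper, and the toy labels are illustrative rather than the study's data.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary classifier."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # vulnerable, flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # safe, flagged
    fn = sum(t == positive and p != positive for t, p in pairs)  # vulnerable, missed
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

if __name__ == "__main__":
    # Toy labels: 1 = vulnerable, 0 = non-vulnerable (illustrative only).
    y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
    print(binary_metrics(y_true, y_pred))
```

In this toy example, accuracy is 0.8 while F1 is about 0.67. F1 ignores true negatives, so when positives are rare, each missed or false alarm weighs more heavily on it than on accuracy.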

Bi-Directional Transformers vs. word2vec: Discovering Vulnerabilities in Lifted Compiled Code

May 31, 2024

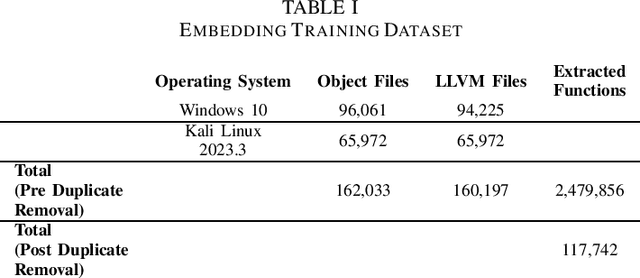

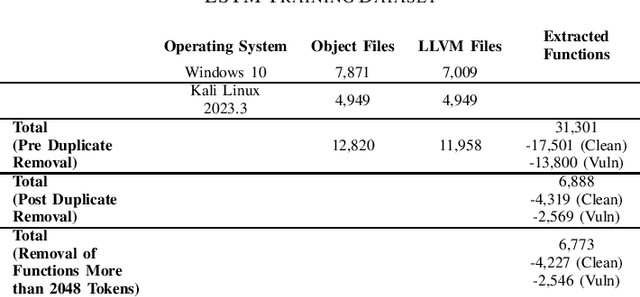

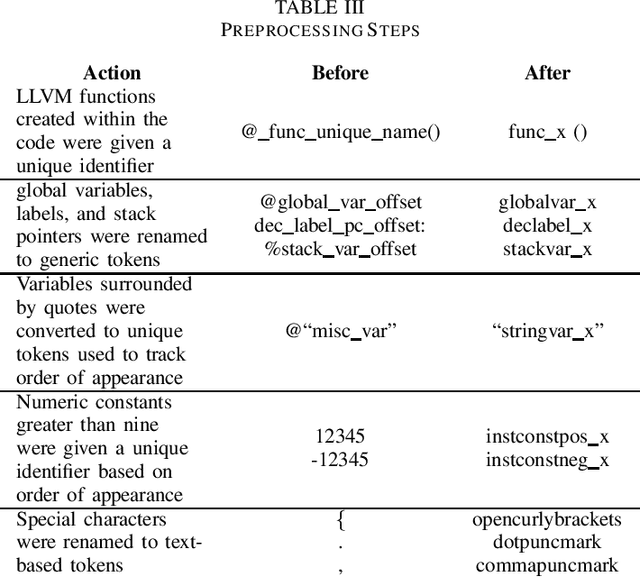

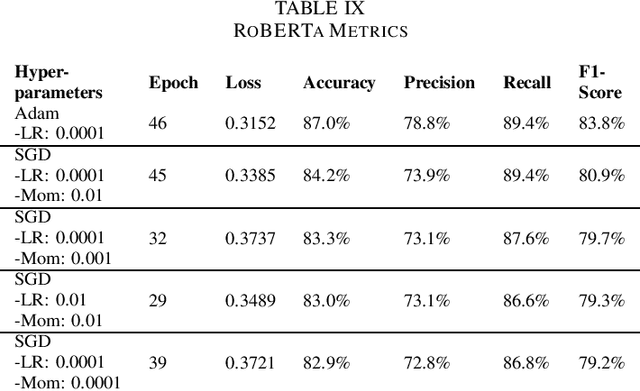

Abstract: Detecting vulnerabilities within compiled binaries is challenging due to lost high-level code structures and other factors such as architectural dependencies, compilers, and optimization options. To address these obstacles, this research explores vulnerability detection by using natural language processing (NLP) embedding techniques with word2vec, BERT, and RoBERTa to learn semantics from intermediate representation (LLVM) code. Long short-term memory (LSTM) neural networks were trained on embeddings from encoders created using approximately 118k LLVM functions from the Juliet dataset. This study is pioneering in comparing word2vec models with multiple bidirectional transformer (BERT, RoBERTa) embeddings built from LLVM code to train neural networks that detect vulnerabilities in compiled binaries. word2vec Continuous Bag of Words (CBOW) models achieved 92.3% validation accuracy in detecting vulnerabilities, outperforming word2vec Skip-Gram, BERT, and RoBERTa. This suggests that complex contextual NLP embeddings may not provide advantages over simpler word2vec models for this task when only a limited number of data samples (e.g., 118k) is used to train the bidirectional transformer-based models. The comparative results provide novel insights into selecting optimal embeddings for learning compiler-independent semantic code representations to advance machine learning detection of vulnerabilities in compiled binaries.
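The two word2vec variants compared here differ in their training objective. CBOW predicts a token from its surrounding context, while Skip-Gram predicts the surrounding context from a token. The sketch below only builds the training pairs each variant would use from one tokenized LLVM instruction. The tokenization scheme, the window size, and the helper names `cbow_pairs` and `skipgram_pairs` are assumptions for illustration, not the paper's preprocessing.

```python
def cbow_pairs(tokens, window=2):
    """CBOW: one (context, target) pair per position; the context predicts the center token."""
    pairs = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
        pairs.append((tuple(context), target))
    return pairs

def skipgram_pairs(tokens, window=2):
    """Skip-Gram: one (center, context) pair per neighbor; the center predicts each neighbor."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

if __name__ == "__main__":
    # "%1 = load i32, ptr %x" split into tokens (hypothetical tokenization).
    tokens = ["%1", "=", "load", "i32", ",", "ptr", "%x"]
    print(cbow_pairs(tokens, window=1)[:3])
    print(skipgram_pairs(tokens, window=1)[:3])
```

Skip-Gram emits one pair per (center, neighbor) combination, so the same sequence yields more, finer-grained training examples. CBOW instead combines the whole context into a single prediction per position.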

Confronting the Reproducibility Crisis: A Case Study in Validating Certified Robustness

May 29, 2024

Abstract: Reproducibility is a cornerstone of scientific research, enabling validation, extension, and progress. However, the rapidly evolving nature of software and dependencies poses significant challenges to reproducing research results, particularly in fields like adversarial robustness for deep neural networks, where complex codebases and specialized toolkits are used. This paper presents a case study of attempting to validate the results on certified adversarial robustness in "SoK: Certified Robustness for Deep Neural Networks" using the VeriGauge toolkit. Despite following the documented methodology, we encountered numerous software and hardware compatibility issues, including outdated or unavailable dependencies, version conflicts, and driver incompatibilities. While a subset of the original experiments could be rerun, key findings related to the empirical robust accuracy of various verification methods proved elusive due to these technical obstacles, as well as slight discrepancies in the test results. This practical experience sheds light on the reproducibility crisis afflicting adversarial robustness research, where a lack of reproducibility threatens scientific integrity and hinders progress. The paper discusses the broader implications of this crisis and proposes potential solutions such as containerization, software preservation, and comprehensive documentation practices. Furthermore, it highlights the need for collaboration and standardization efforts within the research community to develop robust frameworks for reproducible research. By addressing the reproducibility crisis head-on, this work aims to contribute to the ongoing discourse on scientific reproducibility and to advocate for best practices that ensure the reliability and validity of research findings not only in adversarial robustness but in security and technology research as a whole.
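Comprehensive documentation is among the remedies proposed above. One small, concrete part of it is recording the exact software environment alongside each result. The sketch below uses only the Python standard library (`platform` and `importlib.metadata`). The function name `environment_snapshot` and the example package list are illustrative assumptions, not part of VeriGauge or the paper.

```python
import json
import platform
from importlib import metadata

def environment_snapshot(packages):
    """Record interpreter, OS, and package versions to store next to experiment results."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # record the gap explicitly instead of skipping it
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "packages": versions,
    }

if __name__ == "__main__":
    # Package names here are examples only; list whatever the experiment imports.
    snap = environment_snapshot(["numpy", "torch"])
    print(json.dumps(snap, indent=2, sort_keys=True))
```

This sketch records a missing package as `null` in the JSON output rather than omitting it, so the saved record makes gaps such as unavailable dependencies visible.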
