Gahye Lee

Camera Splatting for Continuous View Optimization

Sep 19, 2025

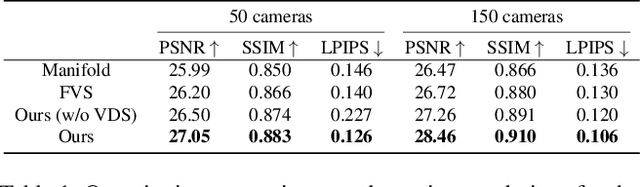

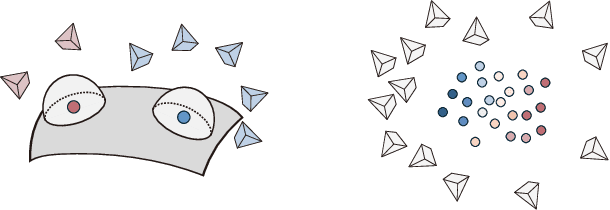

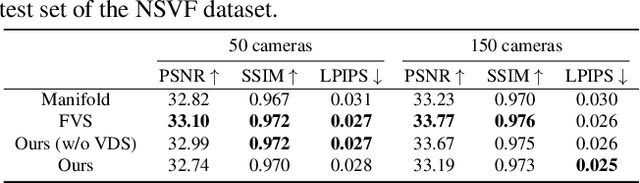

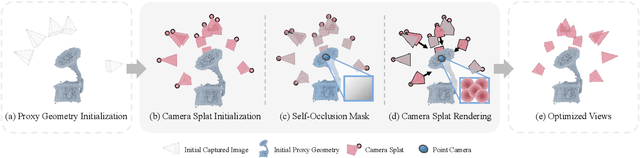

Abstract: We propose Camera Splatting, a novel view optimization framework for novel view synthesis. Each camera is modeled as a 3D Gaussian, referred to as a camera splat, and virtual cameras, termed point cameras, are placed at 3D points sampled near the surface to observe the distribution of camera splats. View optimization is achieved by continuously and differentiably refining the camera splats so that desirable target distributions are observed from the point cameras, in a manner similar to the original 3D Gaussian splatting. Compared to the Farthest View Sampling (FVS) approach, our optimized views demonstrate superior performance in capturing complex view-dependent phenomena, including intense metallic reflections and intricate textures such as text.
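For context on the FVS baseline named in the abstract, a farthest-point-style view selection can be sketched as follows. This is a minimal illustration and not the paper's implementation: the function name `farthest_view_sampling`, the use of Euclidean distance between camera centers, and the toy candidate positions are all assumptions, and the actual baseline may measure view distance differently.

```python
import math

def farthest_view_sampling(candidates, k, start=0):
    """Greedily pick k candidate camera centers that are mutually far apart.

    Assumption: "distance between views" is the Euclidean distance between
    camera centers. Returns the indices of the selected candidates.
    """
    if not 0 < k <= len(candidates):
        raise ValueError("k must be between 1 and the number of candidates")
    selected = [start]
    # min_dist[i] is the distance from candidate i to its nearest selected view.
    min_dist = [math.dist(c, candidates[start]) for c in candidates]
    while len(selected) < k:
        # Choose the candidate that is farthest from everything selected so far.
        nxt = max(range(len(candidates)), key=lambda i: min_dist[i])
        selected.append(nxt)
        for i, c in enumerate(candidates):
            min_dist[i] = min(min_dist[i], math.dist(c, candidates[nxt]))
    return selected

# Hypothetical camera centers on a line, for illustration only.
cameras = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
picked = farthest_view_sampling(cameras, k=3)
```

A selection like this is discrete and depends only on the geometry of the candidate set, which is the contrast the abstract draws with continuous, differentiable refinement of camera splats.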

Deep Polycuboid Fitting for Compact 3D Representation of Indoor Scenes

Mar 19, 2025

Abstract: This paper presents a novel framework for compactly representing a 3D indoor scene using a set of polycuboids through a deep learning-based fitting method. Indoor scenes mainly consist of man-made objects, such as furniture, which often exhibit rectilinear geometry. This property allows indoor scenes to be represented using combinations of polycuboids, providing a compact representation that benefits downstream applications like furniture rearrangement. Our framework takes a noisy point cloud as input and first detects six types of cuboid faces using a transformer network. Then, a graph neural network is used to validate the spatial relationships of the detected faces to form potential polycuboids. Finally, each polycuboid instance is reconstructed by forming a set of boxes based on the aggregated face labels. To train our networks, we introduce a synthetic dataset encompassing a diverse range of cuboid and polycuboid shapes that reflect the characteristics of indoor scenes. Our framework generalizes well to real-world indoor scene datasets, including Replica, ScanNet, and scenes captured with an iPhone. The versatility of our method is demonstrated through practical applications, such as virtual room tours and scene editing.
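To make the idea of a polycuboid as "a set of boxes" concrete, the sketch below models one as a union of axis-aligned boxes. The class names `Box` and `Polycuboid`, the axis-aligned min/max corner parameterization, and the L-shaped example are assumptions made for illustration; the abstract does not specify the paper's actual parameterization.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]

@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners (assumed form)."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))

@dataclass
class Polycuboid:
    """A shape represented as the union of several boxes."""
    boxes: List[Box]

    def contains(self, p: Point) -> bool:
        return any(b.contains(p) for b in self.boxes)

    def num_parameters(self) -> int:
        # Six numbers (two corners) per box in this assumed parameterization.
        return 6 * len(self.boxes)

# Hypothetical L-shaped piece of furniture built from two boxes.
l_shape = Polycuboid([
    Box((0.0, 0.0, 0.0), (2.0, 1.0, 1.0)),
    Box((0.0, 1.0, 0.0), (1.0, 2.0, 1.0)),
])
```

Under these assumptions the L-shape needs only twelve numbers, which hints at why such a representation can be far more compact than the noisy point cloud it is fitted to.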

Sub-clusters of Normal Data for Anomaly Detection

Nov 17, 2020

Abstract: Anomaly detection in data analysis is an interesting but still challenging research topic in real-world applications. As the dimensionality and complexity of data increase, effective anomaly characterization requires understanding the semantic context of the data. However, existing anomaly detection methods show limited performance on high-dimensional data such as ImageNet. Existing studies have evaluated their performance on low-dimensional, clean, and well-separated datasets such as MNIST and CIFAR-10. In this paper, we study anomaly detection with high-dimensional and complex normal data. Our observation is that, in general, anomalous data is defined by semantically explainable features, which can also be used to define semantic sub-clusters of normal data. We hypothesize that if there exists a reasonably good feature space that semantically separates sub-clusters of the given normal data, unseen anomalies can also be well distinguished from the normal data in that space. We propose to perform semantic clustering on the given normal data and train a classifier to learn the discriminative feature space in which anomaly detection is finally performed. Based on careful and extensive experimental evaluations with MNIST, CIFAR-10, and ImageNet with various combinations of normal and anomalous data, we show that our anomaly detection scheme outperforms state-of-the-art methods, especially on high-dimensional real-world images.
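The cluster-then-detect idea can be illustrated with a deliberately simplified sketch. Note the substitutions: the abstract describes semantic clustering followed by a trained classifier whose feature space is used for detection, whereas this example uses plain k-means on raw 2D points and scores anomalies by distance to the nearest sub-cluster centroid. The function names, the farthest-point initialization, and the toy data are all assumptions.

```python
import math

def kmeans(points, k, iters=20):
    """Plain k-means with a deterministic farthest-point initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            groups[nearest].append(p)
        # Recompute each centroid as the mean of its group (keep it if empty).
        centroids = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else centroids[j]
            for j, g in enumerate(groups)
        ]
    return centroids

def anomaly_score(x, centroids):
    """Stand-in score: distance to the nearest sub-cluster of normal data."""
    return min(math.dist(x, c) for c in centroids)

# Toy "normal" data with two obvious sub-clusters.
normal = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centers = kmeans(normal, k=2)
inlier = anomaly_score((1, 1), centers)
outlier = anomaly_score((5.5, 5.5), centers)
```

A point lying between the two sub-clusters receives a higher score than one inside a sub-cluster, which a single-cluster model of the normal data would be less able to express.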

Mode Penalty Generative Adversarial Network with adapted Auto-encoder

Nov 16, 2020

Abstract: Generative Adversarial Networks (GANs) are trained to generate sample images from a distribution of interest. To this end, the generator network of a GAN learns the implicit distribution of the real dataset from the discriminator's classification of candidate generated samples. Recently, various GANs have suggested novel ideas for stably optimizing their networks. However, in practice, they sometimes still represent only a narrow part of the true distribution or fail to converge. We assume this ill-posed problem comes from poor gradients from the discriminator's objective function, which easily trap the generator in a bad situation. To address this problem, we propose a mode penalty GAN combined with a pre-trained auto-encoder for explicit representation of generated and real data samples in the encoded space. In this space, we make the generator manifold follow the real manifold by finding all modes of the target distribution. In addition, a penalty for uncovered modes of the target distribution is given to the generator, which encourages it to find the overall target distribution. Through experimental evaluations, we demonstrate that applying the proposed method to GANs makes the generator's optimization more stable and its convergence faster.
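One way to picture a penalty for uncovered modes in an encoded space is a nearest-neighbor coverage term, sketched below. This is a hypothetical stand-in and not the paper's loss: the abstract does not give the penalty's formula, and the function name `coverage_penalty`, the Euclidean metric, and the toy 2D codes (standing in for auto-encoder outputs) are assumptions.

```python
import math

def coverage_penalty(real_codes, fake_codes):
    """Mean distance from each real code to its nearest generated code.

    The value is large when some region of the real data has no generated
    sample nearby, i.e. when a mode is left uncovered.
    """
    if not real_codes or not fake_codes:
        raise ValueError("both code sets must be non-empty")
    total = sum(min(math.dist(r, f) for f in fake_codes) for r in real_codes)
    return total / len(real_codes)

# Toy encoded samples: the real data has two modes.
real = [(0.0, 0.0), (10.0, 0.0)]
collapsed = [(0.0, 0.0), (0.1, 0.0)]   # generator covers only one mode
covering = [(0.0, 0.0), (10.0, 0.0)]   # generator covers both modes

penalty_collapsed = coverage_penalty(real, collapsed)
penalty_covering = coverage_penalty(real, covering)
```

In this toy setup a mode-collapsed generator is penalized while one that covers both modes is not, which is the kind of signal the abstract says is missing from the discriminator's objective alone.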
