Gabriel Sepulveda

A Behavioral Approach to Visual Navigation with Graph Localization Networks

Mar 01, 2019

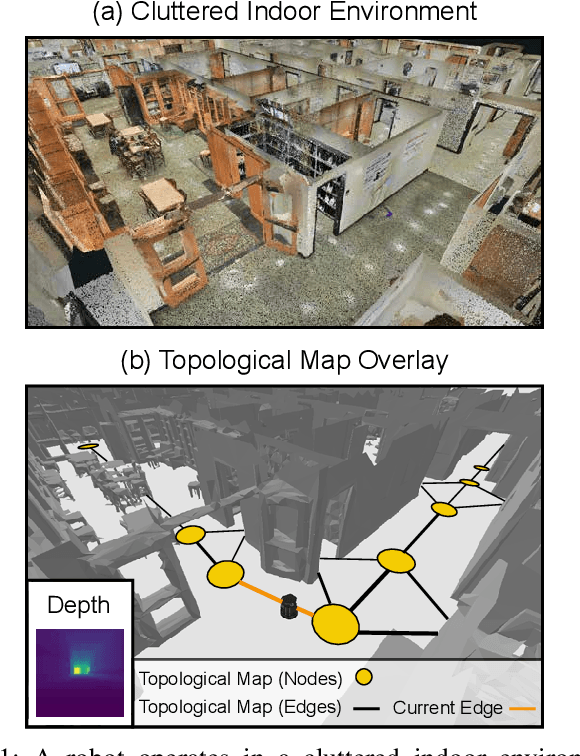

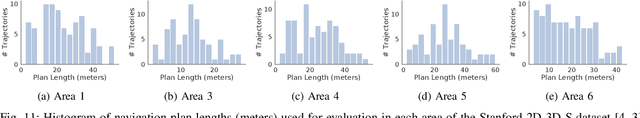

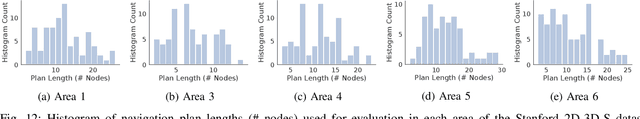

Abstract: Inspired by research in psychology, we introduce a behavioral approach for visual navigation using topological maps. Our goal is to enable a robot to navigate from one location to another, relying only on its visual input and the topological map of the environment. We propose using graph neural networks for localizing the agent in the map, and decompose the action space into primitive behaviors implemented as convolutional or recurrent neural networks. Using the Gibson simulator, we verify that our approach outperforms relevant baselines and is able to navigate in both seen and unseen environments.

A Deep Learning Based Behavioral Approach to Indoor Autonomous Navigation

Mar 12, 2018

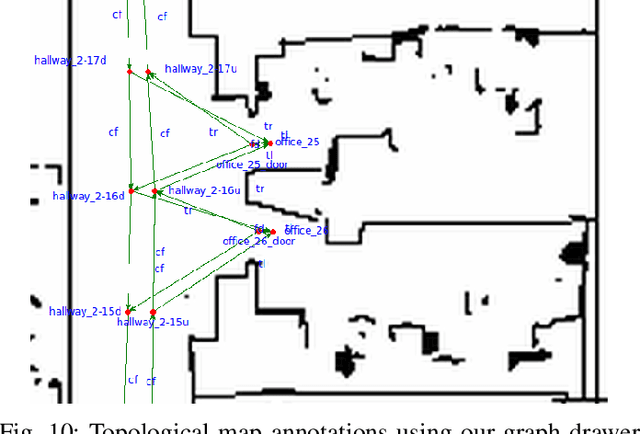

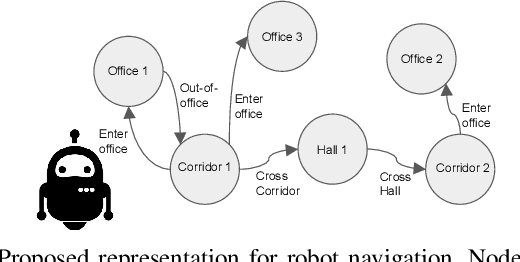

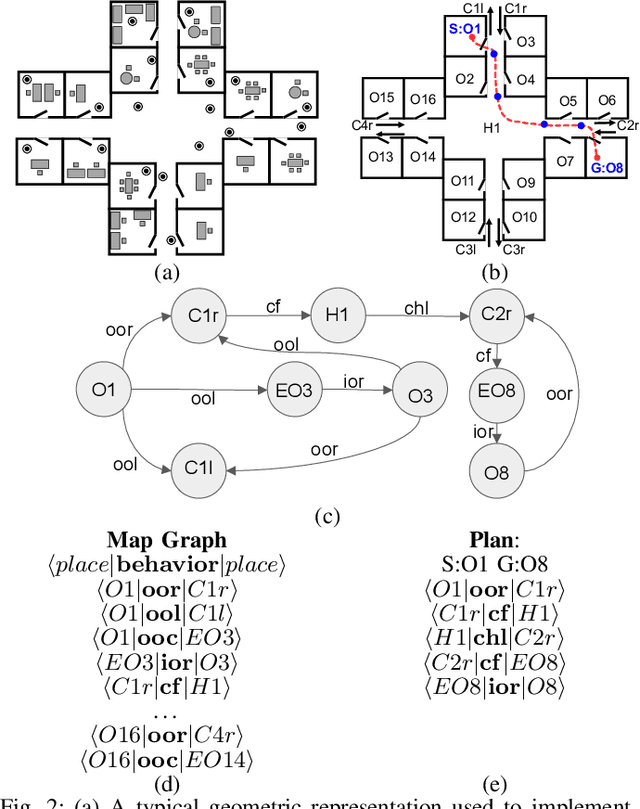

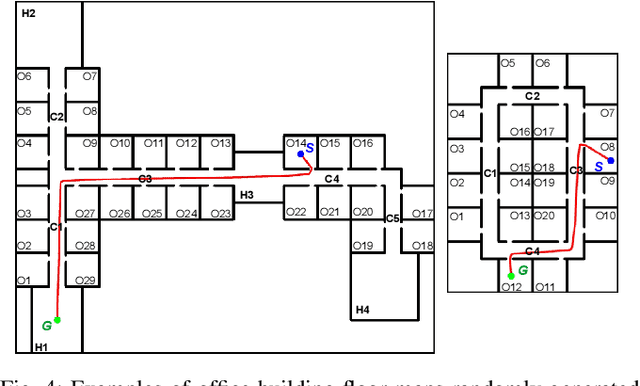

Abstract: We present a semantically rich graph representation for indoor robotic navigation. Our graph representation encodes semantic locations, such as offices or corridors, as nodes, and navigational behaviors, such as entering an office or crossing a corridor, as edges. In particular, our navigational behaviors operate directly from visual inputs to produce motor controls and are implemented with deep learning architectures. This enables the robot to avoid explicit computation of its precise location or the geometry of the environment, and enables navigation at a higher level of semantic abstraction. We evaluate the effectiveness of our representation by simulating navigation tasks in a large number of virtual environments. Our results show that, using a simple set of perceptual and navigational behaviors, the proposed approach can successfully guide the robot as it completes navigational missions such as going to a specific office. Furthermore, our implementation proves effective at controlling the selection and switching of behaviors.
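To make the graph representation concrete, here is a minimal sketch that assumes the map is stored as a plain adjacency list whose edges carry behavior labels. The node names, the `exit_office` behavior, and the `plan_behaviors` helper are hypothetical illustrations, not the authors' implementation. The breadth-first search stands in for whatever planner the paper uses, and in the described system each returned label would be executed by a learned visual controller rather than read off as a string.

```python
from collections import deque

# Hypothetical map: nodes are semantic locations, and each edge is a
# (behavior, destination) pair. All names are illustrative assumptions.
GRAPH = {
    "office_1": [("exit_office", "corridor_a")],
    "corridor_a": [("enter_office", "office_1"), ("cross_corridor", "corridor_b")],
    "corridor_b": [("cross_corridor", "corridor_a"), ("enter_office", "office_2")],
    "office_2": [("exit_office", "corridor_b")],
}


def plan_behaviors(graph, start, goal):
    """Return the shortest sequence of behavior labels from start to goal.

    Uses breadth-first search over the behavior-labeled edges and returns
    None when the goal cannot be reached.
    """
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, behaviors = queue.popleft()
        if node == goal:
            return behaviors
        for behavior, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, behaviors + [behavior]))
    return None


if __name__ == "__main__":
    print(plan_behaviors(GRAPH, "office_1", "office_2"))
```

The plan is a list of behaviors rather than a list of coordinates, which reflects the abstract's point that the robot does not need to compute its precise location or the geometry of the environment.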
