Gabriel Salomon

Image-based Automatic Dial Meter Reading in Unconstrained Scenarios

Jan 08, 2022

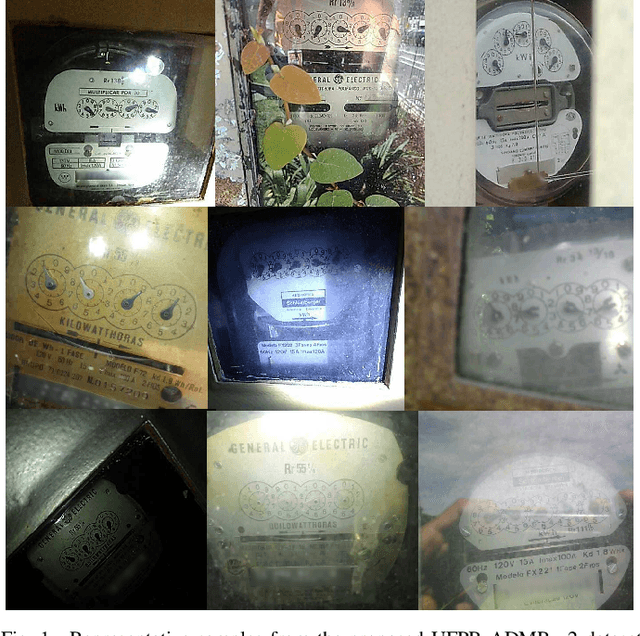

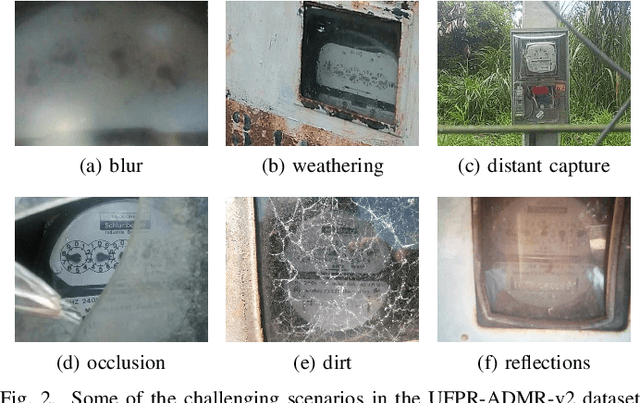

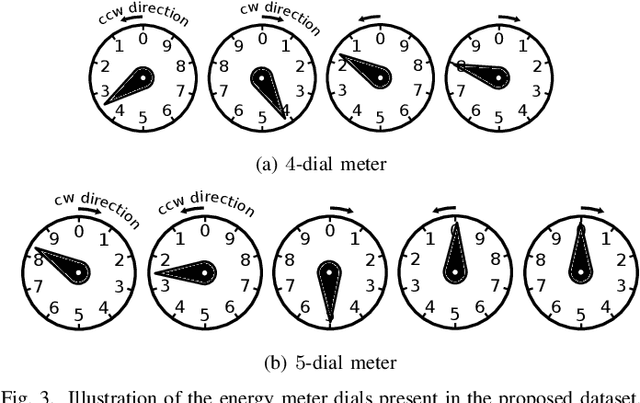

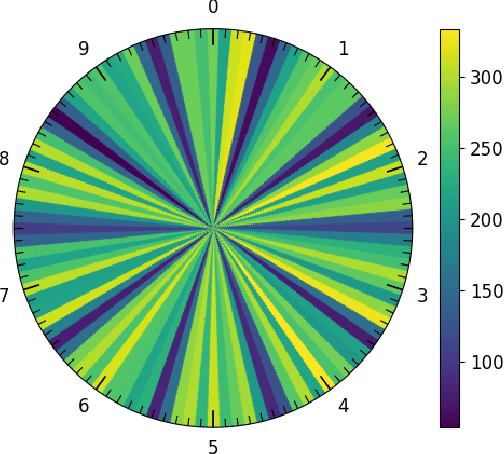

Abstract: The replacement of analog meters with smart meters is costly, laborious, and far from complete in developing countries. The Energy Company of Paraná (Copel), in Brazil, performs more than 4 million meter readings per month (almost entirely of non-smart devices), and we estimate that 850 thousand of them are from dial meters. An image-based automatic reading system can therefore reduce human errors, create a proof of reading, and enable customers to perform the reading themselves through a mobile application. We propose novel approaches for Automatic Dial Meter Reading (ADMR) and introduce a new dataset for ADMR in unconstrained scenarios, called UFPR-ADMR-v2. Our best-performing method combines YOLOv4 with a novel regression approach (AngReg) and explores several postprocessing techniques. Compared to previous works, it decreased the Mean Absolute Error (MAE) from 1,343 to 129 and achieved a meter recognition rate (MRR) of 98.90%, with an error tolerance of 1 kilowatt-hour (kWh).
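
The two metrics quoted in this abstract can be illustrated with a minimal sketch. It assumes that a reading counts as recognized when its absolute error is within the tolerance; the paper's exact definition may differ. The function names and the sample readings are hypothetical and are not taken from UFPR-ADMR-v2.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute difference between predicted and true readings (kWh)."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def meter_recognition_rate(predicted, actual, tolerance=0):
    """Fraction of meters whose predicted reading is within `tolerance` kWh.

    Assumption: a reading is 'recognized' when |predicted - actual| <= tolerance.
    """
    hits = sum(1 for p, a in zip(predicted, actual) if abs(p - a) <= tolerance)
    return hits / len(actual)


# Hypothetical readings, for illustration only.
predicted = [1234, 5678, 9000]
actual = [1234, 5679, 9100]

mae = mean_absolute_error(predicted, actual)
mrr_strict = meter_recognition_rate(predicted, actual, tolerance=0)
mrr_tolerant = meter_recognition_rate(predicted, actual, tolerance=1)
```

A single badly misread meter dominates the MAE while barely moving the recognition rate, which is one reason the two numbers are reported together.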

Open-set Face Recognition for Small Galleries Using Siamese Networks

May 14, 2021

Abstract: Face recognition has been one of the most relevant and explored fields of Biometrics. In real-world applications, face recognition methods usually must deal with scenarios where not all probe individuals were seen during the training phase (open-set scenarios). Therefore, open-set face recognition is a subject of increasing interest, as it deals with identifying individuals in a space where not all faces are known in advance. This is useful in several applications, such as access authentication, in which only a few individuals who have been previously enrolled in a gallery are allowed. The present work introduces a novel approach to open-set face recognition that focuses on small galleries and on enrollment detection rather than identity retrieval. A Siamese Network architecture is proposed to learn a model that detects whether a face probe is enrolled in the gallery, based on a verification-like approach. Promising results were achieved for small galleries in experiments carried out on the Pubfig83, FRGCv1, and LFW datasets. State-of-the-art methods such as HFCN and HPLS were outperformed on FRGCv1. In addition, a new evaluation protocol is introduced for experiments with small galleries on LFW.
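
Enrollment detection by verification can be sketched as follows: a probe is accepted if it matches at least one gallery entry closely enough. This is only an illustration of the general idea, not the paper's model. It assumes face embeddings are already available as plain vectors, and it substitutes cosine similarity with a fixed threshold for the learned Siamese comparison. The names `cosine_similarity`, `is_enrolled`, and the threshold value are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_enrolled(probe, gallery, threshold=0.8):
    """Return True if the probe matches any gallery embedding.

    Assumption: a match is a similarity of at least `threshold`; the result
    says only whether the probe is enrolled, not which identity it is.
    """
    return any(cosine_similarity(probe, g) >= threshold for g in gallery)


# Hypothetical 2-D embeddings, for illustration only.
gallery = [[1.0, 0.0], [0.0, 1.0]]
known_probe = [0.9, 0.1]    # close to the first gallery entry
unknown_probe = [1.0, 1.0]  # equally far from both entries

known_result = is_enrolled(known_probe, gallery)
unknown_result = is_enrolled(unknown_probe, gallery)
```

Because each probe is compared against every gallery entry, the cost grows linearly with gallery size, which is manageable for the small galleries the abstract targets.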

Deep Learning for Image-based Automatic Dial Meter Reading: Dataset and Baselines

May 08, 2020

Abstract: Smart meters enable remote and automatic electricity, water, and gas consumption reading and are being widely deployed in developed countries. Nonetheless, there is still a huge number of non-smart meters in operation. Image-based Automatic Meter Reading (AMR) addresses the reading of this type of meter. We estimate that the Energy Company of Paraná (Copel), in Brazil, performs more than 850,000 readings of dial meters per month. Those meters are the focus of this work. Our main contributions are: (i) a public real-world dial meter dataset (shared upon request) called UFPR-ADMR; (ii) a deep learning-based recognition baseline on the proposed dataset; and (iii) a detailed error analysis of the main issues present in AMR for dial meters. To the best of our knowledge, this is the first work to introduce deep learning approaches to multi-dial meter reading and to perform experiments on unconstrained images. We achieved a 100.0% F1-score on the dial detection stage with both Faster R-CNN and YOLO, while the recognition rates reached 93.6% for dials and 75.25% for meters using Faster R-CNN (ResNeXt-101).

Can Giraffes Become Birds? An Evaluation of Image-to-image Translation for Data Generation

Jan 10, 2020

Abstract: There is an increasing interest in image-to-image translation, with applications ranging from generating maps from satellite images to creating entire clothing images from only contours. In the present work, we investigate image-to-image translation using Generative Adversarial Networks (GANs) for generating new data, taking as a case study the morphing of giraffe images into bird images. Morphing a giraffe into a bird is a challenging task, as they have different scales, textures, and morphology. An unsupervised cross-domain translator entitled InstaGAN was trained on giraffes and birds, along with their respective masks, to learn translation between both domains. A dataset of synthetic bird images was generated by translating original giraffe images while preserving the original spatial arrangement and background. It is important to stress that the generated birds do not exist, being only the result of a latent representation learned by InstaGAN. Two subsets of common literature datasets were used for training the GAN and generating the translated images: COCO and Caltech-UCSD Birds 200-2011. To evaluate the realness and quality of the generated images and masks, qualitative and quantitative analyses were performed. For the quantitative analysis, a pre-trained Mask R-CNN was used for the detection and segmentation of birds on Pascal VOC, Caltech-UCSD Birds 200-2011, and our new dataset entitled FakeSet. The generated dataset achieved detection and segmentation results close to those of the real datasets, suggesting that the generated images are realistic enough to be detected and segmented by a state-of-the-art deep neural network.
