Gabriel Murray

Visual Analytics for Generative Transformer Models

Nov 21, 2023

Abstract: While transformer-based models have achieved state-of-the-art results in a variety of classification and generation tasks, their black-box nature makes them difficult to interpret. In this work, we present a novel visual analytical framework to support the analysis of transformer-based generative networks. In contrast to previous work, which has mainly focused on encoder-based models, our framework is one of the first dedicated to supporting the analysis of transformer-based encoder-decoder models and decoder-only models for generative and classification tasks. Hence, we offer an intuitive overview that allows the user to explore different facets of the model through interactive visualization. To demonstrate the feasibility and usefulness of our framework, we present three detailed case studies based on real-world NLP research problems.

Mixture-of-Linguistic-Experts Adapters for Improving and Interpreting Pre-trained Language Models

Oct 24, 2023

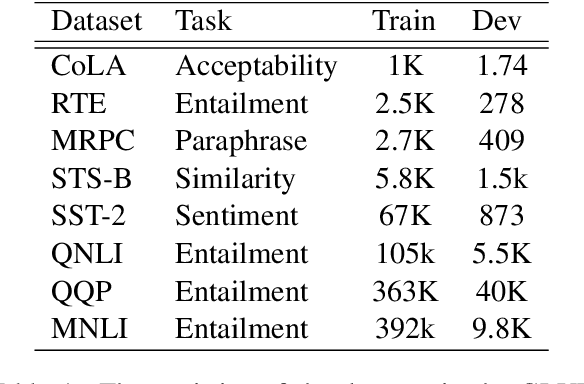

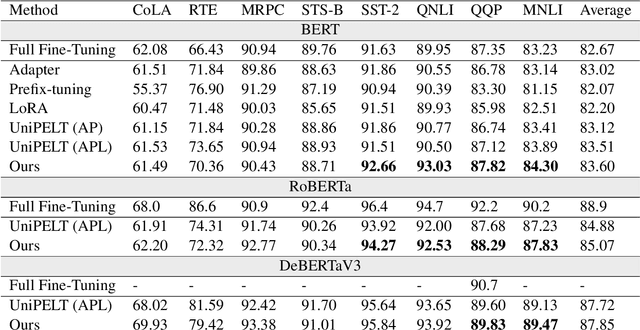

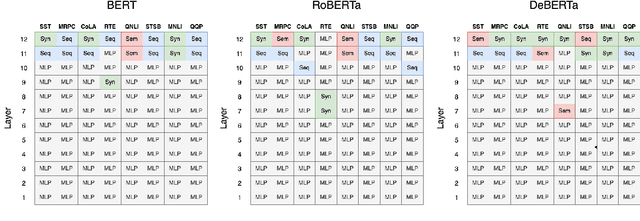

Abstract: In this work, we propose a method that combines two popular research areas by injecting linguistic structures into pre-trained language models in the parameter-efficient fine-tuning (PEFT) setting. In our approach, parallel adapter modules encoding different linguistic structures are combined using a novel Mixture-of-Linguistic-Experts architecture, where Gumbel-Softmax gates are used to determine the importance of these modules at each layer of the model. To reduce the number of parameters, we first train the model for a fixed small number of steps before pruning the experts based on their importance scores. Our experimental results with three different pre-trained models show that our approach can outperform state-of-the-art PEFT methods with a comparable number of parameters. We also provide further analysis of the experts selected by each model at each layer to offer insights for future studies.
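
To make the gating idea concrete, here is a minimal sketch of a Gumbel-Softmax gate that weights parallel expert outputs, followed by importance-based pruning. It is an illustration under assumptions, not the paper's implementation: the function names (`gumbel_softmax`, `mix_experts`, `prune_experts`), the temperature value, and the use of plain Python lists in place of tensors are all hypothetical.

```python
import math
import random


def gumbel_softmax(logits, temperature=1.0, rng=None):
    """Sample relaxed (soft) one-hot gate weights from unnormalized logits."""
    rng = rng or random.Random()
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise is -log(-log(U)) with U ~ Uniform(0, 1).
        # U is clipped away from 0 and 1 to keep the logs finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax over the perturbed logits.
    peak = max(noisy)
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def mix_experts(expert_outputs, gate_weights):
    """Combine expert output vectors as a gate-weighted sum."""
    dim = len(expert_outputs[0])
    return [
        sum(w * out[i] for w, out in zip(gate_weights, expert_outputs))
        for i in range(dim)
    ]


def prune_experts(importance, keep):
    """Return the indices of the `keep` experts with the highest importance."""
    ranked = sorted(range(len(importance)), key=lambda i: importance[i], reverse=True)
    return sorted(ranked[:keep])


# Hypothetical usage: three experts at one layer, each producing a 2-d output.
gate = gumbel_softmax([0.2, 1.5, -0.3], temperature=0.5, rng=random.Random(0))
mixed = mix_experts([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], gate)
kept = prune_experts(gate, keep=2)
```

Lower temperatures push the sampled weights closer to a one-hot choice, which is what makes the gate values usable as a rough per-layer importance signal in this sketch.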

Diversity-Aware Coherence Loss for Improving Neural Topic Models

May 26, 2023

Abstract: The standard approach for neural topic modeling uses a variational autoencoder (VAE) framework that jointly minimizes the KL divergence between the estimated posterior and prior, in addition to the reconstruction loss. Since neural topic models are trained by recreating individual input documents, they do not explicitly capture the coherence between topic words on the corpus level. In this work, we propose a novel diversity-aware coherence loss that encourages the model to learn corpus-level coherence scores while maintaining a high diversity between topics. Experimental results on multiple datasets show that our method significantly improves the performance of neural topic models without requiring any pretraining or additional parameters.
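
The baseline VAE objective mentioned in the abstract (reconstruction loss plus KL divergence) can be sketched as follows. This is a hedged illustration only: it assumes a diagonal Gaussian posterior with a standard normal prior and a bag-of-words likelihood, which may differ from the prior used in the paper, and it does not attempt the proposed diversity-aware coherence loss, whose form the abstract does not give. All function names are hypothetical.

```python
import math


def gaussian_kl(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var)
    )


def reconstruction_loss(bow_counts, word_probs):
    """Negative log-likelihood of bag-of-words counts under predicted word probabilities."""
    return -sum(
        count * math.log(prob)
        for count, prob in zip(bow_counts, word_probs)
        if count > 0
    )


def vae_loss(bow_counts, word_probs, mu, log_var):
    """Per-document objective: reconstruction term plus KL term."""
    return reconstruction_loss(bow_counts, word_probs) + gaussian_kl(mu, log_var)


# Hypothetical usage: a 3-word vocabulary and a 2-d latent topic vector.
loss = vae_loss(
    bow_counts=[2, 0, 1],
    word_probs=[0.5, 0.2, 0.3],
    mu=[0.1, -0.2],
    log_var=[0.0, 0.0],
)
```

Both terms are computed per document, which is consistent with the abstract's point that this objective alone carries no explicit corpus-level coherence signal.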

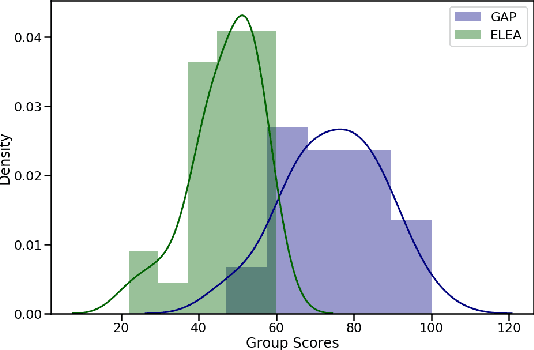

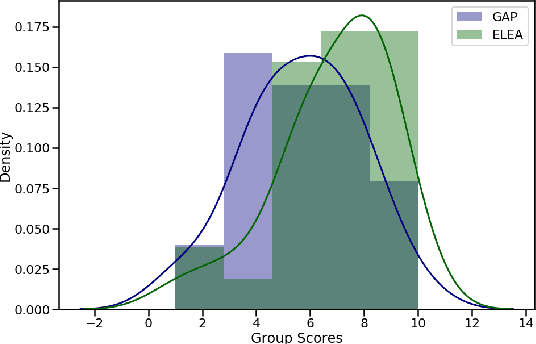

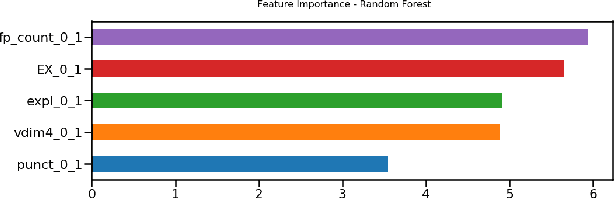

Analyzing Verbal and Nonverbal Features for Predicting Group Performance

Jul 03, 2019

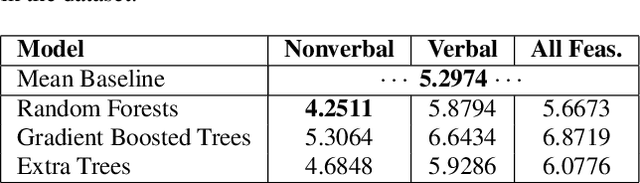

Abstract: This work analyzes the efficacy of verbal and nonverbal features of group conversation for the task of automatic prediction of group task performance. We describe a new publicly available survival task dataset that was collected and annotated to facilitate this prediction task. In these experiments, the new dataset is merged with an existing survival task dataset, allowing us to compare feature sets on a much larger amount of data than has been used in recent related work. This work is also distinct from related research on social signal processing (SSP) in that we compare verbal and nonverbal features, whereas SSP is almost exclusively concerned with nonverbal aspects of social interaction. A key finding is that nonverbal features from the speech signal are extremely effective for this task, even on their own. However, the most effective individual features are verbal features, and we highlight the most important ones.

Stoic Ethics for Artificial Agents

Mar 28, 2017

Abstract: We present a position paper advocating the notion that Stoic philosophy and ethics can inform the development of ethical A.I. systems. This is in sharp contrast to most work on building ethical A.I., which has focused on Utilitarian or Deontological ethical theories. We relate ethical A.I. to several core Stoic notions, including the dichotomy of control, the four cardinal virtues, the ideal Sage, Stoic practices, and Stoic perspectives on emotion or affect. More generally, we put forward an ethical view of A.I. that focuses more on the internal states of the artificial agent than on its external actions. We provide examples relating to near-term A.I. systems as well as hypothetical superintelligent agents.
