Gabriel Hartmann

Meta-Reinforcement Learning Using Model Parameters

Oct 27, 2022

Abstract: In meta-reinforcement learning, an agent is trained in multiple different environments and attempts to learn a meta-policy that can efficiently adapt to a new environment. This paper presents RAMP, a Reinforcement learning Agent using Model Parameters, which builds on the idea that a neural network trained to predict environment dynamics encapsulates information about the environment. RAMP is constructed in two phases: in the first phase, a multi-environment parameterized dynamic model is learned. In the second phase, the parameters of this dynamic model are used as context for the multi-environment policy of a model-free reinforcement learning agent.
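The two-phase idea can be illustrated with a deliberately simple stand-in. The sketch below is a hypothetical illustration, not RAMP's architecture. It swaps the neural dynamics model for a linear one, `ds ≈ X @ W + C[env]`, in which `W` is shared across environments and each environment contributes its own parameter vector `C[env]`. Phase one fits both jointly by least squares. For a new environment, only its own parameters are fitted while `W` stays fixed, and those fitted parameters are concatenated with the state to form the policy's context-conditioned input. The function names `fit_shared_model`, `adapt_context`, and `policy_input` are invented for this example.

```python
import numpy as np

def fit_shared_model(X, dS, env_ids, n_envs):
    """Phase 1 (simplified): jointly fit dS ~ X @ W + C[env_id] by least squares.

    X: (N, d_x) state-action inputs; dS: (N, d_s) observed state changes;
    env_ids: (N,) integer environment labels in [0, n_envs).
    Returns shared weights W (d_x, d_s) and per-environment parameters C (n_envs, d_s).
    Hypothetical linear stand-in for a neural dynamics model.
    """
    onehot = np.eye(n_envs)[env_ids]            # (N, n_envs) environment indicators
    design = np.hstack([X, onehot])             # shared columns followed by env columns
    coef, *_ = np.linalg.lstsq(design, dS, rcond=None)
    d_x = X.shape[1]
    return coef[:d_x], coef[d_x:]

def adapt_context(W, X_new, dS_new):
    """Fit only the environment-specific parameters of a new environment, with W held fixed.

    For a constant offset, the least-squares solution is the mean residual.
    """
    residual = dS_new - X_new @ W
    return residual.mean(axis=0)

def policy_input(state, context):
    """Phase 2 (simplified): the policy observes the state concatenated with the model parameters."""
    return np.concatenate([state, context])
```

In this toy version, the learned parameters act as a compact identifier of each environment's dynamics. That mirrors, in a much simpler form, the abstract's claim that the dynamics model's parameters encapsulate the environment information.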

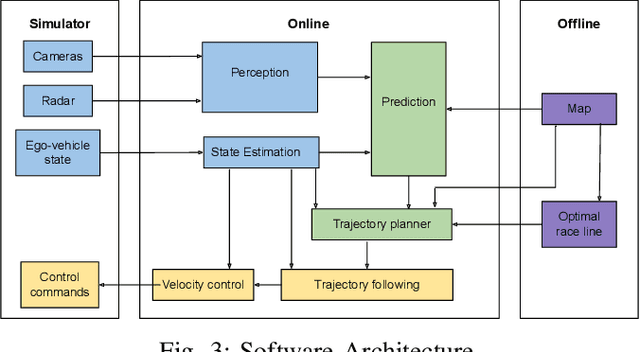

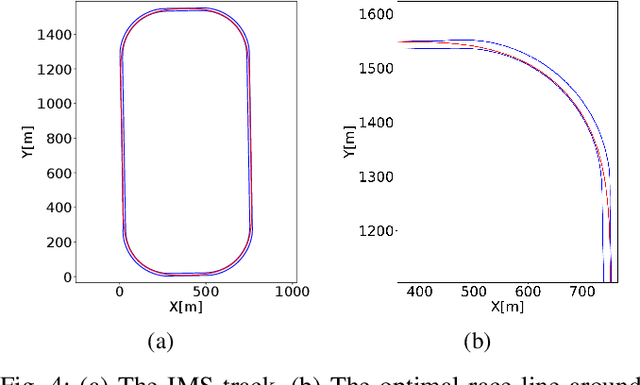

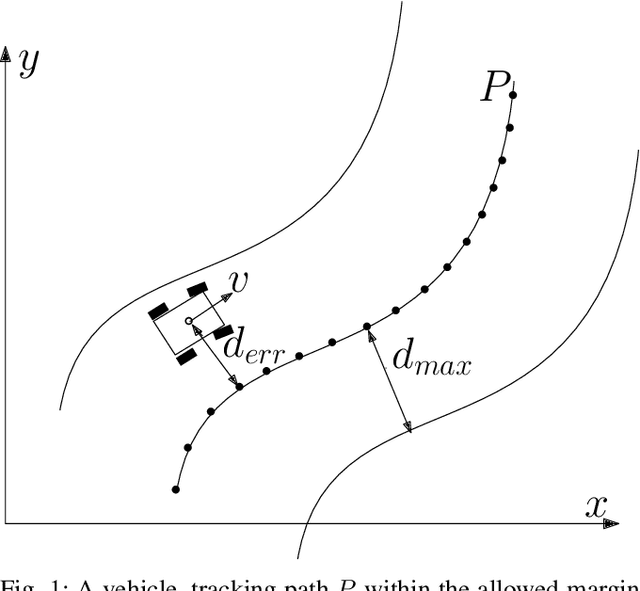

Autonomous Head-to-Head Racing in the Indy Autonomous Challenge Simulation Race

Sep 12, 2021

Abstract: This paper describes Ariel Team's autonomous racing controller for the Indy Autonomous Challenge (IAC) simulation race \cite{INDY}. IAC is the first multi-vehicle autonomous head-to-head competition, reaching speeds of 300 km/h along an oval track modeled after the Indianapolis Motor Speedway (IMS). Our racing controller attempts to maximize progress along the track while avoiding collisions with opponent vehicles and obeying the race rules. To this end, the racing controller first computes a race line offline. Then, online, it repeatedly computes a small set of dynamically feasible maneuver candidates and tests each one for collision with the opponent vehicles. Finally, it selects the maneuver that maximizes progress along the track, taking the race line into account. Both the maneuver candidates and the predicted trajectories of the opponent vehicles are approximated using a point mass model. Despite its simplicity, the controller drove competitively and did not collide with any opponent vehicle in the IAC final simulation race.
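The online loop described above follows a sample, check, and select pattern. The following is a minimal sketch under several assumptions that are not taken from the paper:

- Motion is expressed in track coordinates (progress `s`, lateral offset `d`).
- The ego vehicle is a point mass with constant longitudinal acceleration and a linear lateral shift.
- Opponents are predicted at constant speed in their current lane.
- Collision means coming within a fixed `safety` distance at a shared time step.
- The race line enters only as a penalty on the final lateral offset.

All numeric values (accelerations, offsets, horizon, `v_max`, `safety`, `w_line`) and function names are hypothetical.

```python
import numpy as np
from itertools import product

def rollout(s0, d0, v0, accel, d_target, horizon=2.0, dt=0.1, v_max=83.0):
    """Point-mass rollout in track coordinates (s = progress, d = lateral offset).

    Longitudinal: constant acceleration, speed clipped to [0, v_max] (83 m/s ~ 300 km/h).
    Lateral: linear shift from d0 to d_target over the horizon (a simplification).
    """
    n = int(round(horizon / dt))
    s, v = s0, v0
    traj = []
    for k in range(1, n + 1):
        v = float(np.clip(v + accel * dt, 0.0, v_max))
        s += v * dt
        d = d0 + (d_target - d0) * k / n
        traj.append((s, d))
    return np.array(traj)

def predict_opponent(s0, d0, v0, horizon=2.0, dt=0.1):
    """Constant-speed, constant-lane point-mass prediction of an opponent."""
    n = int(round(horizon / dt))
    t = dt * np.arange(1, n + 1)
    return np.column_stack([s0 + v0 * t, np.full(n, d0)])

def select_maneuver(ego, opponents, race_line_d, accels=(-8.0, 0.0, 4.0),
                    offsets=(-4.0, 0.0, 4.0), safety=5.0, w_line=0.5):
    """Return the collision-free (accel, d_target) candidate with the best score.

    Score = final progress minus a penalty for ending away from the race line.
    Falls back to maximum braking in the current lane if every candidate collides.
    """
    s0, d0, v0 = ego
    preds = [predict_opponent(*opp) for opp in opponents]
    best, best_score = None, -np.inf
    for accel, off in product(accels, offsets):
        d_target = d0 + off
        traj = rollout(s0, d0, v0, accel, d_target)
        # Compare ego and opponent positions at the same time steps.
        if any(np.min(np.linalg.norm(traj - p, axis=1)) < safety for p in preds):
            continue
        score = traj[-1, 0] - w_line * abs(d_target - race_line_d)
        if score > best_score:
            best, best_score = (accel, d_target), score
    return best if best is not None else (min(accels), d0)
```

Consider an ego car at 50 m/s approaching a 40 m/s opponent 20 m ahead in the same lane. With these illustrative limits, every accelerating or passing candidate comes within the safety distance, so the selector picks in-lane braking, `(-8.0, 0.0)`. Using a small discrete candidate set keeps the per-cycle cost predictable, which is one plausible reason a controller of this kind can run repeatedly online.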

Deep Reinforcement Learning for Time Optimal Velocity Control using Prior Knowledge

Mar 12, 2019

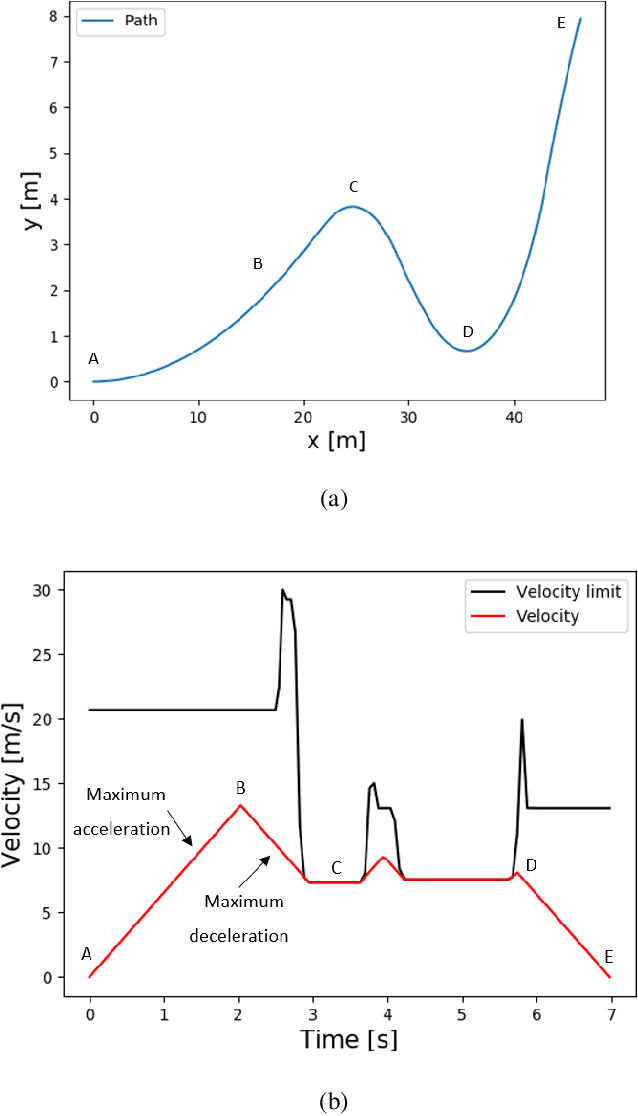

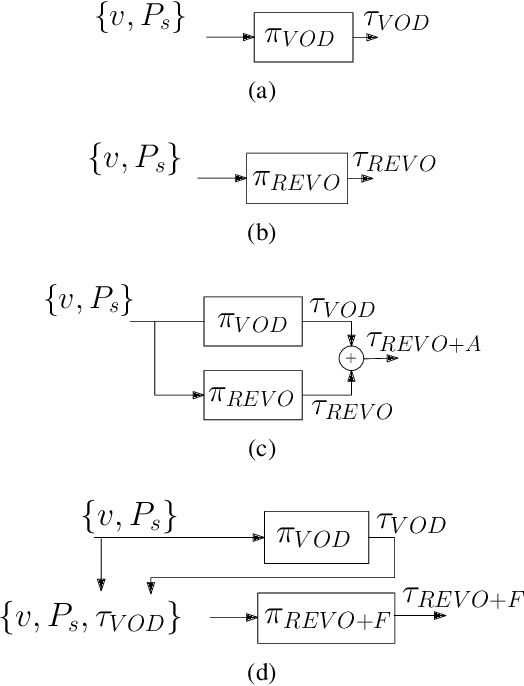

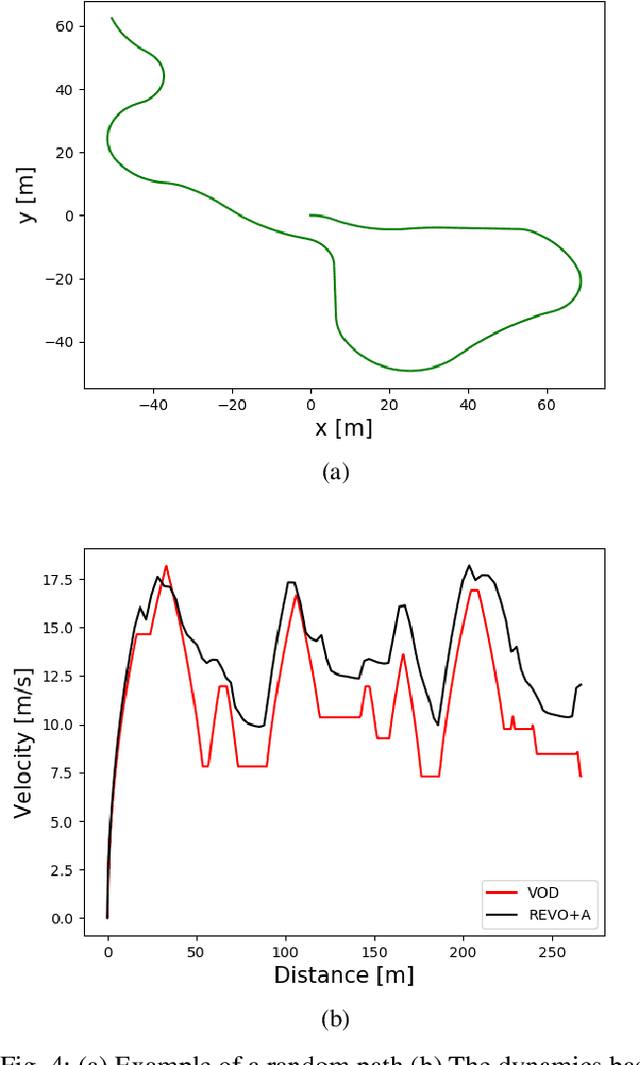

Abstract: While autonomous navigation has recently attracted great interest in the field of reinforcement learning, only a few works in this field have focused on the time optimal velocity control problem, i.e. controlling a vehicle such that it travels at the maximal speed without becoming dynamically unstable. Achieving maximal speed is important in many situations, such as emergency vehicles traveling at high speeds to their destinations, and regular vehicles executing emergency maneuvers to avoid imminent collisions. Time optimal velocity control can be solved numerically using existing methods based on optimal control and vehicle dynamics. In this paper, we use deep reinforcement learning to generate the time optimal velocity control. Furthermore, we use the numerical solution to further improve the performance of the reinforcement learner. We show that the reinforcement learner outperforms the numerically derived solution, and that the hybrid approach (combining learning with the numerical solution) speeds up the learning process.
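For context on the numerical baseline, a common textbook way to compute a time optimal velocity profile along a fixed path is a forward-backward pass. First, speed is capped by the lateral acceleration limit, v ≤ sqrt(μg/|κ|). A forward pass then enforces the acceleration limit, and a backward pass enforces the braking limit. The sketch below shows that generic technique under stated simplifications. It is not necessarily the numerical method used in the paper:

- Lateral and longitudinal limits are treated independently rather than as a combined friction circle.
- The vehicle starts from rest.
- A hypothetical top speed `v_cap` is applied.

The abstract does not specify how the hybrid approach feeds this kind of solution to the learner, so that part is not modeled here.

```python
import numpy as np

def time_optimal_profile(kappa, ds, mu=1.0, g=9.81, v_cap=30.0):
    """Velocity profile along a fixed path via forward-backward passes (simplified).

    kappa: curvature at each of N equally spaced path points (1/m).
    ds: spacing between points (m). mu, g: friction coefficient and gravity.
    Assumes independent lateral/longitudinal limits of mu*g and a start from rest.
    """
    kappa = np.asarray(kappa, dtype=float)
    a_max = mu * g
    with np.errstate(divide="ignore"):
        v_lat = np.sqrt(a_max / np.abs(kappa))   # infinite on straight segments
    v = np.minimum(v_lat, v_cap)
    v[0] = 0.0                                    # start from rest
    for i in range(1, len(v)):                    # forward pass: acceleration limit
        v[i] = min(v[i], np.sqrt(v[i - 1] ** 2 + 2 * a_max * ds))
    for i in range(len(v) - 2, -1, -1):           # backward pass: braking limit
        v[i] = min(v[i], np.sqrt(v[i + 1] ** 2 + 2 * a_max * ds))
    return v

def travel_time(v, ds):
    """Time to traverse the path, assuming piecewise-constant acceleration per segment."""
    return float(np.sum(2.0 * ds / (v[:-1] + v[1:])))
```

On a constant-curvature path with κ = 0.1 1/m and μ = 1, the profile levels off at sqrt(9.81 / 0.1) ≈ 9.9 m/s after a short acceleration phase. A profile like this could serve as the kind of numerically derived reference that the abstract compares the learner against.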
