Furkan Pala

UnifiedFL: A Dynamic Unified Learning Framework for Equitable Federation

Oct 30, 2025

Abstract: Federated learning (FL) has emerged as a key paradigm for collaborative model training across multiple clients without sharing raw data, enabling privacy-preserving applications in areas such as radiology and pathology. However, works on collaborative training across clients with fundamentally different neural architectures and non-identically distributed datasets remain scarce. Existing FL frameworks face several limitations. Despite claiming to support architectural heterogeneity, most recent FL methods only tolerate variants within a single model family (e.g., shallower, deeper, or wider CNNs), still presuming a shared global architecture and failing to accommodate federations where clients deploy fundamentally different network types (e.g., CNNs, GNNs, MLPs). Moreover, existing approaches often address only statistical heterogeneity while overlooking the domain-fracture problem, where each client's data distribution differs markedly from that faced at testing time, undermining model generalizability. When clients use different architectures, have non-identically distributed data, and encounter distinct test domains, current methods perform poorly. To address these challenges, we propose UnifiedFL, a dynamic federated learning framework that represents heterogeneous local networks as nodes and edges in a directed model graph optimized by a shared graph neural network (GNN). UnifiedFL introduces (i) a common GNN to parameterize all architectures, (ii) distance-driven clustering via Euclidean distances between clients' parameters, and (iii) a two-tier aggregation policy balancing convergence and diversity. Experiments on MedMNIST classification and hippocampus segmentation benchmarks demonstrate UnifiedFL's superior performance. Code and data: https://github.com/basiralab/UnifiedFL
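As a rough illustration of point (ii), the sketch below groups clients whose flattened parameter vectors lie close together in Euclidean distance. The abstract does not say which clustering procedure UnifiedFL uses, so the threshold-based grouping, the function names `euclidean` and `cluster_by_distance`, and the toy parameter vectors are all assumptions made for this example, not the authors' implementation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length parameter vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cluster_by_distance(params, threshold):
    """Group clients by parameter distance (hypothetical helper).

    Two clients share a cluster if they are linked by a chain of clients
    whose pairwise distances are all <= threshold (single-linkage style).
    Returns one integer cluster label per client.
    """
    n = len(params)
    labels = [-1] * n  # -1 marks a client not yet assigned to a cluster
    next_label = 0
    for i in range(n):
        if labels[i] != -1:
            continue
        labels[i] = next_label
        stack = [i]
        # Flood-fill: pull in every unassigned client within the threshold.
        while stack:
            u = stack.pop()
            for v in range(n):
                if labels[v] == -1 and euclidean(params[u], params[v]) <= threshold:
                    labels[v] = next_label
                    stack.append(v)
        next_label += 1
    return labels


# Toy, made-up parameter vectors for four clients.
client_params = [[0.0, 0.1], [0.1, 0.0], [5.0, 5.1], [5.2, 5.0]]
labels = cluster_by_distance(client_params, threshold=1.0)
print(labels)
```

In this toy setting the first two clients and the last two clients end up in separate groups; a federation could then aggregate within each group before aggregating across groups, which is one plausible reading of a two-tier policy.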

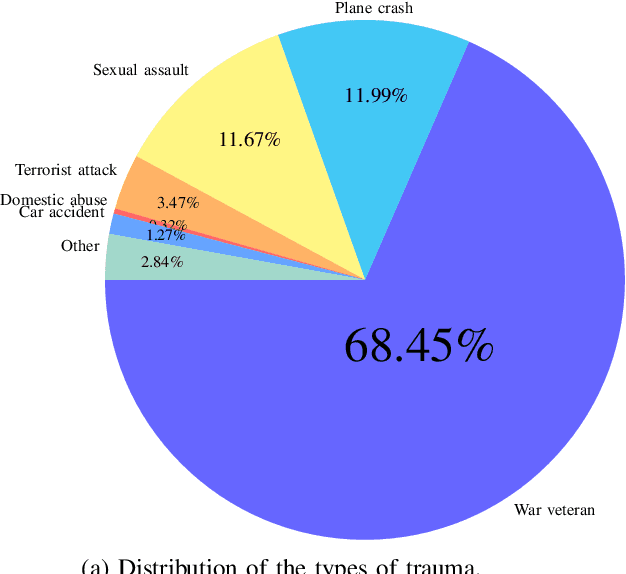

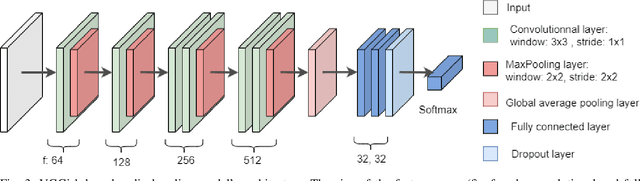

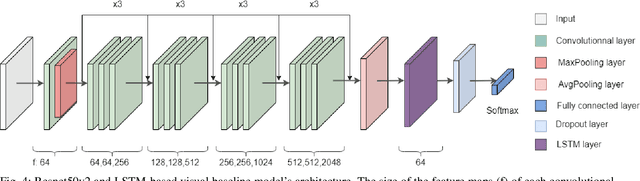

PTSD in the Wild: A Video Database for Studying Post-Traumatic Stress Disorder Recognition in Unconstrained Environments

Sep 28, 2022

Abstract: Post-traumatic stress disorder (PTSD) is a chronic and debilitating mental condition that develops in response to catastrophic life events, such as military combat, sexual assault, and natural disasters. PTSD is characterized by flashbacks of past traumatic events, intrusive thoughts, nightmares, hypervigilance, and sleep disturbance, all of which affect a person's life and lead to considerable social, occupational, and interpersonal dysfunction. PTSD is diagnosed by medical professionals using a self-assessment questionnaire of PTSD symptoms as defined in the Diagnostic and Statistical Manual of Mental Disorders (DSM). In this paper, and for the first time, we collected, annotated, and prepared for public distribution a new video database for automatic PTSD diagnosis, called the PTSD in the wild dataset. The database exhibits "natural" and large variability in acquisition conditions, with different pose, facial expression, lighting, focus, resolution, age, gender, race, occlusions, and background. In addition to describing the details of the dataset collection, we provide a benchmark for evaluating computer vision and machine learning based approaches on the PTSD in the wild dataset. We also propose and evaluate a deep learning based approach for PTSD detection with respect to the given benchmark. The proposed approach shows very promising results. Interested researchers can download a copy of the PTSD in the wild dataset from: http://www.lissi.fr/PTSD-Dataset/

Predicting Brain Multigraph Population From a Single Graph Template for Boosting One-Shot Classification

Sep 13, 2022

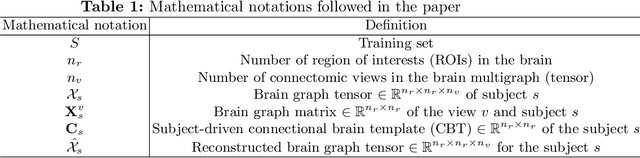

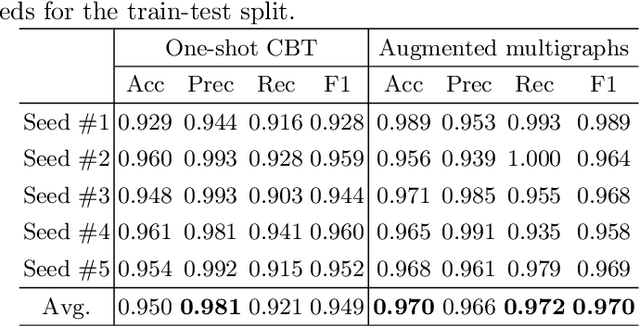

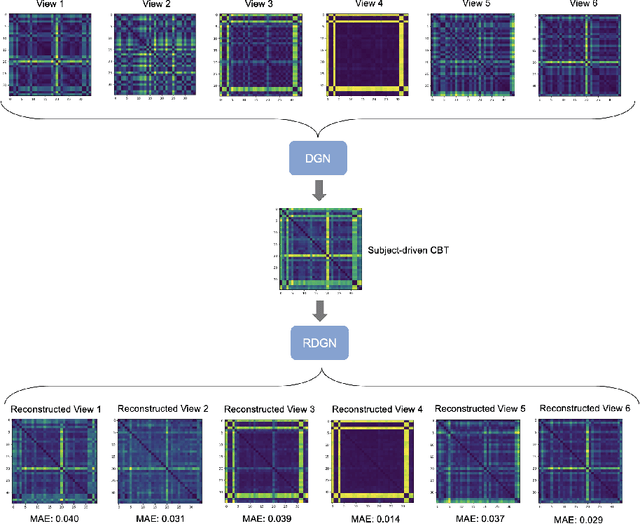

Abstract: A central challenge in training one-shot learning models is the limited representativeness of the available shots of the data space. Particularly in the field of network neuroscience, where the brain is represented as a graph, such models may lead to low performance when classifying brain states (e.g., typical vs. autistic). To cope with this, most existing works involve a data augmentation step to increase the size of the training set, its diversity, and its representativeness. Though effective, such augmentation methods are limited to generating samples with the same size as the input shots (e.g., generating brain connectivity matrices from a single shot matrix). To the best of our knowledge, the problem of generating brain multigraphs capturing multiple types of connectivity between pairs of nodes (i.e., anatomical regions) from a single brain graph remains unsolved. In this paper, we propose, for the first time, a hybrid graph neural network (GNN) architecture, namely Multigraph Generator Network or briefly MultigraphGNet, comprising two subnetworks: (1) a many-to-one GNN which integrates an input population of brain multigraphs into a single template graph, namely a connectional brain template (CBT), and (2) a reverse one-to-many U-Net network which takes the learned CBT in each training step and outputs the reconstructed input multigraph population. Both networks are trained in an end-to-end way using a cyclic loss. Experimental results demonstrate that our MultigraphGNet boosts the performance of an independent classifier when trained on the augmented brain multigraphs in comparison with training on a single CBT from each class. We hope that our framework can shed some light on future research on multigraph augmentation from a single graph. Our MultigraphGNet source code is available at https://github.com/basiralab/MultigraphGNet.
