Francisco Jáñez Martino

Classifying Suspicious Content in Tor Darknet

May 21, 2020

Abstract: One of the tasks of law enforcement agencies is to find evidence of criminal activity in the Darknet. However, manually visiting thousands of domains to locate visual information depicting illegal acts requires a considerable amount of time and resources. Furthermore, the background of the images can pose a challenge when performing classification. To solve this problem, in this paper we explore the automatic classification of Tor Darknet images using Semantic Attention Keypoint Filtering (SAKF), a strategy that filters out, at the pixel level, non-significant features that do not belong to the object of interest by combining saliency maps with Bag of Visual Words (BoVW). We evaluated SAKF on a custom Tor image dataset against CNN features (MobileNet v1 and ResNet50) and BoVW using dense SIFT descriptors, achieving 87.98% accuracy and outperforming all other approaches.
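The core idea of filtering keypoints with a saliency map can be illustrated with a minimal sketch. This is not the authors' SAKF implementation: the function name `filter_keypoints`, the threshold of 0.5, and the toy saliency values are all assumptions made for illustration. It only shows how keypoints that fall on low-saliency (background) pixels might be discarded before building a BoVW vocabulary.

```python
from typing import List, Sequence, Tuple

Keypoint = Tuple[int, int]  # (x, y) pixel coordinates


def filter_keypoints(
    keypoints: Sequence[Keypoint],
    saliency: Sequence[Sequence[float]],
    threshold: float = 0.5,  # assumed cut-off, not taken from the paper
) -> List[Keypoint]:
    """Keep only keypoints located on pixels whose saliency >= threshold."""
    height = len(saliency)
    width = len(saliency[0]) if height else 0
    kept = []
    for x, y in keypoints:
        inside = 0 <= x < width and 0 <= y < height
        if inside and saliency[y][x] >= threshold:
            kept.append((x, y))
    return kept


# Toy 4x4 saliency map: the salient object occupies the centre.
saliency_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.1, 0.9, 0.8, 0.1],
    [0.0, 0.7, 0.9, 0.2],
    [0.0, 0.1, 0.2, 0.1],
]
keypoints = [(0, 0), (1, 1), (2, 2), (3, 3), (2, 1)]

salient = filter_keypoints(keypoints, saliency_map)
print(salient)
```

In a full pipeline, the descriptors attached to the surviving keypoints would then be quantized into visual words; that step is omitted here.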

Perceptual Hashing applied to Tor domains recognition

May 21, 2020

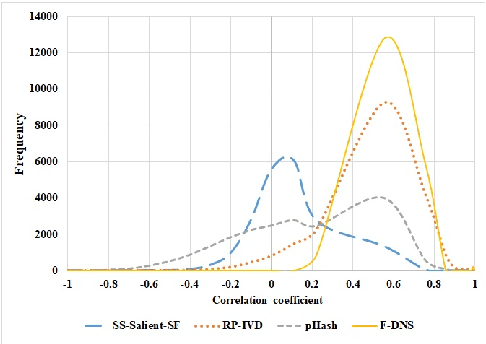

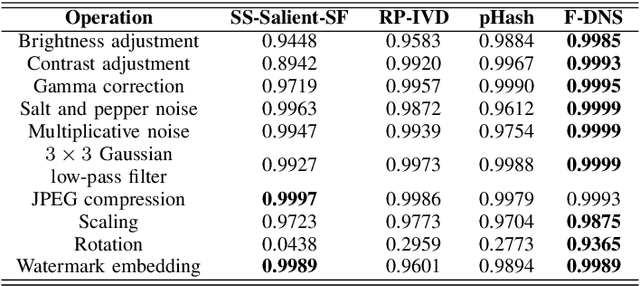

Abstract: The Tor darknet hosts different types of illegal content, which are monitored by cybersecurity agencies. However, manually classifying Tor content can be slow and error-prone. To support this task, we introduce Frequency-Dominant Neighborhood Structure (F-DNS), a new perceptual hashing method for automatically classifying domains by their screenshots. First, we evaluated F-DNS using images subjected to various content-preserving operations. We compared them with their original images, achieving better correlation coefficients than other state-of-the-art methods, especially in the case of rotation. Then, we applied F-DNS to categorize Tor domains using the Darknet Usage Service Images-2K (DUSI-2K), a dataset with screenshots of active Tor service domains. Finally, we measured the performance of F-DNS against an image classification approach and a state-of-the-art hashing method. Our proposal obtained 98.75% accuracy on Tor images, surpassing all other methods compared.
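To make the general notion of hash-based screenshot classification concrete, here is a minimal sketch. It does not implement F-DNS, whose details the abstract does not give; it swaps in a simple average hash compared by Hamming distance, with nearest-reference classification. The function names, the 2x2 grayscale grids, and the labels `category_a` and `category_b` are hypothetical.

```python
from typing import Dict, List, Sequence

Image = Sequence[Sequence[int]]  # grayscale pixel grid, values 0-255


def average_hash(pixels: Image) -> List[int]:
    """Average hash: one bit per pixel, set when the pixel exceeds the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def classify(query: Image, references: Dict[str, Image]) -> str:
    """Return the label of the reference whose hash is closest to the query."""
    query_hash = average_hash(query)
    return min(
        references,
        key=lambda label: hamming(query_hash, average_hash(references[label])),
    )


# Hypothetical reference screenshots, already downscaled to 2x2 grayscale.
references = {
    "category_a": [[200, 200], [10, 10]],
    "category_b": [[10, 200], [10, 200]],
}
# A slightly altered version of the "category_a" reference.
query = [[180, 210], [30, 20]]

print(classify(query, references))
```

Because the hash depends only on which pixels lie above the mean, small brightness changes leave it unchanged. Unlike the rotation robustness the abstract reports for F-DNS, this simple hash is not robust to rotation.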

Classification of Industrial Control Systems screenshots using Transfer Learning

May 21, 2020

Abstract: Industrial Control Systems depend heavily on security and monitoring protocols. Several tools are available for this purpose, which scout vulnerabilities and take screenshots from various control panels for later analysis. However, they do not adequately classify images into specific control groups, which can hinder the work of human operators. To solve this problem, we use transfer learning with five CNN architectures, pre-trained on ImageNet, to determine which one best classifies screenshots obtained from Industrial Control Systems. Using 337 manually labeled images, we train these architectures and study their performance in terms of accuracy and of CPU and GPU time. We find that MobileNetV1 is the best architecture, based on its F1-score of 97.95% and its CPU speed of 0.47 seconds per image. In systems where time is critical and a GPU is available, VGG16 is preferable because it takes 0.04 seconds to process images, although its performance drops to 87.67%.
