Francisco Ibarrola

Affect-Conditioned Image Generation

Feb 20, 2023

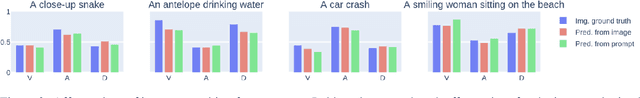

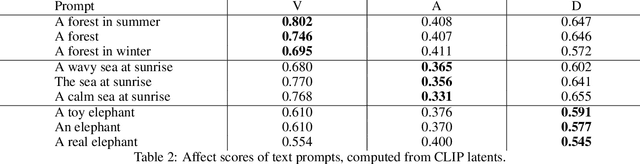

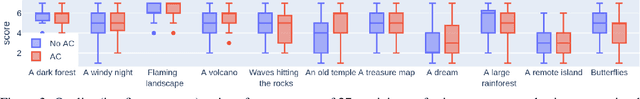

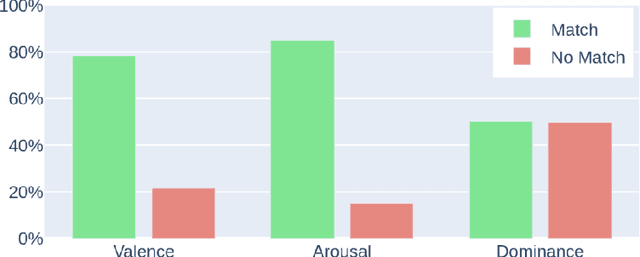

Abstract: In creativity support and computational co-creativity contexts, the task of discovering appropriate prompts for use with text-to-image generative models remains difficult. In many cases the creator wishes to evoke a certain impression with the image, but conveying that succinctly in a text prompt poses a challenge: affective language is nuanced, complex, and model-specific. In this work we introduce a method for generating images conditioned on desired affect, quantified using a psychometrically validated three-component approach, that can be combined with conditioning on text descriptions. We first train a neural network for estimating the affect content of text and images from semantic embeddings, and then demonstrate how this can be used to exert control over a variety of generative models. We show examples of how affect modifies the outputs, provide quantitative and qualitative analysis of its capabilities, and discuss possible extensions and use cases.

Extreme Learning Machine design for dealing with unrepresentative features

Dec 04, 2019

Abstract: Extreme Learning Machines (ELMs) have become a popular tool in the field of Artificial Intelligence due to their very high training speed and generalization capabilities. Another advantage is that they have a single hyper-parameter that must be tuned: the number of hidden nodes. Most traditional approaches dictate that this parameter should be chosen smaller than the number of available training samples in order to avoid over-fitting. In fact, it has been proved that choosing the number of hidden nodes equal to the number of training samples yields a perfect training classification with probability 1 (w.r.t. the random parameter initialization). In this article we argue that, in spite of this, in some cases it may be beneficial to choose a much larger number of hidden nodes, depending on certain properties of the data. We explain why this happens and show some examples to illustrate how the model behaves. In addition, we present a pruning algorithm to cope with the additional computational burden associated with the enlarged ELM. Experimental results using electroencephalography (EEG) signals show an improvement in performance with respect to traditional ELM approaches, while reducing the extra computing time associated with the use of large architectures.
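
To make the role of the hidden-node count concrete, the following is a minimal sketch of a generic ELM, not the authors' implementation and not their pruning algorithm. It assumes a single hidden layer with fixed random weights, a tanh activation, and output weights fitted by least squares; the function names `train_elm` and `predict_elm` and the synthetic toy data are hypothetical choices made for illustration only.

```python
import numpy as np

def train_elm(X, Y, n_hidden, rng):
    """Fit a basic ELM: random hidden layer, least-squares output layer."""
    # Input weights and biases are drawn once at random and never trained.
    W = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    H = np.tanh(X @ W + b)  # hidden-layer outputs, shape (n_samples, n_hidden)
    # Only the output weights are learned, via a least-squares solve.
    beta = np.linalg.lstsq(H, Y, rcond=None)[0]
    return W, b, beta

def predict_elm(X, W, b, beta):
    """Return the raw output scores of a fitted ELM."""
    return np.tanh(X @ W + b) @ beta

# Synthetic toy problem (an assumption for illustration): 40 samples, 5 features,
# with labels that are not linearly separable.
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 5))
y = (X[:, 0] * X[:, 1] > 0).astype(int)
Y = np.eye(2)[y]  # one-hot targets

# Compare a small hidden layer with one as large as the training set.
results = {}
for n_hidden in (5, X.shape[0]):
    W, b, beta = train_elm(X, Y, n_hidden, rng)
    pred = predict_elm(X, W, b, beta).argmax(axis=1)
    results[n_hidden] = float(np.mean(pred == y))
    print(f"hidden nodes = {n_hidden:3d}, training accuracy = {results[n_hidden]:.2f}")
```

When the number of hidden nodes matches the number of training samples, the hidden-layer matrix is square and almost surely invertible, so the least-squares solve reproduces the training targets exactly. This only illustrates the training-set claim in the abstract; it says nothing about generalization or about the larger architectures the article argues for.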
