Francis Tom

RespireNet: A Deep Neural Network for Accurately Detecting Abnormal Lung Sounds in Limited Data Setting

Oct 31, 2020

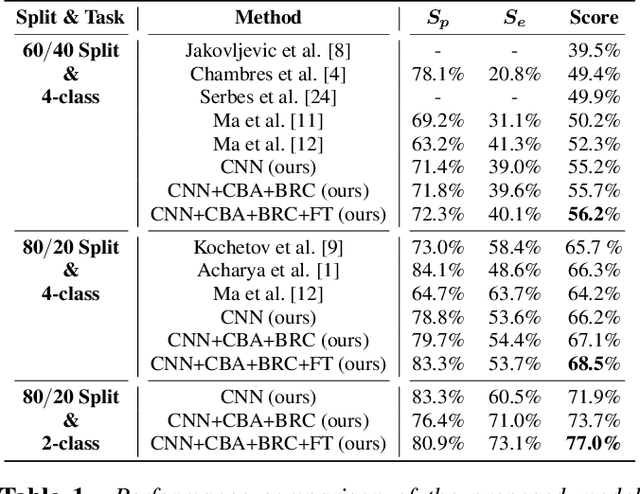

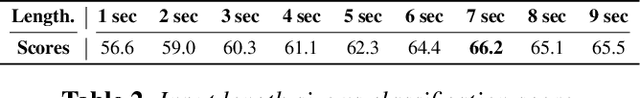

Abstract: Auscultation of respiratory sounds is the primary tool for screening and diagnosing lung diseases. Automated analysis, coupled with digital stethoscopes, can play a crucial role in enabling tele-screening of fatal lung diseases. Deep neural networks (DNNs) have shown a lot of promise for such problems and are an obvious choice. However, DNNs are extremely data hungry, and the largest respiratory dataset, ICBHI, has only 6898 breathing cycles, which is still small for training a satisfactory DNN model. In this work, RespireNet, we propose a simple CNN-based model along with a suite of novel techniques (device-specific fine-tuning, concatenation-based augmentation, blank region clipping, and smart padding) that enable us to use the small dataset efficiently. We perform extensive evaluation on the ICBHI dataset and improve upon the state-of-the-art results for 4-class classification by 2.2%.
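The abstract above names concatenation-based augmentation and smart padding without defining them. The sketch below shows one plausible reading, offered as an assumption rather than the authors' implementation: padding a short breathing cycle by repeating it cyclically instead of appending zeros, and creating a new training sample by concatenating two cycles that share a class label. The function names `cyclic_pad` and `concat_augment`, and the dictionary layout of the data, are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def cyclic_pad(cycle: Sequence[float], target_len: int) -> List[float]:
    """Fill target_len samples by repeating the cycle; truncate if it is longer.

    Assumption: "smart padding" is read here as cyclic repetition.
    """
    if not cycle:
        raise ValueError("cycle must be non-empty")
    if target_len < 0:
        raise ValueError("target_len must be non-negative")
    reps = -(-target_len // len(cycle))  # ceiling division
    return (list(cycle) * reps)[:target_len]


def concat_augment(
    cycles_by_class: Dict[str, List[Sequence[float]]],
    label: str,
    rng: random.Random,
) -> List[float]:
    """Build a new sample by joining two distinct cycles of the same class.

    Assumption: "concatenation-based augmentation" is read here as
    same-class concatenation; the new sample keeps the shared label.
    """
    first, second = rng.sample(cycles_by_class[label], 2)
    return list(first) + list(second)


if __name__ == "__main__":
    data = {"wheeze": [[0.1, 0.2, 0.3], [0.4, 0.5]]}
    augmented = concat_augment(data, "wheeze", random.Random(0))
    print(len(cyclic_pad(augmented, 8)))
```

Cyclic repetition keeps every padded sample filled with signal rather than silence, which is one reason such a scheme might be preferred over zero padding when the dataset is small.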

Learning a Deep Convolution Network with Turing Test Adversaries for Microscopy Image Super Resolution

Jan 18, 2019

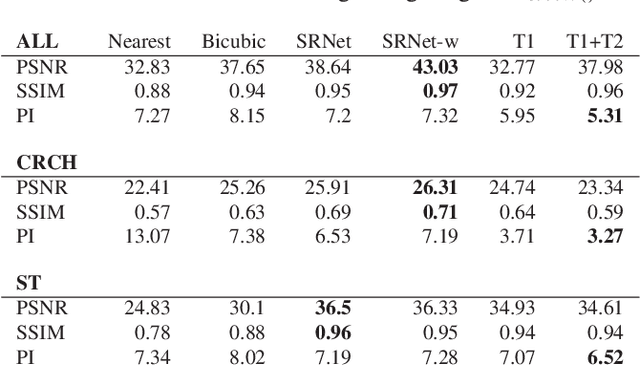

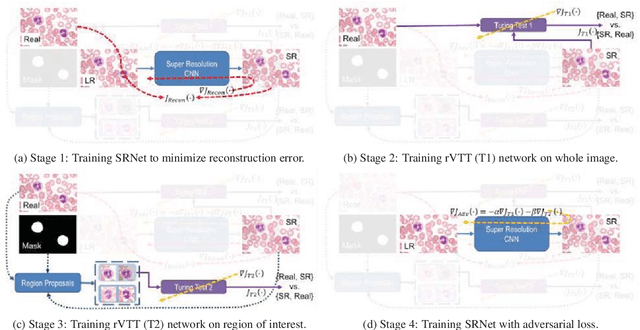

Abstract: Adversarially trained deep neural networks have significantly improved the performance of single-image super resolution by hallucinating photorealistic local textures, thereby greatly reducing the perceptual difference between a real high-resolution image and its super-resolved (SR) counterpart. However, application to medical imaging requires preserving diagnostically relevant features while refraining from introducing any diagnostically confusing artifacts. We propose using a deep convolutional super resolution network (SRNet) trained to (i) minimise the reconstruction loss between the real and SR images, and (ii) maximally confuse learned relativistic visual Turing test (rVTT) networks that discriminate between (a) a pair of real and SR images (T1) and (b) a pair of patches in the real and SR images selected from a region of interest (T2). The adversarial losses of T1 and T2, when backpropagated through SRNet, help it learn to reconstruct pathorealism in regions of interest such as white blood cells (WBC) in peripheral blood smears or epithelial cells in histopathology of cancerous biopsy tissues, both of which are experimentally demonstrated here. Our claims are substantiated by experiments measuring signal distortion loss, using peak signal-to-noise ratio (pSNR) and structural similarity (SSIM), across SR scale factors; the impact of the rVTT adversarial losses; and the impact on reporting when SR is used with a commercially available artificial intelligence (AI) digital pathology system.
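The abstract above reports distortion in terms of pSNR. As a reminder of what that metric measures, here is a minimal sketch of the standard definition, $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, applied to flattened pixel sequences. The function name `psnr` and the default 8-bit peak value of 255 are illustrative assumptions, not details taken from the paper.

```python
import math
from typing import Sequence


def psnr(
    reference: Sequence[float],
    reconstructed: Sequence[float],
    max_value: float = 255.0,  # assumed 8-bit intensity range
) -> float:
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(reconstructed) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - s) ** 2 for r, s in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no distortion
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    real = [10, 20, 30, 40]
    super_resolved = [12, 18, 33, 40]
    print(f"pSNR: {psnr(real, super_resolved):.2f} dB")
```

A higher value means the SR image is closer to the real image in the mean-squared sense; it says nothing about perceptual or diagnostic quality, which is why the abstract pairs it with SSIM and adversarial losses.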

UltraCompression: Framework for High Density Compression of Ultrasound Volumes using Physics Modeling Deep Neural Networks

Jan 17, 2019

Abstract: Ultrasound image compression that preserves speckle-based key information is a challenging task. In this paper, we introduce an ultrasound image compression framework that retains the realism of speckle appearance while achieving very high-density compression factors. The compressor employs a tissue segmentation method and transmits the segments along with the transducer frequency, number of samples, and image size as the essential information required for decompression. The decompressor is based on a convolutional network trained to generate patho-realistic ultrasound images that convey the essential information pertinent to the tissue pathology visible in the images. We demonstrate the generalizability of the building blocks by using two variants to build the compressor. We evaluate the quality of the decompressed images using distortion losses as well as perception loss, and compare it with other off-the-shelf solutions. The proposed method achieves a compression ratio of $725:1$ while preserving the statistical distribution of speckles. This enables image segmentation on decompressed images to achieve a Dice score of $0.89 \pm 0.11$, which is not achieved as accurately when images are compressed with current standards such as JPEG, JPEG 2000, WebP, and BPG. We envision this framework serving as a roadmap for speckle image compression standards.
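The two headline numbers in the abstract above, the compression ratio and the Dice score, follow standard definitions. The sketch below illustrates both on toy inputs; the function names, the flat binary-mask representation, and the byte counts are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """Ratio of original size to compressed size (725.0 corresponds to 725:1)."""
    if compressed_bytes <= 0:
        raise ValueError("compressed_bytes must be positive")
    return original_bytes / compressed_bytes


def dice_score(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two flat binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        return 1.0  # convention chosen here: two empty masks agree perfectly
    return 2.0 * intersection / total


if __name__ == "__main__":
    # Toy sizes chosen only so that the ratio matches the 725:1 figure.
    print(compression_ratio(725_000, 1_000))
    # Segmentation on a decompressed image compared with the reference mask.
    print(dice_score([1, 1, 0, 0], [1, 0, 0, 0]))
```

In the setting the abstract describes, `pred` would be the segmentation obtained from a decompressed image and `target` the segmentation of the original, so a Dice score near 1 indicates that compression preserved the structures the segmenter relies on.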

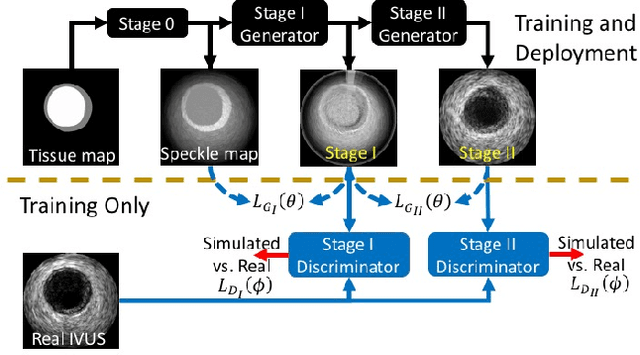

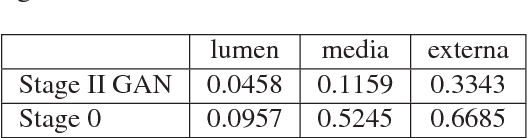

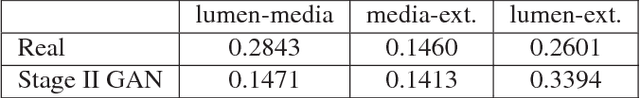

Simulating Patho-realistic Ultrasound Images using Deep Generative Networks with Adversarial Learning

Jan 08, 2018

Abstract: Ultrasound imaging makes use of the backscattering of waves during their interaction with scatterers present in biological tissues. Simulating synthetic ultrasound images is a challenging problem owing to the inability to accurately model various factors, including intra- and inter-scanline interference, transducer-to-surface coupling, artifacts on transducer elements, inhomogeneous shadowing, and nonlinear attenuation. Current approaches typically solve wave space equations, making them computationally expensive and slow to operate. We propose a generative adversarial network (GAN) inspired approach for fast simulation of patho-realistic ultrasound images. We apply the framework to intravascular ultrasound (IVUS) simulation. A stage 0 simulation performed using a pseudo B-mode ultrasound image simulator yields a speckle mapping of a digitally defined phantom. The stage I GAN subsequently refines this mapping to preserve tissue-specific speckle intensities. The stage II GAN refines it further to generate high-resolution images with patho-realistic speckle profiles. We evaluate the patho-realism of the simulated images with a visual Turing test, which indicates equivocal confusion in discriminating simulated from real images. We also quantify the shift in tissue-specific intensity distributions between the real and simulated images to demonstrate their similarity.
