Francesco Ventura

Explaining the Deep Natural Language Processing by Mining Textual Interpretable Features

Jun 12, 2021

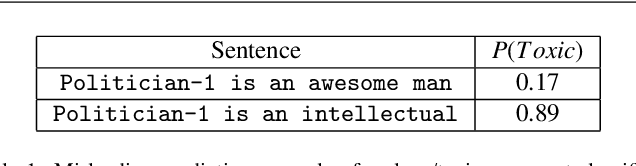

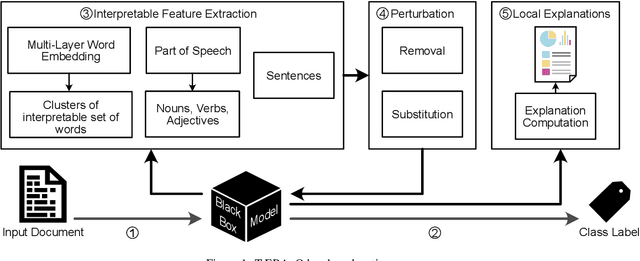

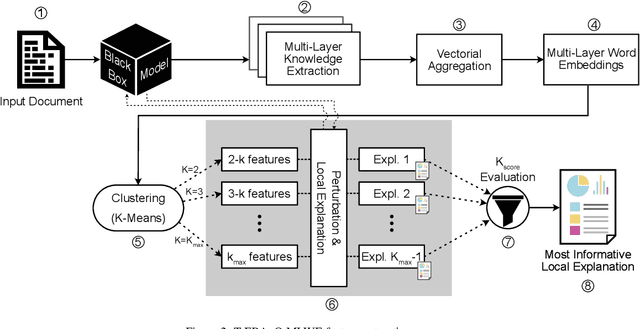

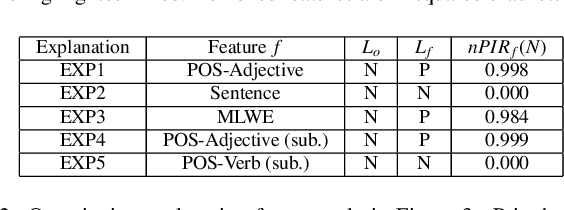

Abstract:Despite the high accuracy offered by state-of-the-art deep natural-language models (e.g. LSTM, BERT), their application in real-life settings is still widely limited, as they behave like a black-box to the end-user. Hence, explainability is rapidly becoming a fundamental requirement of future-generation data-driven systems based on deep-learning approaches. Several attempts to fulfill the existing gap between accuracy and interpretability have been done. However, robust and specialized xAI (Explainable Artificial Intelligence) solutions tailored to deep natural-language models are still missing. We propose a new framework, named T-EBAnO, which provides innovative prediction-local and class-based model-global explanation strategies tailored to black-box deep natural-language models. Given a deep NLP model and the textual input data, T-EBAnO provides an objective, human-readable, domain-specific assessment of the reasons behind the automatic decision-making process. Specifically, the framework extracts sets of interpretable features mining the inner knowledge of the model. Then, it quantifies the influence of each feature during the prediction process by exploiting the novel normalized Perturbation Influence Relation index at the local level and the novel Global Absolute Influence and Global Relative Influence indexes at the global level. The effectiveness and the quality of the local and global explanations obtained with T-EBAnO are proved on (i) a sentiment analysis task performed by a fine-tuned BERT model, and (ii) a toxic comment classification task performed by an LSTM model.

What's in the box? Explaining the black-box model through an evaluation of its interpretable features

Jul 31, 2019

Abstract:Algorithms are powerful and necessary tools behind a large part of the information we use every day. However, they may introduce new sources of bias, discrimination and other unfair practices that affect people who are unaware of it. Greater algorithm transparency is indispensable to provide more credible and reliable services. Moreover, requiring developers to design transparent algorithm-driven applications allows them to keep the model accessible and human understandable, increasing the trust of end users. In this paper we present EBAnO, a new engine able to produce prediction-local explanations for a black-box model exploiting interpretable feature perturbations. EBAnO exploits the hypercolumns representation together with the cluster analysis to identify a set of interpretable features of images. Furthermore two indices have been proposed to measure the influence of input features on the final prediction made by a CNN model. EBAnO has been preliminarily tested on a set of heterogeneous images. The results highlight the effectiveness of EBAnO in explaining the CNN classification through the evaluation of interpretable features influence.

Automating concept-drift detection by self-evaluating predictive model degradation

Jul 18, 2019

Abstract:A key aspect of automating predictive machine learning entails the capability of properly triggering the update of the trained model. To this aim, suitable automatic solutions to self-assess the prediction quality and the data distribution drift between the original training set and the new data have to be devised. In this paper, we propose a novel methodology to automatically detect prediction-quality degradation of machine learning models due to class-based concept drift, i.e., when new data contains samples that do not fit the set of class labels known by the currently-trained predictive model. Experiments on synthetic and real-world public datasets show the effectiveness of the proposed methodology in automatically detecting and describing concept drift caused by changes in the class-label data distributions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge