Francesco Rovida

SkiROS2: A skill-based Robot Control Platform for ROS

Jun 29, 2023

Abstract:The need for autonomous robot systems in both the service and the industrial domain is larger than ever. In the latter, the transition to small batches or even "batch size 1" in production has created a need for robot control system architectures that can provide the required flexibility. Such architectures must not only have a sufficient knowledge integration framework; they must also support autonomous mission execution and allow for interchangeability and interoperability between different tasks and robot systems. We introduce SkiROS2, a skill-based robot control platform on top of ROS. SkiROS2 proposes a layered, hybrid control structure for automated task planning and reactive execution, supported by a knowledge base for reasoning about the world state and entities. The scheduling formulation builds on the extended behavior tree model that merges task-level planning and execution. This allows for a high degree of modularity and a fast reaction to changes in the environment. The skill formulation based on pre-, hold- and post-conditions makes it possible to organize robot programs and to compose diverse skills ranging from perception to low-level control and the incorporation of external tools. We relate SkiROS2 to the field and outline three example use cases that cover task planning, reasoning, multisensory input, integration in a manufacturing execution system and reinforcement learning.
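The pre-, hold- and post-condition formulation mentioned in the abstract can be illustrated with a small sketch. This is not the SkiROS2 API: the `Skill` class, the flat dictionary used as a world state, and the `pick` example are hypothetical names chosen only to show how the three kinds of conditions could gate a skill before, during and after execution.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

World = Dict[str, object]            # toy world state (assumption): flat key/value store
Condition = Callable[[World], bool]


@dataclass
class Skill:
    """Hypothetical skill description; illustrative only, not the SkiROS2 API."""
    name: str
    step: Callable[[World], bool]    # one execution tick; returns True when finished
    pre: List[Condition] = field(default_factory=list)
    hold: List[Condition] = field(default_factory=list)
    post: List[Condition] = field(default_factory=list)

    def run(self, world: World, max_ticks: int = 100) -> str:
        # Pre-conditions must be true before the skill may start.
        if not all(cond(world) for cond in self.pre):
            return "precondition_failed"
        for _ in range(max_ticks):
            # Hold-conditions are re-checked on every tick while the skill runs.
            if not all(cond(world) for cond in self.hold):
                return "hold_condition_failed"
            if self.step(world):
                break
        else:
            return "timeout"
        # Post-conditions verify the expected effect on the world state.
        if all(cond(world) for cond in self.post):
            return "success"
        return "postcondition_failed"


def pick_step(world: World) -> bool:
    """Toy action: the gripper immediately holds the target object."""
    world["holding"] = world["target"]
    return True


pick = Skill(
    name="pick",
    step=pick_step,
    pre=[lambda w: w.get("holding") is None],
    hold=[lambda w: bool(w.get("robot_ok", False))],
    post=[lambda w: w.get("holding") == w.get("target")],
)
```

Calling `pick.run({"holding": None, "target": "gear", "robot_ok": True})` walks through all three checks. Keeping the conditions as plain predicates over the world state is one way a planner could inspect them without executing the skill.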

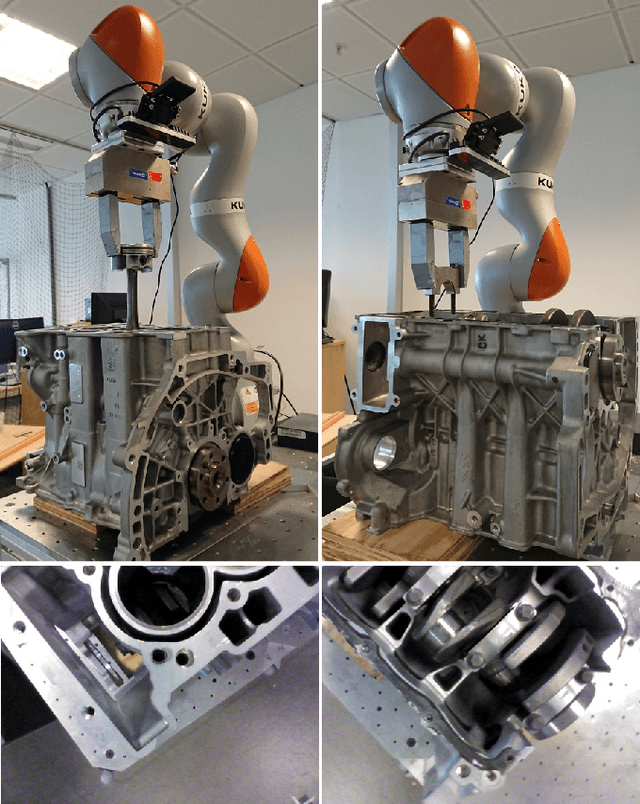

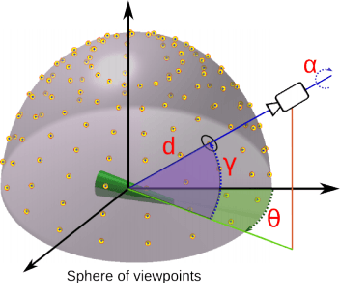

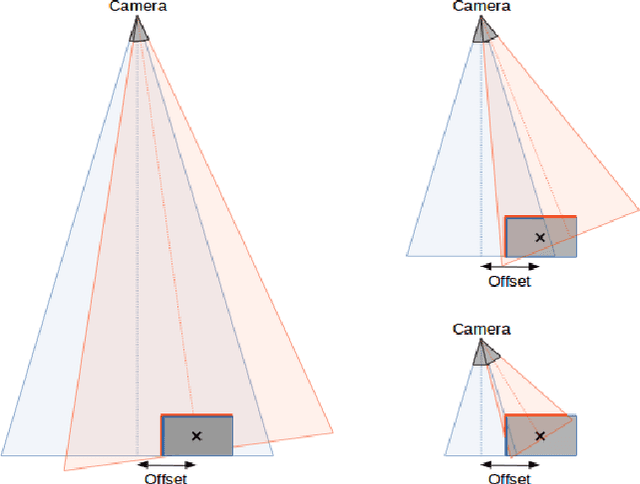

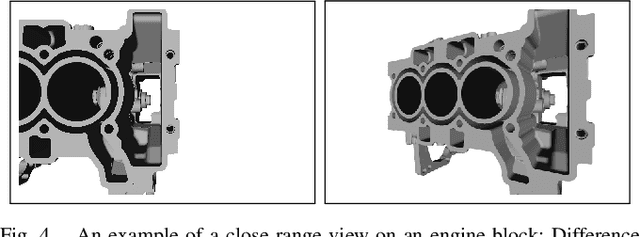

Continuous close-range 3D object pose estimation

Oct 02, 2020

Abstract:In the context of future manufacturing lines, removing fixtures will be a fundamental step to increase the flexibility of autonomous systems in assembly and logistic operations. Vision-based 3D pose estimation is a necessity to accurately handle objects that might not be placed at fixed positions during the robot task execution. Industrial tasks bring multiple challenges for the robust pose estimation of objects, such as difficult object properties, tight cycle times and constraints on camera views. In particular, when interacting with objects, we have to work with close-range partial views of objects, which pose a new challenge for typical view-based pose estimation methods. In this paper, we present a 3D pose estimation method based on a gradient-ascent particle filter that integrates new observations on-the-fly to improve the pose estimate. Thereby, we can apply this method online during task execution to save valuable cycle time. In contrast to other view-based pose estimation methods, we model potential views in full 6-dimensional space, which allows us to cope with close-range partial object views. We demonstrate the approach on a real assembly task, in which the algorithm usually converges to the correct pose within 10-15 iterations with an average accuracy of less than 8 mm.
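The idea of a particle filter whose particles also take gradient-ascent steps on the observation likelihood can be sketched on a toy problem. The code below is not the authors' method: it estimates a 2D position instead of a 6D pose, assumes an isotropic Gaussian observation model, and every name and parameter value (`update`, `run_demo`, `sigma`, `step`, `jitter`) is an assumption made for illustration.

```python
import math
import random


def log_likelihood(particle, obs, sigma):
    """Log of an isotropic Gaussian observation model, up to a constant."""
    return -sum((o - p) ** 2 for p, o in zip(particle, obs)) / (2 * sigma ** 2)


def gradient(particle, obs, sigma):
    """Gradient of log_likelihood with respect to the particle state."""
    return [(o - p) / sigma ** 2 for p, o in zip(particle, obs)]


def update(particles, obs, rng, sigma=0.05, step=0.001, jitter=0.002):
    """Integrate one new observation: ascend, weight, resample."""
    # 1) Move every particle one gradient-ascent step up the log-likelihood.
    moved = []
    for p in particles:
        g = gradient(p, obs, sigma)
        moved.append([pi + step * gi for pi, gi in zip(p, g)])
    # 2) Weight the moved particles (subtract the max log-weight for stability).
    log_w = [log_likelihood(p, obs, sigma) for p in moved]
    max_log_w = max(log_w)
    weights = [math.exp(lw - max_log_w) for lw in log_w]
    # 3) Resample in proportion to the weights and add a little diffusion noise.
    chosen = rng.choices(moved, weights=weights, k=len(moved))
    return [[c + rng.gauss(0.0, jitter) for c in p] for p in chosen]


def estimate(particles):
    """Mean of the particle set, used as the state estimate."""
    n = len(particles)
    return [sum(p[i] for p in particles) / n for i in range(len(particles[0]))]


def run_demo(seed=0, iterations=15):
    """Toy run: noisy observations of a fixed 2D position (all values assumed)."""
    rng = random.Random(seed)
    true_pose = [0.30, -0.10]
    particles = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(200)]
    for _ in range(iterations):
        obs = [t + rng.gauss(0.0, 0.01) for t in true_pose]
        particles = update(particles, obs, rng)
    return true_pose, estimate(particles)
```

Each call to `update` folds in one observation, so the estimate can be refined while new measurements keep arriving. In a real pose estimator the Gaussian model would be replaced by an image-based similarity score over 6D poses.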
