Francesco Messina

Towards Safer Planetary Exploration: A Hybrid Architecture for Terrain Traversability Analysis in Mars Rovers

Oct 23, 2024

Abstract: The field of autonomous navigation for unmanned ground vehicles (UGVs) is growing steadily, and increasing levels of autonomy have been reached in the last few years. However, the task becomes more challenging when the focus shifts to the exploration of planetary surfaces such as Mars. In those situations, UGVs must navigate unstable and rugged terrain, which inevitably exposes the vehicle to more hazards, accidents, and, in extreme cases, complete mission failure. The paper addresses the challenges of autonomous navigation for UGVs in planetary exploration, particularly on Mars, by introducing a hybrid architecture for terrain traversability analysis that combines two approaches: appearance-based and geometry-based. The appearance-based method uses semantic segmentation via deep neural networks to classify different terrain types. This classification is further refined by a pixel-level terrain roughness classification obtained from the same RGB image, which assigns different costs based on the physical properties of the soil. The geometry-based method complements the appearance-based approach by evaluating the terrain's geometric features, identifying hazards that the appearance-based side may not detect. The outputs of both methods are combined into a comprehensive hybrid cost map. The proposed architecture was trained on synthetic datasets and developed as a ROS2 application for integration into broader autonomous navigation systems for harsh environments. Simulations performed in Unity show that the method can carry out traversability analysis online.
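The idea of fusing an appearance-based cost (terrain class refined by roughness) with a geometry-based cost can be illustrated with a minimal sketch. It assumes both branches already produce per-cell values on a shared grid, with costs in [0, 1] where 1 means lethal. The class names, cost tables, roughness levels, slope limit, blending weight, and the cell-wise maximum used for fusion are all hypothetical choices made for illustration; they are not the cost values or fusion rule used in the paper, and the ROS2 integration is left out entirely.

```python
from typing import Dict, List

Grid = List[List[float]]

# Hypothetical per-class traversal costs (0 = free, 1 = lethal); not from the paper.
CLASS_COST: Dict[str, float] = {"soil": 0.2, "bedrock": 0.1, "sand": 0.6, "big_rock": 1.0}

# Hypothetical cost per roughness level (0 = smooth, 1 = rough, 2 = very rough).
ROUGHNESS_COST: List[float] = [0.0, 0.3, 0.7]


def appearance_cost(labels: List[List[str]], roughness: List[List[int]],
                    w_class: float = 0.7) -> Grid:
    """Blend the semantic-class cost and the roughness cost cell by cell."""
    w_rough = 1.0 - w_class
    return [
        [w_class * CLASS_COST[lab] + w_rough * ROUGHNESS_COST[r]
         for lab, r in zip(label_row, rough_row)]
        for label_row, rough_row in zip(labels, roughness)
    ]


def geometry_cost(slope_deg: Grid, max_slope_deg: float = 25.0) -> Grid:
    """Map slope linearly to cost; slopes at or above the (hypothetical) limit become lethal (1.0)."""
    return [[min(s / max_slope_deg, 1.0) for s in row] for row in slope_deg]


def hybrid_cost(app: Grid, geo: Grid) -> Grid:
    """Fuse by keeping the worse estimate in each cell, so a geometric hazard
    that the camera-based branch misses still dominates the final cost."""
    return [[max(a, g) for a, g in zip(row_a, row_g)] for row_a, row_g in zip(app, geo)]


# Usage on a 2x2 toy grid (all values hypothetical).
labels = [["soil", "sand"], ["bedrock", "big_rock"]]
roughness = [[0, 1], [0, 2]]
slopes = [[5.0, 10.0], [30.0, 2.0]]
hybrid = hybrid_cost(appearance_cost(labels, roughness), geometry_cost(slopes))
```

Taking the cell-wise maximum is a conservative design choice: the bottom-left cell looks like easy bedrock to the appearance branch, but its 30° slope makes it lethal in the fused map. A weighted sum would be an equally plausible alternative that trades safety margin for fewer rejected cells.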

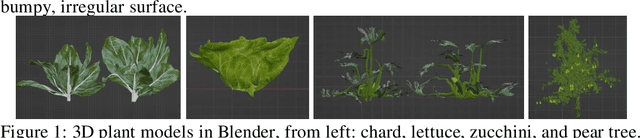

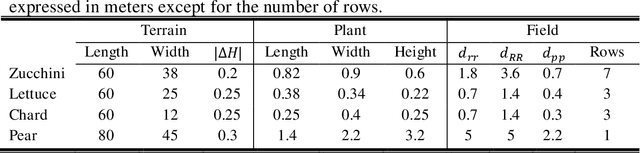

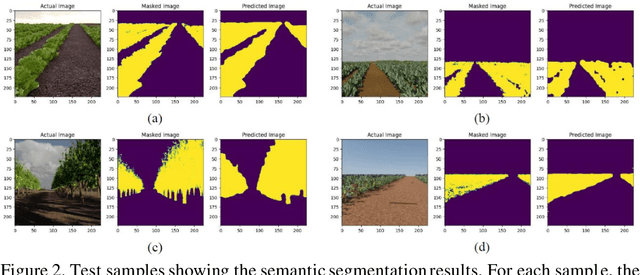

Enhancing Navigation Benchmarking and Perception Data Generation for Row-based Crops in Simulation

Jun 27, 2023

Abstract: Service robotics has recently been enhancing precision agriculture, enabling many automated processes based on efficient autonomous navigation solutions. However, data generation and in-field validation campaigns hinder the progress of large-scale autonomous platforms. Simulated environments and deep visual perception are increasingly used as effective tools to speed up the development of robust navigation with low-cost RGB-D cameras. In this context, the contribution of this work is twofold: a synthetic dataset to train deep semantic segmentation networks, together with a collection of virtual scenarios for fast evaluation of navigation algorithms. Moreover, an automatic parametric approach is developed to explore different field geometries and features. The simulation framework and the dataset have been evaluated by training a deep segmentation network on different crops and benchmarking the resulting navigation.
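One way to picture an automatic parametric approach to field geometry is a generator that turns a handful of parameters into plant positions, plus a sweep over parameter combinations. The sketch below is an illustration under stated assumptions only: `FieldParams`, `generate_plants`, `sweep`, and every parameter (row spacing, row length, plant spacing, sinusoidal row curvature, missing-plant probability) are hypothetical and are not taken from the paper's simulation framework.

```python
import itertools
import math
import random
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class FieldParams:
    """Hypothetical knobs describing one synthetic row-crop field."""
    n_rows: int = 4
    row_spacing: float = 0.75    # metres between row centre lines
    row_length: float = 10.0     # metres along each row
    plant_spacing: float = 0.25  # metres between plants within a row
    curve_amp: float = 0.0       # lateral sine offset (metres); 0 gives straight rows
    curve_period: float = 20.0   # wavelength of the row curvature (metres)
    gap_prob: float = 0.0        # probability that a given plant is missing


def generate_plants(p: FieldParams, seed: int = 0) -> List[Tuple[float, float]]:
    """Return (x, y) plant positions; x runs along the rows, y across them."""
    rng = random.Random(seed)  # seeded so each generated field is reproducible
    n_plants = int(p.row_length / p.plant_spacing) + 1
    plants: List[Tuple[float, float]] = []
    for r in range(p.n_rows):
        y0 = r * p.row_spacing
        for i in range(n_plants):
            x = i * p.plant_spacing
            if rng.random() < p.gap_prob:
                continue  # simulate a missing plant
            y = y0 + p.curve_amp * math.sin(2 * math.pi * x / p.curve_period)
            plants.append((x, y))
    return plants


def sweep(row_spacings: Iterable[float], curve_amps: Iterable[float]) -> List[FieldParams]:
    """Build one FieldParams per combination (Cartesian product) of the given values."""
    return [FieldParams(row_spacing=s, curve_amp=a)
            for s, a in itertools.product(row_spacings, curve_amps)]
```

Each `FieldParams` in a sweep could then be turned into a virtual scenario, for example by spawning one plant model per generated position in the simulator, so that a navigation algorithm is benchmarked across many field layouts rather than a single hand-built one.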
