Francesca De Piano

Application of the nnU-Net for automatic segmentation of lung lesion on CT images, and implication on radiomic models

Sep 24, 2022

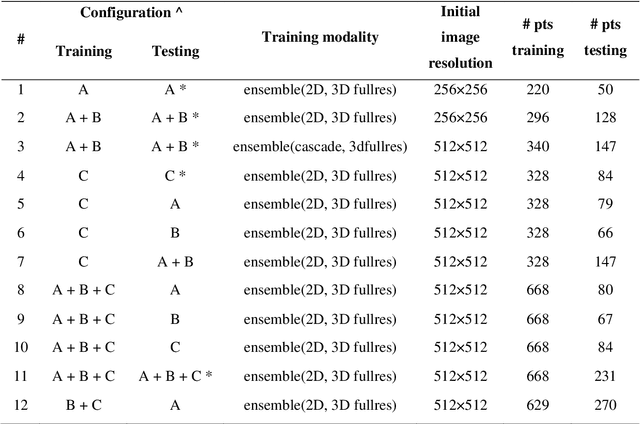

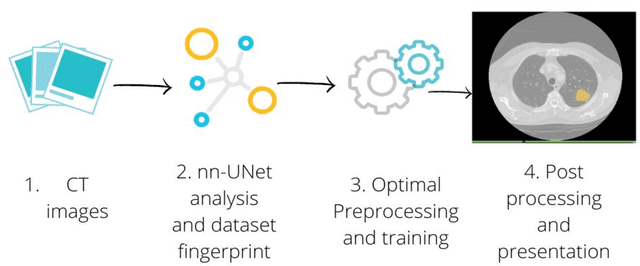

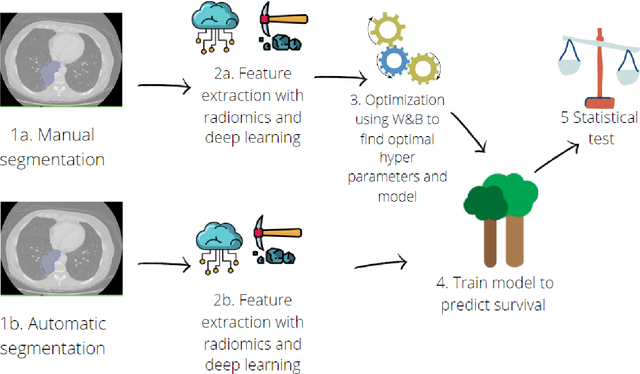

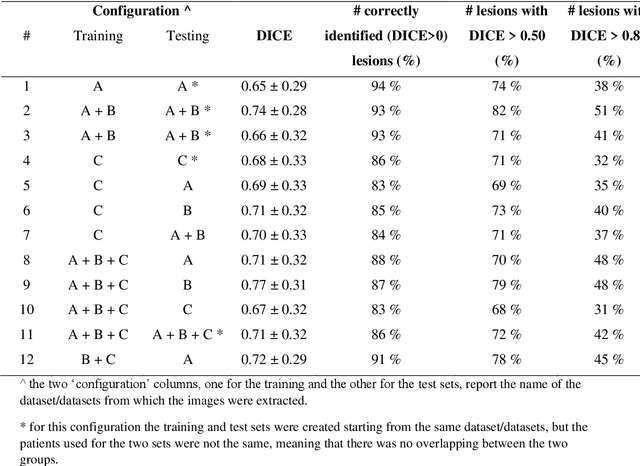

Abstract: Lesion segmentation is a crucial step of the radiomic workflow. Manual segmentation is time-consuming and prone to variability, which hampers both the feasibility and the robustness of radiomic studies. In this study, a deep-learning automatic segmentation method was applied to computed tomography (CT) images of non-small-cell lung cancer (NSCLC) patients. The impact of using manual versus automatic segmentation on the performance of survival radiomic models was also assessed.

METHODS: A total of 899 NSCLC patients were included from three datasets (two proprietary, A and B, and one public, C). Automatic segmentation of lung lesions was performed by training a previously developed architecture, the nnU-Net, including 2D, 3D and cascade approaches. The quality of automatic segmentation was evaluated with the DICE coefficient, taking manual contours as reference. The impact of automatic segmentation on the performance of a radiomic model for patient survival was explored by extracting hand-crafted and deep-learning radiomic features from the manual and automatic contours of dataset A, and feeding them to different machine learning algorithms to classify survival as above or below the median. The models' accuracies were assessed and compared.

RESULTS: The best agreement between automatic and manual contours, DICE = 0.78 ± 0.12, was achieved by averaging predictions from the 2D and 3D models and applying a post-processing technique to extract the maximum connected component. No statistically significant differences were observed in the performance of survival models when using manual or automatic contours, or hand-crafted or deep features. The best classifier showed an accuracy between 0.65 and 0.78.

CONCLUSION: The promising role of nnU-Net for automatic segmentation of lung lesions was confirmed: it dramatically reduces physicians' time-consuming workload without impairing the accuracy of survival predictive models based on radiomics.
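The segmentation evaluation described in the abstract (averaging the 2D and 3D predictions, keeping the maximum connected component, and scoring against the manual contour with the DICE coefficient) can be illustrated with a minimal sketch. This is not the nnU-Net code or the authors' pipeline. The function names (`dice`, `largest_component`, `ensemble_mask`), the 0.5 threshold, the face-connectivity rule and the toy arrays are all assumptions made for illustration.

```python
from collections import deque

import numpy as np


def dice(a, b):
    """DICE coefficient between two binary masks: 2|A∩B| / (|A| + |B|)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumption)
    return 2.0 * np.logical_and(a, b).sum() / total


def largest_component(mask):
    """Keep only the largest face-connected component of a binary mask."""
    mask = np.asarray(mask, dtype=bool)
    visited = np.zeros_like(mask)
    # One step forward and backward along every axis (face connectivity).
    offsets = []
    for axis in range(mask.ndim):
        for step in (-1, 1):
            off = [0] * mask.ndim
            off[axis] = step
            offsets.append(tuple(off))
    best = []
    for seed in zip(*np.nonzero(mask)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, component = deque([seed]), [seed]
        while queue:  # breadth-first flood fill from the seed voxel
            current = queue.popleft()
            for off in offsets:
                nb = tuple(c + o for c, o in zip(current, off))
                inside = all(0 <= n < s for n, s in zip(nb, mask.shape))
                if inside and mask[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
                    component.append(nb)
        if len(component) > len(best):
            best = component
    out = np.zeros_like(mask)
    if best:
        out[tuple(np.array(best).T)] = True
    return out


def ensemble_mask(prob_2d, prob_3d, threshold=0.5):
    """Average two foreground-probability maps, threshold, post-process."""
    mean_prob = (np.asarray(prob_2d, float) + np.asarray(prob_3d, float)) / 2.0
    return largest_component(mean_prob >= threshold)


# Toy 2D example (hypothetical data): a 3x3 manual lesion and two model outputs.
manual = np.zeros((6, 6), dtype=bool)
manual[1:4, 1:4] = True

prob_2d = np.zeros((6, 6))
prob_2d[1:4, 1:4] = 0.9
prob_2d[5, 5] = 0.8          # spurious detection far from the lesion

prob_3d = np.zeros((6, 6))
prob_3d[1:4, 1:3] = 0.7      # the 3D model misses one column of the lesion
prob_3d[5, 5] = 0.6

raw = (prob_2d + prob_3d) / 2.0 >= 0.5
auto = ensemble_mask(prob_2d, prob_3d)

print(dice(raw, manual))   # 0.75, spurious voxel still present
print(dice(auto, manual))  # 0.8, after keeping the largest component
```

The flood fill is written in plain Python to keep the sketch dependency-light; on real CT volumes a library routine such as `scipy.ndimage.label` would normally be used to label connected components instead.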
