Fragkiskos Malliaros

The Factuality of Large Language Models in the Legal Domain

Sep 18, 2024

Abstract: This paper investigates the factuality of large language models (LLMs) as knowledge bases in the legal domain, in a realistic usage scenario: we allow for acceptable variations in the answer, and let the model abstain from answering when uncertain. First, we design a dataset of diverse factual questions about case law and legislation. We then use the dataset to evaluate several LLMs under different evaluation methods, including exact, alias, and fuzzy matching. Our results show that performance improves significantly under the alias and fuzzy matching methods. Further, we explore the impact of abstaining and of in-context examples, finding that both strategies enhance precision. Finally, we demonstrate that additional pre-training on legal documents, as seen with SaulLM, further improves factual precision from 63% to 81%.
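The three matching modes and the effect of abstention on precision can be illustrated with a minimal sketch. This is not the paper's evaluation code: the helper names (`normalize`, `is_correct`, `precision`), the example records, the use of `difflib.SequenceMatcher` for fuzzy matching, and the 0.8 threshold are all assumptions made for illustration.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace (an assumed normalization)."""
    return " ".join(text.lower().split())


def is_correct(prediction, gold, aliases=(), method="exact", threshold=0.8):
    """Judge one answer under 'exact', 'alias', or 'fuzzy' matching."""
    pred = normalize(prediction)
    candidates = [normalize(gold)]
    if method in ("alias", "fuzzy"):
        # Assumption: alias and fuzzy matching both accept known aliases.
        candidates += [normalize(a) for a in aliases]
    if method == "fuzzy":
        # Assumption: character-level similarity ratio with a fixed threshold.
        return any(
            SequenceMatcher(None, pred, c).ratio() >= threshold
            for c in candidates
        )
    return pred in candidates


def precision(records, method):
    """Fraction of correct answers among answered questions.

    A prediction of None stands for an abstention and is excluded
    from the denominator.
    """
    answered = [r for r in records if r["prediction"] is not None]
    if not answered:
        return 0.0
    hits = sum(
        is_correct(r["prediction"], r["gold"], r.get("aliases", ()), method)
        for r in answered
    )
    return hits / len(answered)


# Hypothetical records, invented for this sketch.
records = [
    {"prediction": "Roe v. Wade", "gold": "Roe v. Wade"},
    {"prediction": "GDPR", "gold": "General Data Protection Regulation",
     "aliases": ["GDPR"]},
    {"prediction": "Brown v Board of Education",
     "gold": "Brown v. Board of Education"},
    {"prediction": None, "gold": "Marbury v. Madison"},  # abstention
]

for method in ("exact", "alias", "fuzzy"):
    print(method, round(precision(records, method), 2))
```

On these toy records, precision rises from exact to alias to fuzzy matching, and the abstained question does not count against the model under any mode.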

Higher-order Clustering and Pooling for Graph Neural Networks

Sep 02, 2022

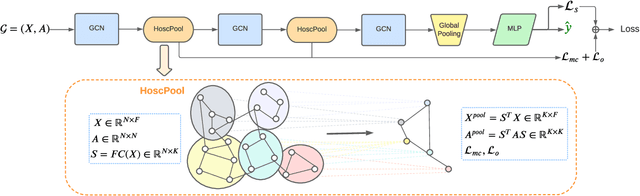

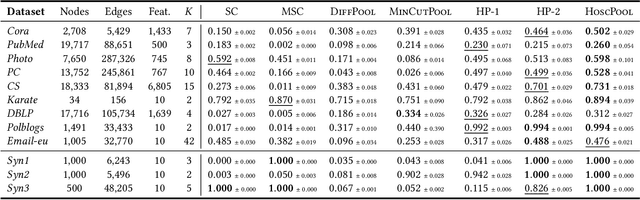

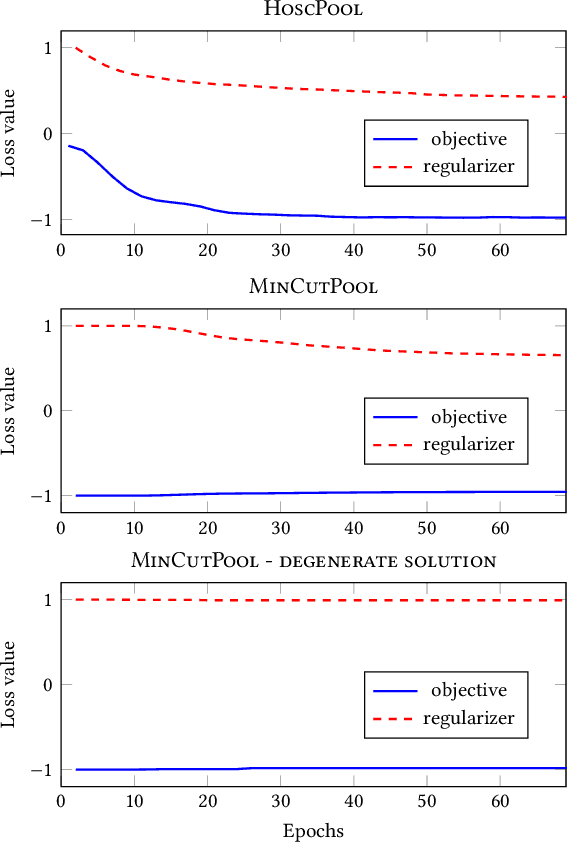

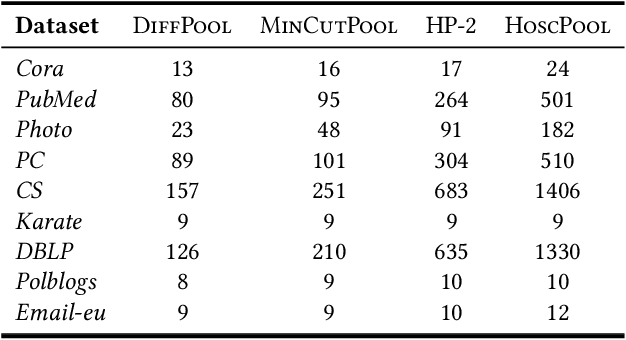

Abstract: Graph Neural Networks achieve state-of-the-art performance on a plethora of graph classification tasks, especially due to pooling operators, which aggregate learned node embeddings hierarchically into a final graph representation. However, these operators have been questioned by recent work showing on-par performance with random pooling, and they completely ignore higher-order connectivity patterns. To tackle this issue, we propose HoscPool, a clustering-based graph pooling operator that captures higher-order information hierarchically, leading to richer graph representations. Specifically, we learn a probabilistic cluster assignment matrix end-to-end by minimising relaxed formulations of motif spectral clustering in our objective function, and we then extend it to a pooling operator. We evaluate HoscPool on graph classification tasks and its clustering component on graphs with ground-truth community structure, achieving the best performance. Lastly, we provide a deep empirical analysis of pooling operators' inner functioning.
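To make "higher-order connectivity" concrete, the sketch below builds a triangle motif adjacency matrix, a quantity commonly used in the motif spectral clustering literature: entry (i, j) counts the triangles that contain edge (i, j). Whether HoscPool uses exactly this motif or this construction is an assumption here; the function name and the toy graph are hypothetical, and the graph is assumed undirected with no self-loops.

```python
def triangle_motif_adjacency(adj):
    """Return M where M[i][j] is the number of triangles containing edge (i, j).

    `adj` is a symmetric 0/1 adjacency matrix (list of lists) without
    self-loops. For such graphs M equals (A @ A) * A elementwise.
    """
    n = len(adj)
    motif = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and adj[i][j]:
                # Each common neighbour k of i and j closes one triangle.
                motif[i][j] = sum(
                    1 for k in range(n) if adj[i][k] and adj[j][k]
                )
    return motif


# Toy graph: a triangle 0-1-2 with a pendant node 3 attached to node 2.
adj = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
]

motif = triangle_motif_adjacency(adj)
for row in motif:
    print(row)
```

In the toy graph, the edge between nodes 2 and 3 belongs to no triangle, so it receives weight zero in the motif matrix even though it exists in the original adjacency matrix.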
