Floris Ernst

Comparison of Representation Learning Techniques for Tracking in time resolved 3D Ultrasound

Jan 10, 2022

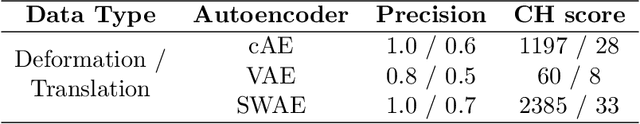

Abstract: 3D ultrasound (3DUS) is becoming increasingly attractive for target tracking in radiation therapy because it provides volumetric images in real time without ionizing radiation. It could potentially be used for tracking without fiducials. For this, a method for learning meaningful representations would be useful to recognize anatomical structures across time frames in a representation space (r-space). In this study, 3DUS patches are reduced to a 128-dimensional r-space using a conventional autoencoder, a variational autoencoder, and a sliced-Wasserstein autoencoder. In the r-space, the ability to separate different ultrasound patches and to recognize similar patches is investigated and compared on a dataset of liver images. Two metrics for evaluating tracking capability in the r-space are proposed. It is shown that ultrasound patches with different anatomical structures can be distinguished and that sets of similar patches can be clustered in r-space. The results indicate that the investigated autoencoders differ in their usability for target tracking in 3DUS.
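A sliced-Wasserstein autoencoder regularizes its latent space by penalizing the sliced-Wasserstein distance between the encoded codes and samples from a chosen prior. The following is a minimal NumPy sketch of a Monte Carlo estimate of that distance for 128-dimensional codes, not the authors' implementation. The function name `sliced_wasserstein`, the standard Gaussian prior and the number of random projections are illustrative assumptions.

```python
import numpy as np

def sliced_wasserstein(z, prior, n_projections=50, rng=None):
    """Monte Carlo estimate of the squared sliced Wasserstein-2 distance
    between two equally sized point clouds of shape (n, d).
    Hypothetical helper for illustration only."""
    rng = np.random.default_rng(rng)
    n, d = z.shape
    assert prior.shape == (n, d), "both sample sets must have the same shape"
    # Draw random directions and normalize them onto the unit sphere in R^d.
    theta = rng.normal(size=(n_projections, d))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)
    # Project both point clouds onto every direction: shape (n, n_projections).
    proj_z = z @ theta.T
    proj_p = prior @ theta.T
    # In 1D, optimal transport simply pairs the sorted samples.
    proj_z.sort(axis=0)
    proj_p.sort(axis=0)
    # Average the squared 1D transport cost over samples and projections.
    return float(np.mean((proj_z - proj_p) ** 2))

# Usage sketch: compare a batch of (here random) 128-d codes with prior samples.
rng = np.random.default_rng(0)
codes = rng.normal(size=(256, 128))           # stand-in for encoder outputs
prior_samples = rng.normal(size=(256, 128))   # assumed N(0, I) prior
print(sliced_wasserstein(codes, prior_samples, rng=1))
```

Sorting the 1D projections is what makes this distance cheap compared with full optimal transport in 128 dimensions, which is the usual motivation for the sliced variant.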

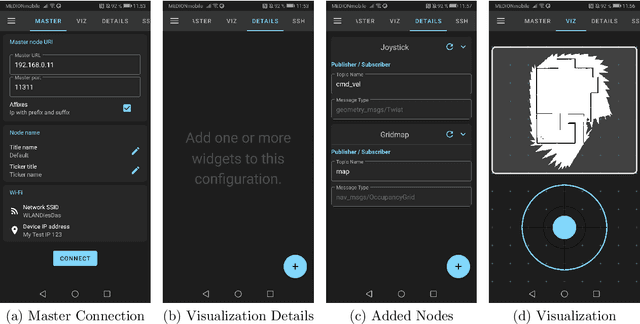

ROS-Mobile: An Android application for the Robot Operating System

Nov 05, 2020

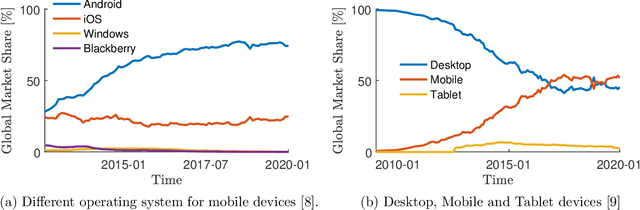

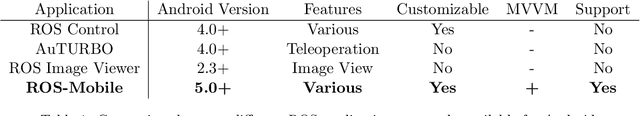

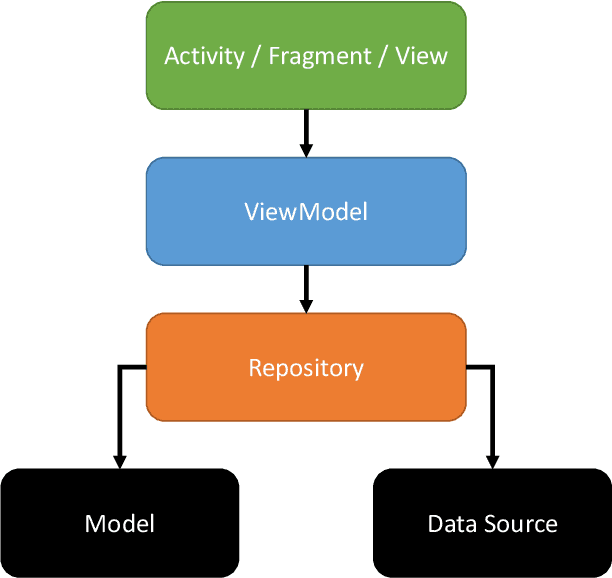

Abstract: Controlling and monitoring complex autonomous and semi-autonomous robotic systems is a challenging task. The Robot Operating System (ROS) was developed as a robotic middleware running on Ubuntu Linux that provides, among other things, hardware abstraction, message passing between individual processes, and package management. However, active support of ROS applications for mobile devices, such as smartphones or tablets, is missing. We developed a ROS application for Android that comes with an intuitive user interface for controlling and monitoring robotic systems. Our open-source contribution can be used for a large variety of tasks and with many different kinds of robots. Moreover, it can easily be customized and extended with new features. In this paper, we outline the software architecture and the main functionalities, and show some possible use cases on different mobile robotic systems.

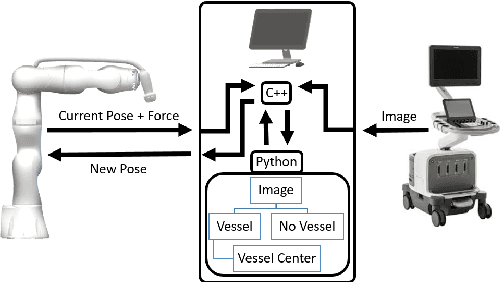

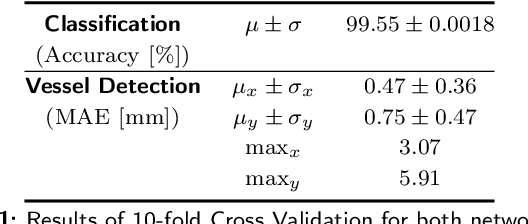

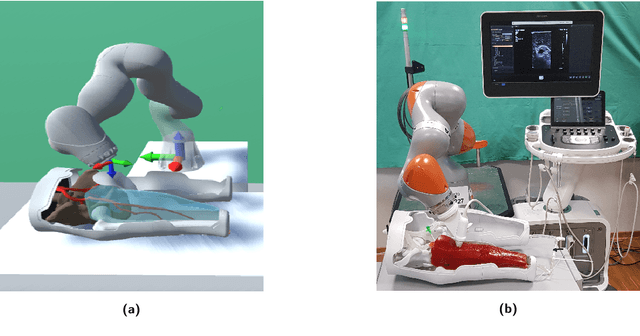

Robotized Ultrasound Imaging of the Peripheral Arteries -- a Phantom Study

Jul 13, 2020

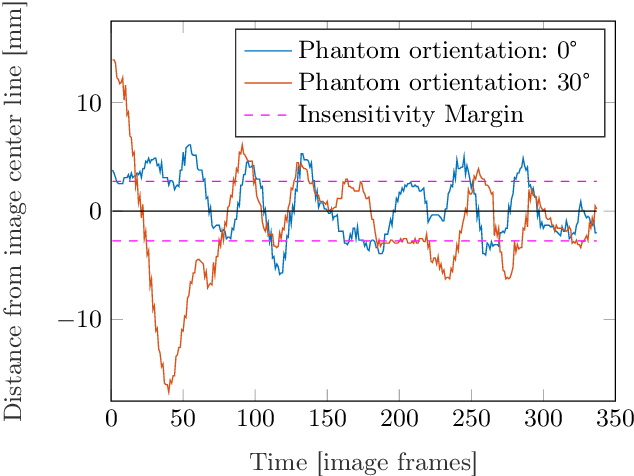

Abstract: The first choice in diagnostic imaging for patients suffering from peripheral arterial disease is 2D ultrasound (US). However, proper imaging requires a skilled and experienced sonographer, and the procedure is highly user-dependent. A robotized US system that autonomously scans the peripheral arteries has the potential to overcome these limitations. In this work, we extend a previously proposed system with a hierarchical image analysis pipeline based on convolutional neural networks that controls the robot. The system was evaluated by checking whether it could keep the vessel lumen of a leg phantom within the US image while scanning along the artery. In 100% of the images acquired during the scan, the whole vessel lumen was visible. With an insensitivity margin of 2.74 mm, the mean absolute distance between the vessel center and the horizontal image center line was 2.47 mm and 3.90 mm for an easy and a complex scenario, respectively. In conclusion, this system provides the basis for fully automated, radiation-free peripheral artery imaging in humans.