Comparison of Representation Learning Techniques for Tracking in time resolved 3D Ultrasound

Jan 10, 2022

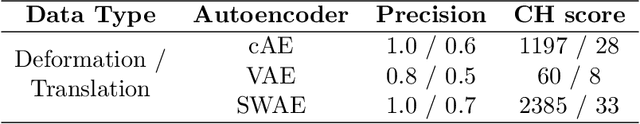

3D ultrasound (3DUS) is becoming increasingly attractive for target tracking in radiation therapy because it provides volumetric images in real time without ionizing radiation. It could potentially be used for tracking without fiducials. For this purpose, a method for learning meaningful representations would be useful, so that anatomical structures can be recognized across time frames in representation space (r-space). In this study, 3DUS patches are reduced to a 128-dimensional r-space using a conventional autoencoder, a variational autoencoder, and a sliced-Wasserstein autoencoder. Within the r-space, the ability to separate different ultrasound patches and to recognize similar patches is investigated and compared on a dataset of liver images. Two metrics for evaluating tracking capability in the r-space are proposed. The results show that ultrasound patches containing different anatomical structures can be distinguished and that sets of similar patches can be clustered in r-space. They also indicate that the investigated autoencoders differ in their usability for target tracking in 3DUS.
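To make the idea of recognizing a patch in r-space concrete, here is a minimal sketch of nearest-neighbor matching between 128-dimensional representations. It is an illustration under stated assumptions, not the method or the metrics proposed in the paper: the Euclidean distance, the function names `euclidean` and `track_in_r_space`, and the synthetic random vectors standing in for encoder outputs are all hypothetical choices made for this example.

```python
import math
import random

R_DIM = 128  # dimensionality of the r-space stated in the abstract


def euclidean(a, b):
    """Euclidean distance between two equally long vectors (assumed distance measure)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def track_in_r_space(reference, candidates):
    """Return (index, distance) of the candidate representation closest to the reference."""
    distances = [euclidean(reference, c) for c in candidates]
    best = min(range(len(candidates)), key=distances.__getitem__)
    return best, distances[best]


# Synthetic stand-ins for encoder outputs; a real pipeline would encode 3DUS patches.
rng = random.Random(0)
reference = [rng.gauss(0, 1) for _ in range(R_DIM)]

# Five candidate patches from a later frame: four unrelated, one (index 3) a
# slightly perturbed copy of the reference, mimicking the same anatomy.
candidates = [[rng.gauss(0, 1) for _ in range(R_DIM)] for _ in range(5)]
candidates[3] = [x + rng.gauss(0, 0.05) for x in reference]

best_index, best_distance = track_in_r_space(reference, candidates)
print(best_index, round(best_distance, 3))
```

In this toy setting the perturbed copy lies far closer to the reference than the unrelated vectors, which is the kind of separation a useful r-space would need to provide for real patches.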
