Florian Lingenfelser

The AffectToolbox: Affect Analysis for Everyone

Feb 23, 2024

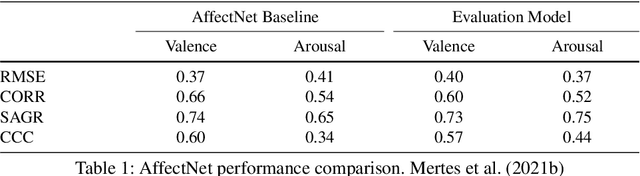

Abstract: In the field of affective computing, where research advances at a rapid pace, the demand for user-friendly tools has become increasingly apparent. In this paper, we present the AffectToolbox, a novel software system that aims to support researchers in developing affect-sensitive studies and prototypes. The proposed system addresses the challenges posed by existing frameworks, which often require profound programming knowledge and cater primarily to power users or skilled developers. To facilitate ease of use, the AffectToolbox requires no programming knowledge and offers its functionality for reliably analyzing the affective state of users through an accessible graphical user interface. The architecture encompasses a variety of models for emotion recognition on multiple affective channels and modalities, as well as an elaborate fusion system that merges multi-modal assessments into a unified result. The entire system is open source and will be publicly available, and its well-structured, Python-based code base is intended to ensure easy integration into more complex applications. It thereby marks a substantial contribution toward advancing affective computing research and fostering a more collaborative and inclusive environment within this interdisciplinary field.

Intercategorical Label Interpolation for Emotional Face Generation with Conditional Generative Adversarial Networks

Apr 26, 2022

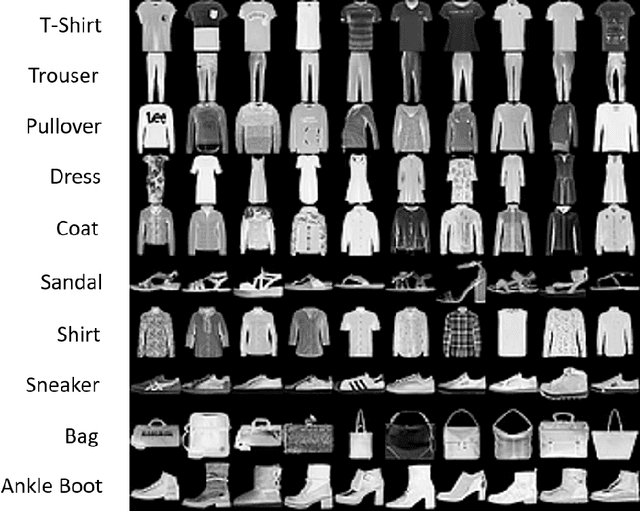

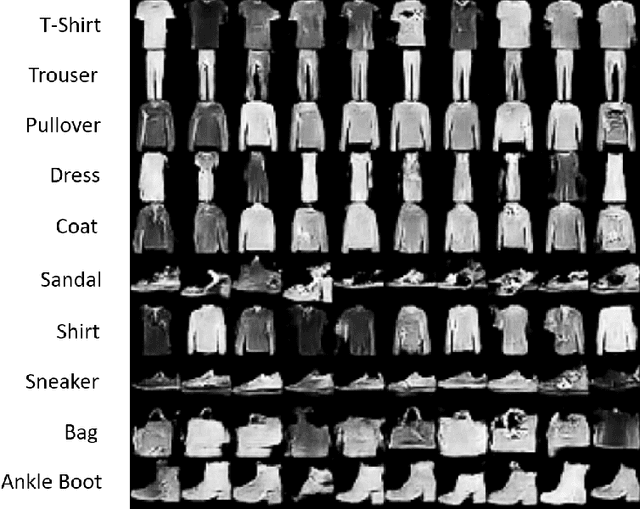

Abstract: Generative adversarial networks offer the possibility to generate deceptively real images that are almost indistinguishable from actual photographs. Such systems, however, rely on the presence of large datasets to realistically replicate the corresponding domain. This is especially a problem if not only random new images are to be generated, but specific (continuous) features are to be co-modeled. A particularly important use case in Human-Computer Interaction (HCI) research is the generation of emotional images of human faces, which can be used for various purposes, such as the automatic generation of avatars. The problem here lies in the availability of training data. Most suitable datasets for this task rely on categorical emotion models and therefore feature only discrete annotation labels. This greatly hinders the learning and modeling of smooth transitions between displayed affective states. To overcome this challenge, we explore the potential of label interpolation to enhance networks trained on categorical datasets with the ability to generate images conditioned on continuous features.
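The label interpolation idea mentioned in the abstract can be illustrated with a minimal sketch. It assumes one-hot categorical emotion labels and simple linear blending between two of them; the emotion names and the helper functions `one_hot` and `interpolate_labels` are hypothetical and not taken from the paper, and the conditional generator that would consume these vectors is omitted.

```python
import numpy as np

# Hypothetical categorical emotion classes, for illustration only.
EMOTIONS = ["neutral", "happy", "sad"]


def one_hot(index, num_classes):
    """Return a one-hot vector for a discrete class index."""
    vec = np.zeros(num_classes, dtype=np.float32)
    vec[index] = 1.0
    return vec


def interpolate_labels(source, target, num_classes, steps):
    """Linearly blend two one-hot labels into `steps` continuous condition vectors."""
    alphas = np.linspace(0.0, 1.0, steps, dtype=np.float32)
    a = one_hot(source, num_classes)
    b = one_hot(target, num_classes)
    # Each row is a convex combination of the two categorical labels.
    return np.stack([(1.0 - t) * a + t * b for t in alphas])


# Five condition vectors moving from "neutral" to "happy".
labels = interpolate_labels(
    EMOTIONS.index("neutral"), EMOTIONS.index("happy"), len(EMOTIONS), steps=5
)
```

In such a setup, each row of `labels` would be passed to a conditional generator in place of a discrete label, so that intermediate rows request a blend of the two emotions.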
